4.1: The Role of Socialization

4.1.1: The Role of Socialization

Socialization prepares people for social life by teaching them a group’s shared norms, values, beliefs, and behaviors.

Learning Objective

Describe the three goals of socialization and why each is important

Key Points

- Socialization prepares people to participate in a social group by teaching them its norms and expectations.

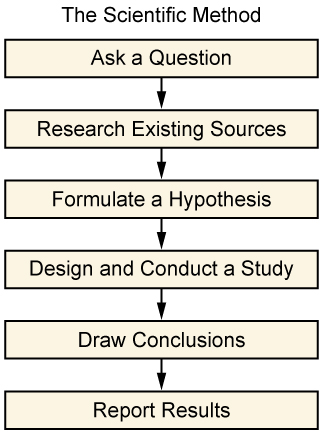

- Socialization has three primary goals: teaching impulse control and developing a conscience, preparing people to perform certain social roles, and cultivating shared sources of meaning and value.

- Socialization is culturally specific, but this does not mean certain cultures are better or worse than others.

Key Terms

- Jeffrey J. Arnett: In his 1995 paper, “Broad and Narrow Socialization: The Family in the Context of a Cultural Theory,” sociologist Jeffrey J. Arnett outlined his interpretation of the three primary goals of socialization.

- socialization: The process of learning one’s culture and how to live within it.

- norm: A rule that is enforced by members of a community.

Example

- The belief that killing is immoral is an American norm, learned through socialization. As children grow up, they are exposed to social cues that foster this norm, and they begin to form a conscience composed of this and other norms.

The role of socialization is to acquaint individuals with the norms of a given social group or society. It prepares individuals to participate in a group by illustrating the expectations of that group.

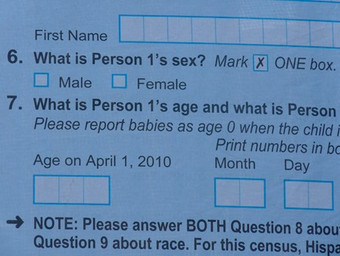

Socialization is very important for children, who begin the process at home with family, and continue it at school. They are taught what will be expected of them as they mature and become full members of society. Socialization is also important for adults who join new social groups. Broadly defined, it is the process of transferring norms, values, beliefs, and behaviors to future group members.

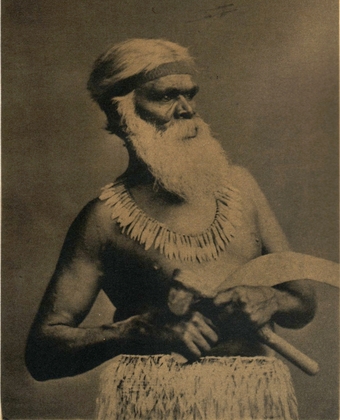

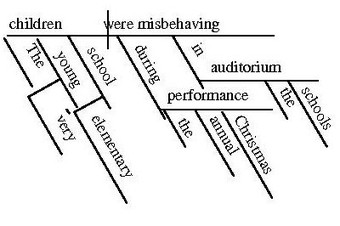

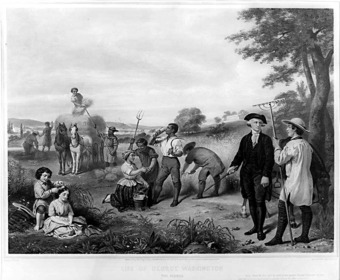

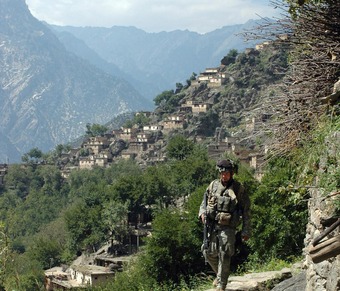

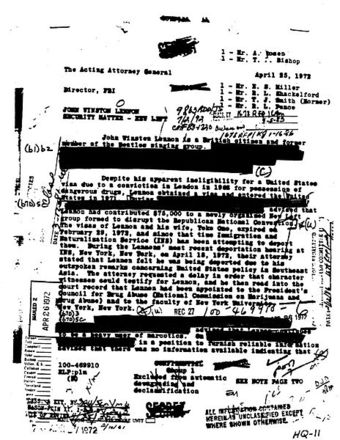

Socialization in School

Schools, such as this kindergarten in Afghanistan, serve as primary sites of socialization.

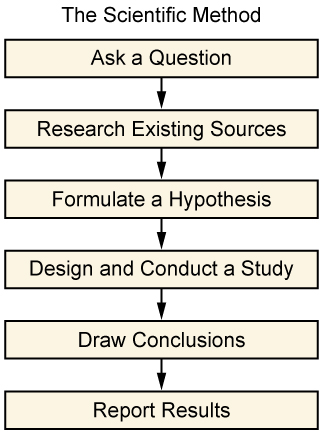

Three Goals of Socialization

In his 1995 paper, “Broad and Narrow Socialization: The Family in the Context of a Cultural Theory,” sociologist Jeffrey J. Arnett outlined his interpretation of the three primary goals of socialization. First, socialization teaches impulse control and helps individuals develop a conscience. This first goal is accomplished naturally: as people grow up within a particular society, they pick up on the expectations of those around them and internalize these expectations to moderate their impulses and develop a conscience. Second, socialization teaches individuals how to prepare for and perform certain social roles—occupational roles, gender roles, and the roles of institutions such as marriage and parenthood. Third, socialization cultivates shared sources of meaning and value. Through socialization, people learn to identify what is important and valued within a particular culture.

The term “socialization” refers to a general process, but socialization always takes place in specific contexts. Socialization is culturally specific: people in different cultures are socialized differently, to hold different beliefs and values, and to behave in different ways. Sociologists try to understand socialization, but they do not rank different schemes of socialization as good or bad; they study practices of socialization to determine why people behave the way that they do.

4.1.2: Nature vs. Nurture: A False Debate

Is nature (an individual’s innate qualities) or nurture (personal experience) more important in determining physical and behavioral traits?

Learning Objective

Discuss both sides of the nature versus nurture debate, understanding the implications of each

Key Points

- Nature refers to innate qualities like human nature or genetics.

- Nurture refers to care given to children by parents or, more broadly, to environmental influences such as media and marketing.

- The nature versus nurture debate raises philosophical questions about determinism and free will.

Key Terms

- determinism: The doctrine that all actions are determined by the current state and immutable laws of the universe, with no possibility of choice.

- nature: The innate characteristics of a thing; what something tends, by its own constitution, to be or do, as distinct from what might be expected or intended.

- nurture: The environmental influences that contribute to the development of an individual; see also nature.

Example

- Recently, the nature versus nurture debate has entered the realm of law and criminal defense. In some cases, lawyers for violent offenders have argued that criminal activity is caused by nature, that is, by genes, rather than by rational decision-making processes. Such arguments may suggest that the accused are less culpable for their crimes. A “genetic predisposition to violence” could become a mitigating factor in criminal cases if the science behind genetic determinants proves conclusive. “Nurture”-based explanations, such as a disadvantaged background, have in some cases already been accepted as mitigating factors.

The nature versus nurture debate rages over whether an individual’s innate qualities or personal experiences are more important in determining physical and behavioral traits.

In the social and political sciences, the nature versus nurture debate may be compared with the structure versus agency debate, a similar discussion over whether social structure or individual agency (choice or free will) is more important for determining individual and social outcomes.

Historically, the “nurture” in the nature versus nurture debate has referred to the care parents give to children. Today, however, the concept of nurture has expanded to include any environmental factor, which may arise from prenatal, parental, extended family, or peer experiences, or even from media, marketing, and socioeconomic status. Environmental factors may begin to influence development even before birth: a substantial amount of individual variation might be traced back to environmental influences that affect prenatal development.

The “nature” in the nature versus nurture debate generally refers to innate qualities. In historical terms, nature might refer to human nature or the soul. In modern scientific terms, it may refer to genetic makeup and biological traits. For example, researchers have long studied twins to determine the influence of biology on personality traits. These studies have revealed that twins raised separately still share many personality traits, lending credibility to the nature side of the debate. However, sample sizes are usually small, so the results must be generalized with caution.

Identical Twins

Because identical twins share the same genetic makeup, they are used in many studies to assess the nature versus nurture debate.

The nature versus nurture debate raises deep philosophical questions about free will and determinism. The “nature” side may be criticized for implying that we behave as we are naturally inclined to, rather than as we choose. Similarly, the “nurture” side may be criticized for implying that we behave in ways determined by our environment, not by ourselves.

Of course, sociologists point out that our environment is, at least in part, a social creation.

4.1.3: Sociobiology

Sociobiology examines and explains social behavior based on biological evolution.

Learning Objective

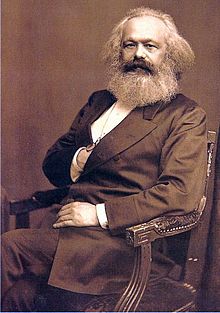

Discuss the concept of sociobiology in relation to natural selection and Charles Darwin, as well as genetics and instinctive behaviors

Key Points

- Sociobiologists believe that human behavior, like nonhuman animal behavior, can be partly explained as the outcome of natural selection.

- Sociobiologists are interested in instinctive, or intuitive behavior, and in explaining the similarities, rather than the differences, between cultures.

- Many critics draw an intellectual link between sociobiology and biological determinism, the belief that most human differences can be traced to specific genes rather than differences in culture or social environments.

Key Terms

- natural selection: A process by which heritable traits conferring survival and reproductive advantage to individuals, or related individuals, tend to be passed on to succeeding generations and become more frequent in a population, whereas other less favorable traits tend to become eliminated.

- biological determinism: The hypothesis that biological factors such as an organism’s genes (as opposed to social or environmental factors) determine psychological and behavioral traits.

- sociobiology: The science that applies the principles of evolutionary biology to the study of social behavior in both humans and animals.

Example

- A sociobiological explanation of humans running might argue that human beings are good at running because our bodies have evolved to run bipedally. In the course of human evolution, we had to run away from predators. Those who had the genetic makeup to be effective runners survived and passed along their genes, while those lacking the genetic predisposition for running were killed by predators.

Sociobiology is a field of scientific study based on the assumption that social behavior has resulted from evolution; it attempts to examine and explain social behavior within that context. Often considered a branch of both biology and sociology, it also draws from ethology, anthropology, evolution, zoology, archaeology, population genetics, and other disciplines. Within the study of human societies, sociobiology is very closely allied to the fields of Darwinian anthropology, human behavioral ecology, and evolutionary psychology. While the term “sociobiology” can be traced to the 1940s, the concept did not gain major recognition until 1975, with the publication of Edward O. Wilson’s book Sociobiology: The New Synthesis.

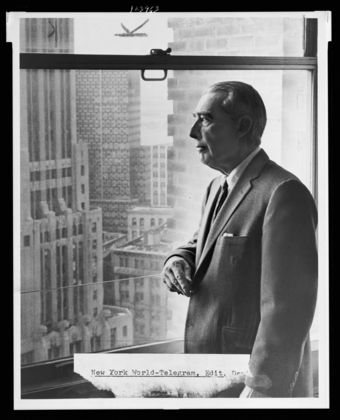

Edward O. Wilson

E. O. Wilson is a central figure in the history of sociobiology.

Sociobiologists believe that human behavior, like nonhuman animal behavior, can be partly explained as the outcome of natural selection. They contend that in order to fully understand behavior, it must be analyzed in terms of evolutionary considerations. Natural selection is fundamental to evolutionary theory: variants of hereditary traits that increase an organism’s ability to survive and reproduce are more likely to be passed on to subsequent generations. Thus, inherited behavioral mechanisms that gave an organism a greater chance of surviving and reproducing in the past are more likely to persist in present organisms.

Following this evolutionary logic, sociobiologists are interested in how behavior can be explained as a result of selective pressures in the history of a species. Thus, they are often interested in instinctive, or intuitive behavior, and in explaining the similarities, rather than the differences, between cultures. Sociobiologists reason that common behaviors likely evolved over time because they made individuals who exhibited those behaviors more likely to survive and reproduce.

Many critics draw an intellectual link between sociobiology and biological determinism, the belief that most human differences can be traced to specific genes rather than to differences in culture or social environments. Critics also see parallels between sociobiology and the biological determinism that underlay the social Darwinist and eugenics movements of the early 20th century, as well as controversies in the history of intelligence testing.

4.1.4: Deprivation and Development

Social deprivation, or prevention from culturally normal interaction with society, affects mental health and impairs child development.

Learning Objective

Explain why social deprivation is problematic for a person (especially children) and the issues it can lead to

Key Points

- As they develop, humans go through several critical periods, or windows of time during which they need to experience particular environmental stimuli in order to develop properly.

- Feral children provide an example of the effects of severe social deprivation during critical developmental periods.

- Attachment theory argues that infants must develop stable, ongoing relationships with at least one adult caregiver in order to form a basis for successful development.

- The term maternal deprivation is a catchphrase summarizing the early work of psychiatrist and psychoanalyst John Bowlby on the effects of separating infants and young children from their mother.

- In United States law, the “tender years” doctrine was long applied in child custody cases, leading courts to preferentially award custody of infants and toddlers to mothers.

Key Terms

- Attachment Theory: Attachment theory describes the dynamics of long-term relationships between humans. Its most important tenet is that an infant needs to develop a relationship with at least one primary caregiver for social and emotional development to occur normally.

- feral children: A feral child is a human child who has lived isolated from human contact from a very young age, and has no experience of human care, loving or social behavior, and, crucially, of human language.

- Social deprivation: Prevention from culturally normal interaction with society. In instances of social deprivation, particularly for children, social experiences tend to be less varied and development may be delayed or hindered.

Example

- Social deprivation theory has had implications for family law. “Tender years” laws in the United States are based on social attachment theories and social deprivation theories, especially the theory of maternal deprivation, developed by psychiatrist and psychoanalyst John Bowlby. Maternal deprivation theory explains the effects of separating infants and young children from their mother. The idea that separation from the female caregiver has profound effects is one with considerable resonance outside the conventional study of child development. In United States law, the “tender years” doctrine was long applied in child custody cases, and led courts to preferentially award custody of infants and toddlers to mothers.

Humans are social beings, and social interaction is essential to normal human development. Social deprivation occurs when an individual is deprived of culturally normal interaction with the rest of society. Certain groups of people are more likely to experience social deprivation, which often occurs along with a broad network of correlated factors that together contribute to social exclusion; these factors include mental illness, poverty, poor education, and low socioeconomic status.

Research based on observing and interviewing victims of social deprivation has shed light on how social deprivation is linked to human development and mental illness. As they develop, humans pass through critical periods, or windows of time during which they need to experience particular environmental stimuli in order to develop properly. Individuals who experience social deprivation during these periods miss the stimuli they need. Thus, social deprivation may delay or hinder development, especially for children.

Feral children provide an example of the effects of severe social deprivation during critical developmental periods. Feral children are children who grow up without social interaction. In some cases, they may have been abandoned early in childhood and grown up in the wilderness. In other cases, they may have been abused by parents who kept them isolated from other people. In several recorded cases, feral children failed to develop language skills, had only limited social understanding, and could not be rehabilitated.

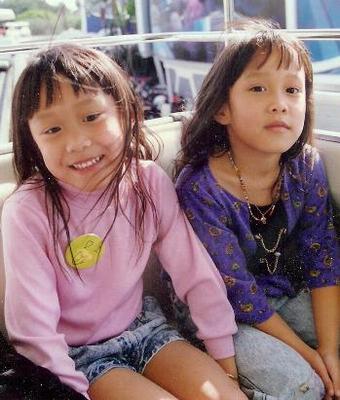

Attachment theory may explain why social deprivation has such dire effects for children. According to attachment theory, an infant needs to develop a relationship with at least one primary caregiver for social and emotional development to occur normally.

Maternal Deprivation

The idea that separation from the female caregiver has profound effects is one with considerable resonance outside the conventional study of child development.

4.1.5: Isolation and Development

Social isolation refers to a complete or near-complete lack of contact with society, which can affect all aspects of a person’s life.

Learning Objective

Interpret why social isolation can be problematic for a person in society and the importance of social connections

Key Points

- True social isolation is not the same as loneliness. It is often a chronic condition that persists for years and affects all aspects of a person’s existence.

- Emotional isolation describes a state in which an individual is emotionally isolated even though they may have a well-functioning social network.

- Social networks promote good health by providing direct support, encouraging healthy behaviors, and linking people with diffuse social networks that facilitate access to a wide range of resources supportive of health.

- Sociologists debate whether new technologies, such as the Internet and mobile phones, exacerbate social isolation or help overcome it.

- A widely held hypothesis is that social ties link people with diffuse social networks that facilitate access to a wide range of resources supportive of health.

Key Terms

- social isolation: Social isolation refers to a complete or near-complete lack of contact with society. It is usually involuntary, making it distinct from isolating tendencies or actions taken by an individual who is seeking to distance themselves from society.

- emotional isolation: Emotional isolation describes a state in which an individual is emotionally isolated even though they may have a well-functioning social network.

- social network: The web of a person’s social, family, and business contacts, who provide material and social resources and opportunities.

Example

- Socially isolated individuals are more likely to experience negative health outcomes, such as failing to seek treatment for conditions before they become life-threatening. Individuals with vibrant social networks are more likely to encounter friends, family, or acquaintances who encourage them to visit the doctor to get a persistent cough checked out, whereas an isolated individual may allow the cough to progress until they experience serious difficulty breathing. Isolated individuals are also less likely to seek or get treatment for drug addiction or mental health problems, which they might be unable to recognize on their own. Older adults are especially prone to social isolation as their families and friends pass away.

Social isolation occurs when members of a social species (like humans) have a complete or near-complete lack of contact with society. Social isolation is usually involuntary rather than chosen. It is not the same as loneliness rooted in a temporary lack of contact with other humans, nor is it the same as isolating actions that an individual might consciously undertake. In a related phenomenon, emotional isolation, individuals are emotionally isolated even though they may have well-functioning social networks.

While loneliness is often fleeting, true social isolation often lasts for years or decades. It tends to be a chronic condition that affects all aspects of a person’s existence and can have serious consequences for health and well-being. Socially isolated people have no one to turn to in personal emergencies, no one to confide in during a crisis, and no one against whom to measure their own behavior or from whom to learn etiquette or socially acceptable behavior. Social isolation can be problematic at any age, although its effects differ across age groups; for example, social isolation may affect children differently than it affects adults.

Social isolation can be dangerous because the vitality of individuals’ social relationships affects their health. Social contacts influence individuals’ behavior by encouraging health-promoting behaviors, such as adequate sleep, diet, exercise, and compliance with medical regimens, and by discouraging health-damaging behaviors, such as smoking, excessive eating, alcohol consumption, or drug abuse. Socially isolated individuals lack these beneficial influences, as well as a social support network that can provide help and comfort in times of stress and distress. Social relationships can also connect people with diffuse social networks that facilitate access to a wide range of resources supportive of health, such as medical referral networks, access to others dealing with similar problems, or opportunities to acquire needed resources via jobs, shopping, or financial institutions. These effects differ from the direct support a friend provides; instead, they depend on the links that close social ties provide to more distant connections.

Sociologists debate whether new technologies, such as the Internet and mobile phones, exacerbate social isolation or could help overcome it. With the advent of online social networking communities, people have increasing options for engaging in social activities that do not require real-world physical interaction. Chat rooms, message boards, and other online communities now meet the social needs of people who would rather stay home alone yet still develop communities of online friends.

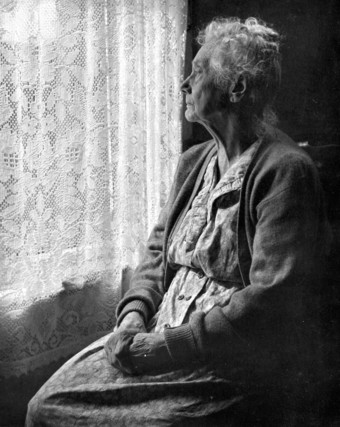

Social Isolation

Older adults are particularly susceptible to social isolation.

4.1.6: Feral Children

A feral child is a human child who has lived isolated from human contact from a very young age.

Learning Objective

Analyze the differences between the fictional and real-life depictions of feral children

Key Points

- Legendary and fictional feral children are often depicted as growing up with relatively normal human intelligence and skills and an innate sense of culture or civilization.

- In reality, feral children lack the basic social skills that are normally learned in the process of enculturation. They almost always have impaired language ability and mental function. These impairments highlight the role of socialization in human development.

- The impaired ability to learn language after having been isolated for so many years is often attributed to the existence of a critical period for language learning, and is taken as evidence in favor of the critical period hypothesis.

Key Terms

- critical period: A critical period refers to the window of time during which a human needs to experience a particular environmental stimulus in order for proper development to occur.

- enculturation: The process by which an individual adopts the behavior patterns of the culture in which he or she is immersed.

- feral child: A child who is raised without human contact as a result of being abandoned, allegedly often raised by wild animals.

Example

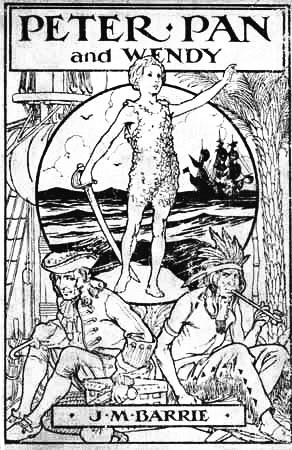

- Peter Pan is a well-known example of a fictional feral child who is raised without adult supervision or assistance. Whereas Peter Pan’s upbringing is glorified, all real cases involve some form of serious child abuse.

A feral child is a human child who has lived isolated from human contact from a very young age, and has little or no experience of human care, loving or social behavior, and, crucially, human language. Some feral children have been confined in isolation by other people, usually their own parents. In some cases, parents isolated or abandoned a child because they rejected the child’s severe intellectual or physical impairment. Feral children may have experienced severe child abuse or trauma before being abandoned or running away.

Depictions of Feral Children

Myths, legends, and fictional stories have depicted feral children reared by wild animals such as wolves and bears. Legendary and fictional feral children are often depicted as growing up with relatively normal human intelligence and skills and an innate sense of culture or civilization, coupled with a healthy dose of survival instincts. Their integration into human society is also made to seem relatively easy. These mythical children are often depicted as having superior strength, intelligence, and morals compared to “normal” humans. The implication is that because of their upbringing they represent humanity in a pure and uncorrupted state, similar to the noble savage.

Feral Children in Reality

In reality, feral children lack the basic social skills that are normally learned in the process of enculturation. For example, they may be unable to learn to use a toilet, have trouble learning to walk upright, and display a complete lack of interest in the human activity around them. They often seem mentally impaired and have almost insurmountable trouble learning human language. The impaired ability to learn language after having been isolated for so many years is often attributed to the existence of a critical period for language learning at an early age, and is taken as evidence in favor of the critical period hypothesis. It is theorized that if language is not developed, at least to a degree, during this critical period, a child can never reach his or her full language potential. The fact that feral children lack these abilities highlights the role of socialization in human development.

Examples of Feral Children

Famous examples of feral children include Ibn Tufail’s Hayy, Ibn al-Nafis’ Kamil, Rudyard Kipling’s Mowgli, Edgar Rice Burroughs’ Tarzan, J. M. Barrie’s Peter Pan, and the legends of Atalanta, Enkidu, and Romulus and Remus. Tragically, feral children are not just fictional. Several cases have been discovered in which caretakers brutally isolated their children and in doing so prevented normal development.

A real-life example of a feral child is Danielle Crockett, known as “The Girl in the Window.” The officer who found Danielle reported that it was “the worst case of child neglect he had seen in 27 years.” Danielle could not speak, respond to others, or eat solid food. Doctors and therapists diagnosed her with environmental autism, yet she was still adopted by Bernie and Diane Lierow. Today, Danielle lives in Tennessee with her parents and has made remarkable progress. She communicates through the Picture Exchange Communication System (PECS) and loves to swim and ride horses.

Peter Pan

Peter Pan is an example of a fictional feral child.

4.1.7: Institutionalized Children

Institutionalized children may develop institutional syndrome, which refers to deficits or disabilities in social and life skills.

Learning Objective

Discuss both the processes of institutionalization and deinstitutionalization, as they relate to issues juveniles may have

Key Points

- The term “institutionalization” can be used both in regard to the process of committing an individual to a mental hospital or prison, and to institutional syndrome.

- Juvenile wards are sections of psychiatric hospitals or psychiatric wards set aside for children and adolescents with mental illness.

- Deinstitutionalization is the process of replacing long-stay psychiatric hospitals with less isolated community mental health services for those diagnosed with a mental disorder.

Key Terms

- Institutional syndrome: In clinical and abnormal psychology, institutional syndrome refers to deficits or disabilities in social and life skills, which develop after a person has spent a long period living in mental hospitals, prisons, or other remote institutions.

- mental illness: Mental illness is a broad generic label for a category of illnesses that may include affective or emotional instability, behavioral dysregulation, and/or cognitive dysfunction or impairment.

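- deinstitutionalization: The process of abolishing a practice that has been considered a norm; here, the replacement of long-stay psychiatric hospitals with less isolated community mental health services.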

In clinical and abnormal psychology, institutional syndrome refers to deficits or disabilities in social and life skills, which develop after a person has spent a long period living in mental hospitals, prisons, or other remote institutions. In other words, individuals in institutions may be deprived of independence and of responsibility, to the point that once they return to “outside life” they are often unable to manage many of its demands. It has also been argued that institutionalized individuals become psychologically more prone to mental health problems.

The term institutionalization can refer both to the process of committing an individual to a mental hospital or prison and to institutional syndrome; thus a person being “institutionalized” may mean either that they have been placed in an institution or that they are suffering the psychological effects of having been in an institution for an extended period of time.

Juvenile wards are sections of psychiatric hospitals or psychiatric wards set aside for children and/or adolescents with mental illness. In addition, a number of institutions specialize solely in treating juveniles, particularly those dealing with drug abuse, self-harm, eating disorders, anxiety, depression, or other mental illnesses.

Psychiatric Wards

Many state hospitals have mental health branches, such as the Northern Michigan Asylum.

Deinstitutionalization is the process of replacing long-stay psychiatric hospitals with less isolated community mental health services for those diagnosed with a mental disorder or developmental disability. The term can be defined in more than one way. The first definition focuses on reducing the population size of mental institutions, which can be accomplished by releasing individuals from institutions, shortening the length of stays, and reducing both admissions and readmissions. The second definition refers to reforming mental hospitals’ institutional processes so as to reduce or eliminate reinforcement of dependency, hopelessness, learned helplessness, and other maladaptive behaviors.

4.2: The Self and Socialization

4.2.1: Dimensions of Human Development

The dimensions of human development are divided into separate, consecutive stages of life from birth to old age.

Learning Objective

Analyze the differences between the various stages of human life: prenatal, toddler, early and late childhood, adolescence, early and middle adulthood, and old age

Key Points

- The stages of human development are: prenatal development, toddler, early childhood, late childhood, adolescence, early adulthood, middle adulthood, and old age.

- Prenatal development is the process in which a human embryo gestates during pregnancy, from fertilization until birth. From birth until the first year, the child is referred to as an infant. Babies between the ages of 1 and 2 are called “toddlers.”

- In the phase of early childhood, children attend preschool, broaden their social horizons and become more engaged with those around them.

- In late childhood, intelligence is demonstrated through logical and systematic manipulation of symbols related to concrete objects.

- Adolescence is the period of life between the onset of puberty and the full commitment to an adult social role.

- In early adulthood, the person must learn how to form intimate relationships, both in friendship and love.

- Middle adulthood generally refers to the period between ages 40 and 60. During this period, middle-aged adults experience a conflict between generativity and stagnation.

- The final stage is old age, which refers to those over 60–80 years of age.

Key Terms

- diurnal

-

Happening or occurring during daylight, or primarily active during that time.

- Prenatal development

-

Prenatal development is the process in which a human embryo gestates during pregnancy, from fertilization until birth.

The dimensions of human development are divided into separate but consecutive stages of human life: prenatal development, toddlerhood, early childhood, late childhood, adolescence, early adulthood, middle adulthood, and old age.

Prenatal development is the process during which a human embryo gestates during pregnancy, from fertilization until birth. The terms prenatal development, fetal development, and embryology are often used interchangeably. The embryonic period in humans begins at fertilization. From birth until the first year, the child is referred to as an infant. Newborn infants spend most of their time asleep. At first, this sleep is spread evenly throughout the day and night, but after a couple of months, infants generally become diurnal.

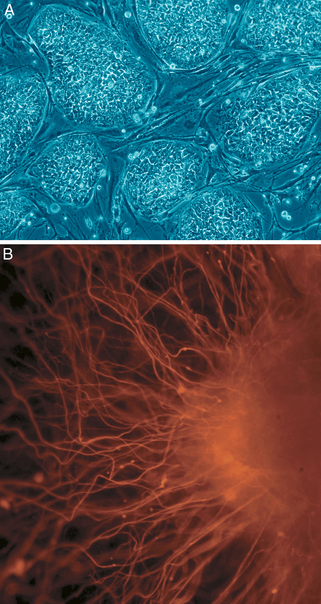

Human Embryogenesis

The first few weeks of embryogenesis in humans begin with the fertilization of the egg and end with the closing of the neural tube.

Children between the ages of 1 and 2 are called “toddlers.” In this stage, intelligence is demonstrated through the use of symbols, language use matures, and memory and imagination develop. In early childhood, children attend preschool, broaden their social horizons, and become more engaged with those around them. In late childhood, intelligence is demonstrated through logical and systematic manipulation of symbols related to concrete objects. Children make the transition from the world of home to the world of school and peers. If children find pleasure in intellectual stimulation, in being productive, and in seeking success, they develop a sense of competence.

Adolescence is the period of life between the onset of puberty and full commitment to an adult social role. In early adulthood, the person must learn how to form intimate relationships, both in friendship and in love. The development of this skill depends on the resolution of earlier stages: it may be hard to establish intimacy if one has not developed trust or a sense of identity. If this skill is not learned, the alternative is alienation, isolation, a fear of commitment, and the inability to depend on others.

Middle adulthood generally refers to the period between the ages of 40 and 60. During this period, middle-aged adults experience a conflict between generativity and stagnation: they may feel either a sense of contributing to the next generation and their community or a sense of purposelessness. The final stage is old age, which begins somewhere between the ages of 60 and 80. During old age, people frequently experience a conflict between integrity and despair.

4.2.2: Sociological Theories of the Self

Sociological theories of the self attempt to explain how social processes such as socialization influence the development of the self.

Learning Objective

Interpret Mead’s theory of self in terms of the differences between “I” and “me”

Key Points

- One of the most important sociological approaches to the self was developed by American sociologist George Herbert Mead. Mead conceptualizes the mind as the individual importation of the social process.

- This process is characterized by Mead as the “I” and the “me.” The “me” is the social self, and the “I” is the response to the “me.” The “I” is the individual’s impulses. The “I” is self as subject; the “me” is self as object.

- For Mead, existence in a community comes before individual consciousness. First one must participate in the different social positions within society and only subsequently can one use that experience to take the perspective of others and thus become self-conscious.

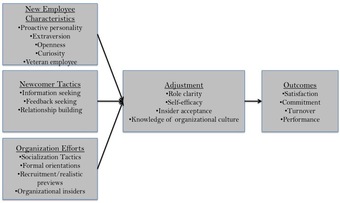

- Primary socialization occurs when a child learns the attitudes, values, and actions appropriate to individuals as members of a particular culture.

- Secondary socialization refers to the process of learning the appropriate behavior as a member of a smaller group within the larger society.

- Group socialization is the theory that an individual’s peer groups, rather than parental figures, influence his or her personality and behavior in adulthood.

- Organizational socialization is the process whereby an employee learns the knowledge and skills necessary to assume his or her organizational role.

- In the social sciences, institutions are the structures and mechanisms of social order and cooperation governing the behavior of a set of individuals within a given human collectivity. Institutions include the family, religion, peer group, economic systems, legal systems, penal systems, language and the media.

Key Terms

- community

-

A group sharing a common understanding and often the same language, manners, tradition, and law.

- socialization

-

The process of learning one’s culture and how to live within it.

- generalized other

-

the general notion that a person has regarding the common expectations of others within his or her social group

- The self

-

The self is the individual person, from his or her own perspective. Self-awareness is the capacity for introspection and the ability to recognize oneself as an individual separate from the environment and other individuals.

Example

- The processes of socialization are most easily seen in children. As they learn more about the world around them, they begin to reflect the social norms to which they are exposed. This is the quintessential example of socialization, though the same process applies to any newcomer to a given society.

Sociological theories of the self attempt to explain how social processes such as socialization influence the development of the self. One of the most important sociological approaches to the self was developed by American sociologist George Herbert Mead. Mead conceptualizes the mind as the individual importation of the social process, presenting both the self and the mind in terms of a social process. As the individual organism takes in gestures, it also takes in the collective attitudes of others, in the form of gestures, and responds accordingly with other organized attitudes.

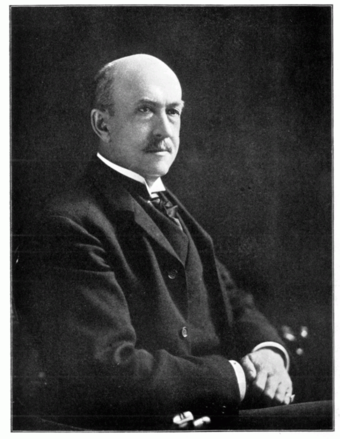

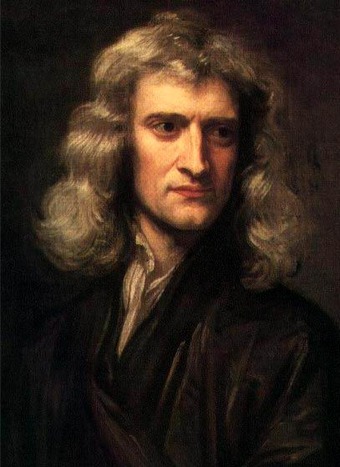

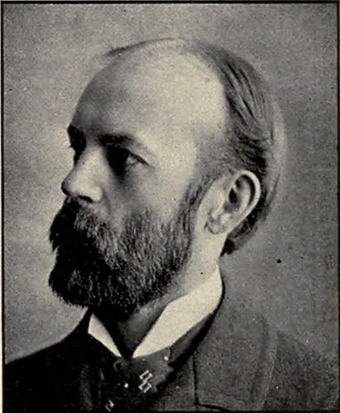

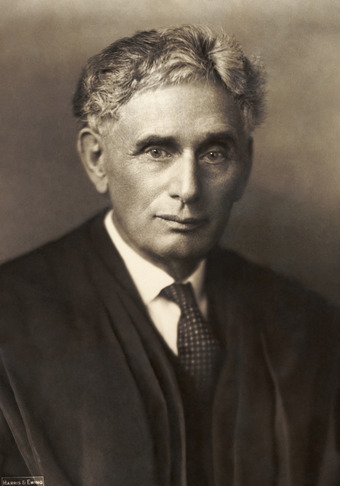

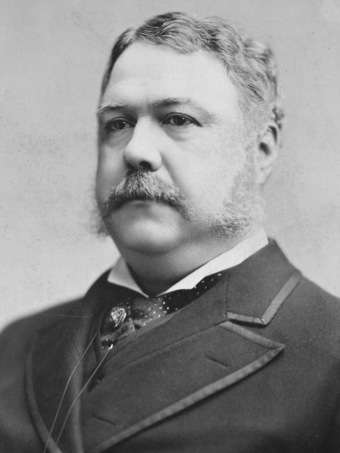

George Herbert Mead

George Herbert Mead (1863–1931) was an American philosopher, sociologist, and psychologist, primarily affiliated with the University of Chicago, where he was one of several distinguished pragmatists. He is regarded as one of the founders of social psychology and the American sociological tradition in general.

This process is characterized by Mead as the “I” and the “me.” The “me” is the social self, and the “I” is the response to the “me.” In other words, the “I” is the response of an individual to the attitudes of others, while the “me” is the organized set of attitudes of others that an individual assumes. The “me” is the accumulated understanding of the “generalized other,” i.e., how one thinks one’s group perceives oneself. The “I” is the individual’s impulses. The “I” is self as subject; the “me” is self as object. The “I” is the knower; the “me” is the known. The mind, or stream of thought, is the self-reflective movement of the interaction between the “I” and the “me.” These dynamics go beyond selfhood in a narrow sense and form the basis of a theory of human cognition. For Mead, the thinking process is the internalized dialogue between the “I” and the “me.”

Understood as a combination of the “I” and the “me,” Mead’s self is closely entwined with social existence. For Mead, existence in a community comes before individual consciousness. First, one must participate in the different social positions within society; only then can one use that experience to take the perspective of others and become self-conscious.

4.2.3: Psychological Approaches to the Self

The psychology of self is the study of either the cognitive or affective representation of one’s identity.

Learning Objective

Discuss the development of a person’s identity in relation to both Kohut’s and Jung’s concepts of the self

Key Points

- The earliest formulation of the self in modern psychology derived from the distinction between the self as I, the subjective knower, and the self as Me, the object that is known.

- Heinz Kohut, an American psychologist, theorized that the self was bipolar and composed of two systems of narcissistic perfection, one containing ambitions and the other containing ideals.

- In Jungian theory, derived from the psychologist C.G. Jung, the Self is one of several archetypes. It signifies the coherent whole, unifying both the conscious and unconscious mind of a person.

- Social constructivists claim that timely and sensitive intervention by adults, when a child is on the edge of learning a new task, can help the child learn it.

- Attachment theory focuses on open, intimate, emotionally meaningful relationships.

- The nativism versus empiricism debate focuses on the relationship between innateness and environmental influence in regard to any particular aspect of development.

- A nativist account of development would argue that the processes in question are innate, that is, they are specified by the organism’s genes. An empiricist perspective would argue that those processes are acquired in interaction with the environment.

Key Terms

- archetype

-

according to the Swiss psychologist Carl Jung, a universal pattern of thought, present in an individual’s unconscious, inherited from the past collective experience of humanity

- affective

-

relating to, resulting from, or influenced by the emotions

- cognitive

-

the part of mental functions that deals with logic, as opposed to affective functions which deal with emotions

Example

- Developmental psychology has become de rigueur in parenting, as changes in parenting tactics show. Since the publication of these studies, parents and caregivers have paid even more attention to affection and to encouraging hands-on play. Encouraging a child to complete an art project and hugging her upon its completion is an example of how these theories are implemented in everyday life.

Psychology of the Self

The psychology of the self is the study of the cognitive or affective representation of one’s identity. In modern psychology, the earliest formulation of the self derived from the distinction between the self as “I,” the subjective knower, and the self as “me,” the object that is known. Suppose, for instance, that an individual wants to think about their “self” as an object of analysis. They might ask themselves, “What kind of person am I?” In that moment, the person is still thinking from some perspective, which is also considered the “self.” Thus, the “self” is both what is doing the thinking and, at the same time, the object being thought about. It is from this dualism that the concept of the self initially emerged in modern psychology. Current psychological thought suggests that the self plays an integral part in human motivation, cognition, affect, and social identity.

The Kohut Self

Heinz Kohut, an American psychologist, theorized a bipolar self composed of two systems of narcissistic perfection, one containing ambitions and the other containing ideals. Kohut called the pole of ambitions the narcissistic self (later called the grandiose self). He called the pole of ideals the idealized parental imago. According to Kohut, the two poles of the self represented natural progressions in the psychic life of infants and toddlers.

The Jungian Self

In Jungian theory, derived from the psychologist C.G. Jung, the Self is one of several archetypes. It signifies the coherent whole, unifying both the conscious and unconscious mind of a person. The Self, according to Jung, is the end product of individuation, which he defined as the process of integrating one’s personality. For Jung, the Self could be symbolized by the circle (especially when divided into four quadrants), the square, or the mandala. He also believed that the Self could be symbolically personified in the archetypes of the Wise Old Woman and the Wise Old Man.

Carl Gustav Jung

According to Jung, the Self is one of several archetypes.

In contrast to earlier theorists, Jung believed that an individual’s personality had a center. While he considered the ego to be the center of an individual’s conscious identity, he considered the Self to be the center of an individual’s total personality, which included the ego, consciousness, and the unconscious mind. To Jung, the Self is both the whole and the center. While Jung perceived the ego to be a self-contained, off-centered, smaller circle contained within the whole, he believed that the Self was the greater circle. Jung also believed that the Self, besides being the center of the psyche, was autonomous, meaning that it exists outside of time and space. He also believed that the Self was the source of dreams and would appear in dreams as an authority figure that could either perceive the future or guide an individual’s present.

4.3: Theories of Socialization

4.3.1: Theories of Socialization

Socialization is the means by which human infants begin to acquire the skills necessary to perform as functioning members of their society.

Learning Objective

Discuss the different types and theories of socialization

Key Points

- Group socialization is the theory that an individual’s peer groups, rather than parental figures, influence his or her personality and behavior in adulthood.

- Gender socialization refers to the learning of behavior and attitudes considered appropriate for a given sex.

- Cultural socialization refers to parenting practices that teach children about their racial history or heritage and is sometimes referred to as “pride development.”

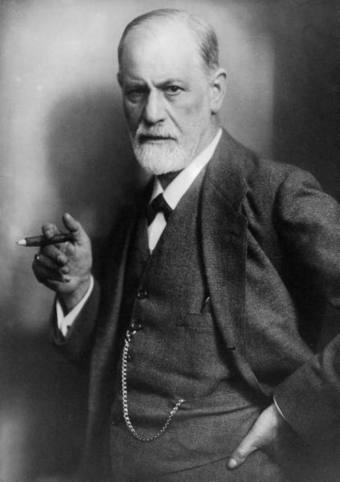

- Sigmund Freud proposed that the human psyche could be divided into three parts: Id, ego, and super-ego.

- Piaget’s theory of cognitive development is a comprehensive theory about the nature and development of human intelligence.

- Positive Adult Development is one of the four major forms of adult developmental study that can be identified. The other three forms are directionless change, stasis, and decline.

Key Term

- socialization

-

The process of learning one’s culture and how to live within it.

Example

- Primary socialization occurs when a child learns the attitudes, values, and actions appropriate to individuals as members of a particular culture. For example, if a child sees his or her mother expressing a discriminatory opinion about a minority group, the child may think this behavior is acceptable and may come to hold the same opinion about minority groups.

“Socialization” is a term used by sociologists, social psychologists, anthropologists, political scientists, and educationalists to refer to the lifelong process of inheriting and disseminating norms, customs, and ideologies, providing an individual with the skills and habits necessary for participating within his or her own society. Socialization is thus “the means by which social and cultural continuity are attained.”

Socialization is the means by which human infants begin to acquire the skills necessary to perform as functioning members of their society, and it is the most influential learning process one can experience. Unlike other living species, whose behavior is biologically set, humans need social experiences to learn their culture and to survive. Although cultural variability manifests in the actions, customs, and behaviors of whole social groups, the most fundamental expression of culture is found at the individual level. This expression can only occur after an individual has been socialized by his or her parents, family, extended family, and extended social networks.

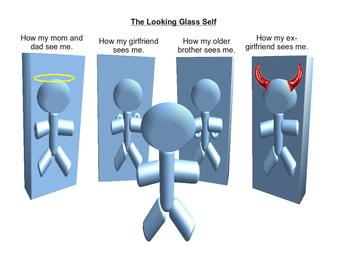

The looking-glass self is a social psychological concept, created by Charles Horton Cooley in 1902, stating that a person’s self grows out of society’s interpersonal interactions and the perceptions of others. The term refers to people shaping themselves based on others’ perceptions of them, which leads them to reinforce those perceptions: people shape themselves according to what others perceive and so confirm others’ opinions of them.

George Herbert Mead developed a theory of social behaviorism to explain how social experience develops an individual’s personality. Mead’s central concept is the self: the part of an individual’s personality composed of self-awareness and self-image. Mead claimed that the self is not present at birth; rather, it develops through social experience.

Sigmund Freud was an Austrian neurologist who founded the discipline of psychoanalysis, a clinical method for treating psychopathology through dialogue between a patient and a psychoanalyst. In his later work, Freud proposed that the human psyche could be divided into three parts: the id, the ego, and the super-ego. The id is the completely unconscious, impulsive, child-like portion of the psyche that operates on the “pleasure principle” and is the source of basic impulses and drives; it seeks immediate pleasure and gratification. The ego acts according to the reality principle (i.e., it seeks to satisfy the id’s drives in realistic ways that will be beneficial in the long term rather than bring grief). Finally, the super-ego aims for perfection. It comprises the organized part of the personality structure, mainly but not entirely unconscious, that includes the individual’s ego ideals, spiritual goals, and the psychic agency that criticizes and prohibits his or her drives, fantasies, feelings, and actions.

Different Forms of Socialization

Group socialization is the theory that an individual’s peer groups, rather than parental figures, influence his or her personality and behavior in adulthood. Adolescents spend more time with peers than with parents; according to this theory, peer groups therefore correlate more strongly with personality development than parental figures do. For example, identical twin brothers, whose genetic makeup is the same, may differ in personality because they have different groups of friends, not necessarily because their parents raised them differently.

Henslin (1999) contends that “an important part of socialization is the learning of culturally defined gender roles” (p. 76). Gender socialization refers to the learning of behavior and attitudes considered appropriate for a given sex. Boys learn to be boys, and girls learn to be girls. This “learning” happens by way of many different agents of socialization. The family is certainly important in reinforcing gender roles, but so are one’s friends, school, work, and the mass media. As Henslin (1999, p. 76) puts it, gender roles are reinforced through “countless subtle and not so subtle ways.”

Cultural socialization refers to parenting practices that teach children about their racial history or heritage and is sometimes referred to as “pride development.” Preparation for bias refers to parenting practices focused on preparing children to be aware of, and cope with, discrimination. Promotion of mistrust refers to the parenting practices of socializing children to be wary of people from other races. Egalitarianism refers to socializing children with the belief that all people are equal and should be treated with a common humanity.

4.3.2: Cooley

In 1902, Charles Horton Cooley created the concept of the looking-glass self, which explored how identity is formed.

Learning Objective

Discuss Cooley’s idea of the “looking-glass self” and how people use socialization to create a personal identity and develop empathy for others

Key Points

- The looking-glass self is a social psychological concept stating that a person’s self grows out of society’s interpersonal interactions and the perceptions of others.

- There are three components of the looking-glass self: We imagine how we appear to others, we imagine the judgment of that appearance, and we develop our self (identity) through the judgments of others.

- George Herbert Mead described the self as “taking the role of the other,” the premise on which the self is actualized. Through interaction with others, we begin to develop an identity about who we are, as well as empathy for others.

Key Terms

- George Herbert Mead

-

(1863–1931) An American philosopher, sociologist, and psychologist, primarily affiliated with the University of Chicago, where he was one of several distinguished pragmatists.

- Looking-Glass self

-

The looking-glass self is a social psychological concept, created by Charles Horton Cooley in 1902, stating that a person’s self grows out of society’s interpersonal interactions and the perceptions of others.

- Charles Horton Cooley

-

Charles Horton Cooley (August 17, 1864 – May 8, 1929) was an American sociologist and the son of Thomas M. Cooley. He studied and went on to teach economics and sociology at the University of Michigan, and he was a founding member and the eighth president of the American Sociological Association.

Example

- An example of the looking-glass self concept is computer technology. Using computer technology, people can create an avatar, a customized symbol that represents the computer user. For example, in the virtual world Second Life, the computer user can create a humanlike avatar that reflects the user in regard to race, age, physical makeup, status, and the like. By selecting certain physical characteristics or symbols, the avatar reflects how the creator seeks to be perceived in the virtual world and how the symbols used in the creation of the avatar influence others’ actions toward the computer user.

The looking-glass self is a social psychological concept created by Charles Horton Cooley in 1902. It states that a person’s self grows out of society’s interpersonal interactions and the perceptions of others. The term refers to people shaping their identity based on others’ perceptions of them, which leads people to reinforce those perceptions: people shape themselves according to what others perceive and so confirm others’ opinions of them.

There are three main components of the looking-glass self:

- First, we imagine how we must appear to others.

- Second, we imagine the judgment of that appearance.

- Finally, we develop our self through the judgments of others.

In hypothesizing the framework for the looking-glass self, Cooley said, “the mind is mental” because “the human mind is social.” In other words, the mind’s mental ability is a direct result of human social interaction. From childhood, humans define themselves within the context of their socialization. A child learns that crying, as a symbol, will elicit a response from his or her parents, not only when the child needs necessities such as food but also as a way to get their attention. George Herbert Mead described the self as “taking the role of the other,” the premise on which the self is actualized. Through interaction with others, we begin to develop an identity about who we are, as well as empathy for others.

An example of the looking-glass self concept is computer technology. Using computer technology, people can create an avatar, a customized symbol that represents the computer user. For example, in the virtual world Second Life, the computer user can create a human-like avatar that reflects the user in regard to race, age, physical makeup, status, and the like. By selecting certain physical characteristics or symbols, the avatar reflects how the creator seeks to be perceived in the virtual world and how the symbols used in the creation of the avatar influence others’ actions toward the computer user.

4.3.3: Mead

For Mead, the self arises out of the social act of communication, which is the basis for socialization.

Learning Objective

Discuss Mead’s theory of social psychology in terms of two concepts – pragmatism and social behaviorism

Key Points

- George Herbert Mead was an American philosopher, sociologist, and psychologist and one of several distinguished pragmatists.

- The two most important roots of Mead’s work are the philosophy of pragmatism and social behaviorism.

- Pragmatism is a wide-ranging philosophical position that states that people define the social and physical “objects” they encounter in the world according to their use for them.

- One of his most influential ideas, known as social behaviorism, was the emergence of mind and self from the communication process between organisms, discussed in the book Mind, Self and Society.

Key Terms

- symbolic interactionism

-

Symbolic interactionism is the study of the patterns of communication, interpretation, and adjustment between individuals.

- social behaviorism

-

Discussed in the book Mind, Self and Society, social behaviorism refers to the emergence of mind and self from the communication process between organisms.

- pragmatism

-

The theory that problems should be met with practical solutions rather than ideological ones; a concentration on facts rather than emotions or ideals.

Example

- In Pragmatism, nothing practical or useful is held to be necessarily true, nor is anything which helps to survive merely in the short term. For example, to believe my cheating spouse is faithful may help me feel better now, but it is certainly not useful from a more long-term perspective because it doesn’t accord with the facts (and is therefore not true).

George Herbert Mead was an American philosopher, sociologist, and psychologist, primarily affiliated with the University of Chicago, where he was one of several distinguished pragmatists. He is regarded as one of the founders of social psychology and the American sociological tradition in general.

The two most important roots of Mead’s work, and of symbolic interactionism in general, are the philosophy of pragmatism and social behaviorism. Pragmatism is a wide-ranging philosophical position from which several of Mead’s influences can be identified. It has four main tenets. First, for pragmatists, true reality does not exist “out there” in the real world; it “is actively created as we act in and toward the world.” Second, people remember and base their knowledge of the world on what has been useful to them, and they are likely to alter what no longer “works.” Third, people define the social and physical “objects” they encounter in the world according to their use for them. Lastly, if we want to understand actors, we must base that understanding on what people actually do. In pragmatism, nothing practical or useful is held to be necessarily true, nor is anything that helps one survive merely in the short term. For example, believing that my cheating spouse is faithful may help me feel better now, but it is certainly not useful from a longer-term perspective because it does not align with the facts (and is therefore not true).

Mead was a very important figure in twentieth-century social philosophy. One of his most influential ideas, known as social behaviorism, was the emergence of mind and self from the communication process between organisms, discussed in the book Mind, Self and Society. For Mead, mind arises out of the social act of communication. Mead’s concept of the social act is relevant not only to his theory of mind but also to all facets of his social philosophy. His theory of “mind, self, and society” is, in effect, a philosophy of the act from the standpoint of a social process involving the interaction of many individuals, just as his theory of knowledge and value is a philosophy of the act from the standpoint of the experiencing individual in interaction with an environment.

Mead is a major American philosopher by virtue of being, along with John Dewey, Charles Peirce, and William James, one of the founders of pragmatism. He also made significant contributions to the philosophies of nature, science, and history, to philosophical anthropology, and to process philosophy. Dewey and Alfred North Whitehead considered Mead a thinker of the first rank. He is a classic example of a social theorist whose work does not fit easily within conventional disciplinary boundaries.

George Herbert Mead

George Herbert Mead (1863–1931) was an American philosopher, sociologist, and psychologist, primarily affiliated with the University of Chicago, where he was one of several distinguished pragmatists. He is regarded as one of the founders of social psychology and the American sociological tradition in general.

4.3.4: Freud

According to Freud, human behavior, experience, and cognition are largely determined by unconscious drives and events in early childhood.

Learning Objective

Discuss Freud’s “id”, “ego” and “super-ego” and his six basic principles of psychoanalysis and how psychoanalysis is used today as a treatment for a variety of psychological disorders

Key Points

- Psychoanalysis is a clinical method for treating psychopathology through dialogue between a patient and a psychoanalyst.

- The specifics of the analyst’s interventions typically include confronting and clarifying the patient’s pathological defenses, wishes, and guilt.

- Freud named his new theory the Oedipus complex after the famous Greek tragedy Oedipus Rex by Sophocles. The Oedipus conflict was described as a state of psychosexual development and awareness.

- The id is the completely unconscious, impulsive, child-like portion of the psyche that operates on the “pleasure principle” and is the source of basic impulses and drives.

- The ego acts according to the reality principle (i.e., it seeks to satisfy the id’s drives in realistic ways that will be beneficial in the long term rather than bring grief).

- The super-ego aims for perfection. It comprises that organized part of the personality structure.

Key Terms

- Oedipus complex

-

In Freudian theory, the complex of emotions aroused in a child by an unconscious sexual desire for the parent of the opposite sex.

- the unconscious

-

For Freud, the unconscious refers to the mental processes of which individuals make themselves unaware.

Example

- The most common problems treatable with psychoanalysis include phobias, conversions, compulsions, obsessions, anxiety attacks, depressions, sexual dysfunctions, a wide variety of relationship problems (such as dating and marital strife), and a wide variety of character problems (painful shyness, meanness, obnoxiousness, workaholism, hyperseductiveness, hyperemotionality, hyperfastidiousness).

Sigmund Freud was an Austrian neurologist who founded the discipline of psychoanalysis. Interested in philosophy as a student, Freud later decided to become a neurological researcher in cerebral palsy, aphasia, and microscopic neuroanatomy. He went on to develop theories about the unconscious mind and the mechanism of repression, and he established the field of verbal psychotherapy by creating psychoanalysis, a clinical method for treating psychopathology through dialogue between a patient and a psychoanalyst. The most common problems treatable with psychoanalysis include phobias, conversions, compulsions, obsessions, anxiety attacks, depressions, sexual dysfunctions, a wide variety of relationship problems (such as dating and marital strife), and a wide variety of character problems (painful shyness, meanness, obnoxiousness, workaholism, hyperseductiveness, hyperemotionality, hyperfastidiousness).

The Basic Tenets of Psychoanalysis

The basic tenets of psychoanalysis include the following:

- Human behavior, experience, and cognition are largely determined by irrational drives.

- Those drives are largely unconscious.

- Attempts to bring those drives into awareness meet psychological resistance in the form of defense mechanisms.

- Besides the inherited constitution of personality, one’s development is determined by events in early childhood.

- Conflicts between a conscious view of reality and unconscious (repressed) material can result in mental disturbances such as neurosis, neurotic traits, anxiety, and depression.

- Liberation from the effects of unconscious material is achieved by bringing this material into consciousness.

Psychoanalysis as Treatment

Freudian psychoanalysis refers to a specific type of treatment in which the “analysand” (the analytic patient) verbalizes thoughts, including free associations, fantasies, and dreams, from which the analyst infers the unconscious conflicts causing the patient’s symptoms and character problems and interprets them for the patient to create insight for resolving those problems. The analyst’s interventions typically include confronting and clarifying the patient’s pathological defenses, wishes, and guilt. Through the analysis of conflicts, including those contributing to resistance and those involving the transference of distorted reactions onto the analyst, psychoanalytic treatment can hypothesize how patients are unconsciously their own worst enemies: how unconscious, symbolic reactions stimulated by experience are causing symptoms.

The Id, the Ego, and the Super-Ego

Freud hoped to prove that his model was universally valid and thus turned to ancient mythology and contemporary ethnography for comparative material. He named his new theory the Oedipus complex after Sophocles’s famous Greek tragedy Oedipus Rex. The Oedipus conflict was described as a state of psychosexual development and awareness. In his later work, Freud proposed that the human psyche could be divided into three parts: the id, the ego, and the super-ego. The id is the completely unconscious, impulsive, child-like portion of the psyche that operates on the “pleasure principle” and is the source of basic impulses and drives; it seeks immediate pleasure and gratification. The ego acts according to the reality principle (i.e., it seeks to satisfy the id’s drives in realistic ways that bring long-term benefit rather than grief). Finally, the super-ego aims for perfection. It comprises the organized part of the personality structure, mainly but not entirely unconscious, that includes the individual’s ego ideals, spiritual goals, and the psychic agency that criticizes and prohibits his or her drives, fantasies, feelings, and actions.

4.3.5: Piaget

Piaget’s theory of cognitive development is a comprehensive theory about the nature and development of human intelligence.

Learning Objective

Analyze the differences between accommodation and assimilation in relation to Piaget’s stages

Key Points

- Jean Piaget was a French-speaking Swiss developmental psychologist and philosopher known for his epistemological studies with children. His theory of cognitive development and epistemological view are together called “genetic epistemology,” the study of the origins of knowledge.

- Piaget argued that reality is a dynamic system involving both transformations and states. Transformations refer to all manners of changes that a thing or person can experience, while states refer to the conditions or the appearances in which things or persons can be found between transformations.

- Piaget identified four stages of cognitive development: sensorimotor, pre-operational, concrete operational, and formal operational. Through these stages, children progress in their thinking and logical processes.

- Piaget’s theory of cognitive development is a comprehensive theory about the nature and development of human intelligence that explains how individuals perceive and adapt to new information through the processes of assimilation and accommodation.

- Assimilation is the process of taking one’s environment and new information and fitting it into pre-existing cognitive schemas. Accommodation is the process of taking one’s environment and new information, and altering one’s pre-existing schemas in order to fit in the new information.

- Object permanence is the understanding that objects continue to exist even when they cannot be seen, heard, or touched.

- The concrete operational stage is the third of four stages of cognitive development in Piaget’s theory.

- The final stage is known as the formal operational stage (adolescence and into adulthood), in which intelligence is demonstrated through the logical use of symbols related to abstract concepts.

Key Terms

- accommodation

-

Accommodation, unlike assimilation, is the process of taking one’s environment and new information, and altering one’s pre-existing schemas in order to fit in the new information.

- object permanence

-

The understanding (typically developed during early infancy) that an object still exists even when it disappears from sight or from the reach of the other senses.

- genetic epistemology

-

Genetic epistemology is the study of the origins of knowledge. The discipline was established by Jean Piaget.

Jean Piaget was a French-speaking Swiss developmental psychologist and philosopher known for his epistemological studies with children. His theory of cognitive development and epistemological view are together called “genetic epistemology.” He believed the epistemological questions of his time could be better addressed by looking at their genetic components. This led to his experiments with children and adolescents, in which he explored the thinking and logic processes used by children of different ages.

Piaget’s theory of cognitive development is a comprehensive theory about the nature and development of human intelligence. Piaget believed that reality is a dynamic system of continuous change and, as such, is defined in reference to the two conditions that define dynamic systems. Specifically, he argued that reality involves transformations and states. Transformations refer to all manners of changes that a thing or person can undergo. States refer to the conditions or the appearances in which things or persons can be found between transformations.

Piaget explains the growth of characteristics and types of thinking as the result of four stages of development. The stages are as follows:

- The sensorimotor stage is the first of the four stages of cognitive development and “extends from birth to the acquisition of language.” In this stage, infants construct an understanding of the world by coordinating experiences with physical actions; in other words, infants gain knowledge of the world from the physical actions they perform. The development of object permanence is one of the most important accomplishments of this stage.

- The pre-operational stage is the second stage of cognitive development. It begins around the end of the second year. During this stage, the child learns to use and to represent objects by images, words, and drawings. The child becomes able to form stable concepts, engage in mental reasoning, and hold magical beliefs.

- The third stage is called the “concrete operational stage” and occurs approximately between the ages of 7 and 11 years. In this stage, children begin to use logic appropriately: they can make rational judgments about concrete phenomena and systematically manipulate symbols related to concrete objects.

- The final stage is known as the “formal operational stage” (adolescence and into adulthood). Intelligence is demonstrated through the logical use of symbols related to abstract concepts. At this point, the person is capable of hypothetical and deductive reasoning.

When studying the field of education, Piaget identified two processes: accommodation and assimilation. Assimilation describes how humans perceive and adapt to new information. It is the process of taking one’s environment and new information and fitting it into pre-existing cognitive schemas. Accommodation, unlike assimilation, is the process of taking one’s environment and new information and altering one’s pre-existing schemas in order to fit in the new information.

Jean Piaget

Jean Piaget was a French-speaking Swiss developmental psychologist and philosopher known for his epistemological studies with children.

4.3.6: Levinson

Daniel J. Levinson was one of the founders of the field of positive adult development.

Learning Objective

Summarize Daniel Levinson’s theory of positive adult development and how it influenced changes in the perception of development during adulthood

Key Points

- As a theory, positive adult development asserts that development continues after adolescence, long into adulthood.

- In positive adult development research, scientists question not only whether development ceases after adolescence, but also the notion, popularized by many gerontologists, that a decline occurs after late adolescence.

- Positive adult developmental processes are divided into at least six areas of study: hierarchical complexity, knowledge, experience, expertise, wisdom, and spirituality.

Key Terms

- positive adult development

-

Positive adult development is one of the four major forms of adult developmental study.

- stasis

-

Inactivity; a freezing or state of motionlessness.

- decline

-

Downward movement; a fall.

Daniel Levinson

Daniel J. Levinson, an American psychologist, was one of the founders of the field of positive adult development. He was born in New York City on May 28, 1920, and completed his dissertation at the University of California, Berkeley, in 1947. In this dissertation, he attempted to develop a way of measuring ethnocentrism. In 1950, he moved to Harvard University. From 1966 to 1990, he was a professor of psychology at the Yale University School of Medicine.

Levinson’s two most important books were The Seasons of a Man’s Life and The Seasons of a Woman’s Life, which remain highly influential. His multidisciplinary approach is reflected in his work on the life structure theory of adult development.

Positive Adult Development

Positive adult development is one of the four major forms of adult developmental study. The other three are directionless change, stasis, and decline. Positive adult developmental processes are divided into the following six areas of study:

- hierarchical complexity

- knowledge

- experience

- expertise

- wisdom

- spirituality

Research in this field questions not only whether development ceases after adolescence, but also the notion, popularized by many gerontologists, that a decline occurs after late adolescence. Research shows that positive development does still occur during adulthood. Recent studies indicate that such development is useful in predicting outcomes such as an individual’s health, life satisfaction, and ability to contribute to society.

Now that there is scientific evidence that individuals continue to develop as adults, researchers have begun investigating how to foster such development. Rather than simply describing adult development as a phenomenon, researchers are interested in aiding and guiding it. This has long been a priority for educators of adults in formal settings. More recently, researchers have begun to experiment with hypotheses about fostering positive adult development. These methods are used in organizational and educational settings. Some use developmentally designed, structured public discourse to address complex public issues.

Positive Adult Development

Research in Positive Adult Development questions not only whether development ceases after adolescence, but also the notion, popularized by many gerontologists, that a decline occurs after late adolescence.

4.4: Learning Personality, Morality, and Emotions

4.4.1: Sociology of Emotion

The sociology of emotions applies sociological theorems and techniques to the study of human emotions.

Learning Objective

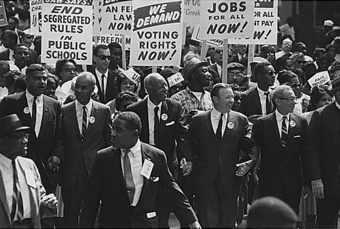

Examine the origins of the sociology of emotions through the work of Marx, Weber, and Simmel, and its development by T. David Kemper, Arlie Hochschild, Randall Collins, and David R. Heise

Key Points

- Emotions impact society on both the micro level (everyday social interactions) and the macro level (social institutions, discourses, and ideologies).

- Ethnomethodology revealed emotional commitments to everyday norms through purposeful breaching of the norms.

- We try to regulate our emotions to fit in with the norms of the situation, based on many, sometimes conflicting, demands upon us.

Key Terms

- ethnomethodology

-

An academic discipline that attempts to understand the social orders people use to make sense of the world through analyzing their accounts and descriptions of their day-to-day experiences.

- The sociology of emotions

-

The sociology of emotion applies sociological theorems and techniques to the study of human emotions.

Examples

- An example of individuals’ emotions impacting social interactions and institutions is how a board of directors can fail to be productive if its members are angry with one another.

- An example of the operations of a guilt society is the American expectation that a husband will feel guilty if he forgets his wife’s birthday.

- Following church doctrine, many members of the Catholic Church believe that people should feel shame for masturbating. This expectation is the product of a shame society.