7.1: Populations and Samples

7.1.1: Populations

In statistics, a population includes all members of a defined group that we are studying for data-driven decisions.

Learning Objective

Give examples of statistical populations and sub-populations

Key Points

- It is often impractical to study an entire population, so we often study a sample from that population to infer information about the larger population as a whole.

- Sometimes a government wishes to try to gain information about all the people living within an area with regard to gender, race, income, and religion. This type of information gathering over a whole population is called a census.

- A subset of a population is called a sub-population.

Key Terms

- heterogeneous: diverse in kind or nature; composed of diverse parts

- sample: a subset of a population selected for measurement, observation, or questioning to provide statistical information about the population

Populations

When we hear the word population, we typically think of all the people living in a town, state, or country. This is one type of population. In statistics, the word takes on a slightly different meaning.

A statistical population is a set of entities from which statistical inferences are to be drawn, often based on a random sample taken from the population. For example, if we are interested in making generalizations about all crows, then the statistical population is the set of all crows that exist now, ever existed, or will exist in the future. Since in this case and many others it is impossible to observe the entire statistical population, due to time constraints, constraints of geographical accessibility, and constraints on the researcher’s resources, a researcher would instead observe a statistical sample from the population in order to attempt to learn something about the population as a whole.

Sometimes a government wishes to try to gain information about all the people living within an area with regard to gender, race, income, and religion. This type of information gathering over a whole population is called a census.

Census

This is the logo for the Bureau of the Census in the United States.

Sub-Populations

A subset of a population is called a sub-population. If different sub-populations have different properties, so that the overall population is heterogeneous, the properties and responses of the overall population can often be better understood if the population is first separated into distinct sub-populations. For instance, a particular medicine may have different effects on different sub-populations, and these effects may be obscured or dismissed if such special sub-populations are not identified and examined in isolation.

Similarly, one can often estimate parameters more accurately if one separates out sub-populations. For example, the distribution of heights among people is better modeled by considering men and women as separate sub-populations.
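The height example can be illustrated with a small simulation. This is only a sketch: the means and standard deviations below are made-up illustrative values, not measured data, and the names `men`, `women`, and `pooled` are introduced here for the example. It shows that the spread of the pooled population is larger than the spread within either sub-population, which is why modeling the two groups separately can give sharper estimates.

```python
import random
import statistics

rng = random.Random(0)  # fixed seed so the sketch is reproducible

# Hypothetical heights in cm; the means and spreads are illustrative assumptions.
men = [rng.gauss(176, 7) for _ in range(5000)]
women = [rng.gauss(163, 6) for _ in range(5000)]
pooled = men + women

# Population standard deviation of each group and of the combined group.
pooled_sd = statistics.pstdev(pooled)
men_sd = statistics.pstdev(men)
women_sd = statistics.pstdev(women)

print(f"pooled SD: {pooled_sd:.1f} cm")
print(f"men SD: {men_sd:.1f} cm, women SD: {women_sd:.1f} cm")
```

The pooled spread mixes variation within each group with the difference between the group means, so it overstates how much individuals of either sex vary among themselves.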

7.1.2: Samples

A sample is a set of data collected and/or selected from a population by a defined procedure.

Learning Objective

Differentiate between a sample and a population

Key Points

- A complete sample is a set of objects from a parent population that includes all such objects that satisfy a set of well-defined selection criteria.

- An unbiased (representative) sample is a set of objects chosen from a complete sample using a selection process that does not depend on the properties of the objects.

- A random sample is defined as a sample where each individual member of the population has a known, non-zero chance of being selected as part of the sample.

Key Terms

- census: an official count of members of a population (not necessarily human), usually residents or citizens in a particular region, often done at regular intervals

- population: a group of units (persons, objects, or other items) enumerated in a census or from which a sample is drawn

- unbiased: impartial or without prejudice

What is a Sample?

In statistics and quantitative research methodology, a data sample is a set of data collected and/or selected from a population by a defined procedure.

Typically, the population is very large, making a census or a complete enumeration of all the values in the population impractical or impossible. The sample represents a subset of manageable size. Samples are collected and statistics are calculated from the samples so that one can make inferences or extrapolations from the sample to the population. This process of collecting information from a sample is referred to as sampling.

Types of Samples

A complete sample is a set of objects from a parent population that includes all such objects that satisfy a set of well-defined selection criteria. For example, a complete sample of Australian men taller than 2 meters would consist of a list of every Australian male taller than 2 meters. It wouldn’t include German males, or tall Australian females, or people shorter than 2 meters. To compile such a complete sample requires a complete list of the parent population, including data on height, gender, and nationality for each member of that parent population. In the case of human populations, such a complete list is unlikely to exist, but such complete samples are often available in other disciplines, such as complete magnitude-limited samples of astronomical objects.

An unbiased (representative) sample is a set of objects chosen from a complete sample using a selection process that does not depend on the properties of the objects. For example, an unbiased sample of Australian men taller than 2 meters might consist of a randomly sampled subset of 1% of Australian males taller than 2 meters. However, one chosen from the electoral register might not be unbiased since, for example, males aged under 18 will not be on the electoral register. In an astronomical context, an unbiased sample might consist of that fraction of a complete sample for which data are available, provided the data availability is not biased by individual source properties.

The best way to avoid a biased or unrepresentative sample is to select a random sample, also known as a probability sample. A random sample is defined as a sample wherein each individual member of the population has a known, non-zero chance of being selected as part of the sample. Several types of random samples are simple random samples, systematic samples, stratified random samples, and cluster random samples.

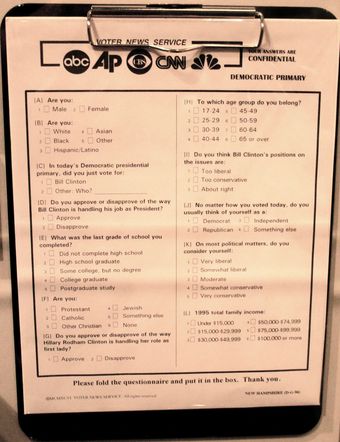

Samples

Online and phone-in polls produce biased samples because the respondents are self-selected. In self-selection bias, those individuals who are highly motivated to respond (typically individuals who have strong opinions) are over-represented, and individuals who are indifferent or apathetic are less likely to respond.

A sample that is not random is called a non-random sample, or a non-probability sample. Some examples of non-random samples are convenience samples, judgment samples, and quota samples.

7.1.3: Random Sampling

A random sample, also called a probability sample, is one in which each individual in the population has a known, non-zero probability of being chosen for the sample.

Learning Objective

Categorize a random sample as a simple random sample, a stratified random sample, a cluster sample, or a systematic sample

Key Points

- A simple random sample (SRS) of size n consists of n individuals from the population chosen in such a way that every set of n individuals has an equal chance of being in the selected sample.

- Stratified sampling occurs when a population embraces a number of distinct categories and is divided into sub-populations, or strata. At this stage, a simple random sample would be chosen from each stratum and combined to form the full sample.

- Cluster sampling divides the population into groups, or clusters. Some of these clusters are randomly selected. Then, all the individuals in the chosen cluster are selected to be in the sample.

- Systematic sampling relies on arranging the target population according to some ordering scheme and then selecting elements at regular intervals through that ordered list.

Key Terms

- stratum: a category composed of people with certain similarities, such as gender, race, religion, or even grade level

- population: a group of units (persons, objects, or other items) enumerated in a census or from which a sample is drawn

- cluster: a significant subset within a population

Simple Random Sample (SRS)

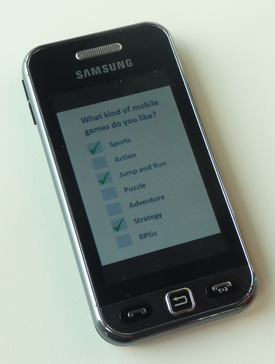

There are a variety of ways in which one could choose a sample from a population. A simple random sample (SRS) is one of the most typical ways. It is the most basic kind of probability sample: a simple random sample of size n consists of n individuals from the population chosen in such a way that every set of n individuals has an equal chance of being in the selected sample. An example of an SRS would be drawing names from a hat. An online poll in which a person is asked to give their opinion about something is not random because only those people with strong opinions, either positive or negative, are likely to respond. This type of poll doesn’t reflect the opinions of the apathetic.

Online Opinion Polls

Online and phone-in polls also produce biased samples because the respondents are self-selected. In self-selection bias, those individuals who are highly motivated to respond (typically individuals who have strong opinions) are over-represented, and individuals who are indifferent or apathetic are less likely to respond.

Simple random samples are not perfect and should not always be used. They can be vulnerable to sampling error because the randomness of the selection may result in a sample that doesn’t reflect the makeup of the population. For instance, a simple random sample of ten people from a given country will on average produce five men and five women, but any given trial is likely to over-represent one sex and under-represent the other. Systematic and stratified techniques, discussed below, attempt to overcome this problem by using information about the population to choose a more representative sample.
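The ten-person example can be checked with a short simulation. The sketch below assumes a hypothetical population of 500 men and 500 women and uses Python's standard `random.sample`, which draws without replacement so that every set of n units is equally likely. The helper name `simple_random_sample` is introduced here for illustration.

```python
import random
from collections import Counter

rng = random.Random(1)  # fixed seed for reproducibility

# Hypothetical population: 500 men and 500 women.
population = ["M"] * 500 + ["F"] * 500

def simple_random_sample(units, n, rng):
    """Draw n units without replacement; every set of n units is equally likely."""
    return rng.sample(units, n)

# Repeat the draw many times and record how many men each sample contains.
trials = 10_000
counts = Counter(
    simple_random_sample(population, 10, rng).count("M") for _ in range(trials)
)
exactly_even = counts[5] / trials
print(f"share of samples with exactly 5 men: {exactly_even:.2f}")
```

Under these assumptions only about a quarter of the samples split exactly five and five, so most individual samples over-represent one sex even though the average across samples is balanced.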

In addition, SRS may also be cumbersome and tedious when sampling from an unusually large target population. In some cases, investigators are interested in research questions specific to subgroups of the population. For example, researchers might be interested in examining whether cognitive ability as a predictor of job performance is equally applicable across racial groups. SRS cannot accommodate the needs of researchers in this situation because it does not provide sub-samples of the population. Stratified sampling, which is discussed below, addresses this weakness of SRS.

Stratified Random Sample

When a population embraces a number of distinct categories, it can be beneficial to divide the population in sub-populations called strata. These strata must be in some way important to the response the researcher is studying. At this stage, a simple random sample would be chosen from each stratum and combined to form the full sample.

For example, let’s say we want to sample the students of a high school to see what type of music they like to listen to, and we want the sample to be representative of all grade levels. It would make sense to divide the students into their distinct grade levels and then choose an SRS from each grade level. Each sample would be combined to form the full sample.
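The high school example might be sketched as follows. The roster, the grade sizes, and the 10% sampling fraction are all assumptions made for illustration, and `stratified_sample` is a hypothetical helper, not a standard library function.

```python
import random

rng = random.Random(2)  # fixed seed for reproducibility

# Hypothetical school roster of (student_id, grade) pairs; grade sizes are made up.
roster = [(f"s{g}-{i}", g)
          for g, size in [(9, 300), (10, 280), (11, 260), (12, 240)]
          for i in range(size)]

def stratified_sample(units, stratum_of, fraction, rng):
    """Take a simple random sample of the same fraction from every stratum."""
    strata = {}
    for u in units:
        strata.setdefault(stratum_of(u), []).append(u)
    sample = []
    for members in strata.values():
        k = round(len(members) * fraction)   # sample size for this stratum
        sample.extend(rng.sample(members, k))
    return sample

sample = stratified_sample(roster, lambda u: u[1], 0.10, rng)
per_grade = {g: sum(1 for _, grade in sample if grade == g) for g in (9, 10, 11, 12)}
print(per_grade)
```

Because each grade is sampled separately, every grade level is guaranteed to appear in the combined sample in proportion to its size, which a single simple random sample of the whole school would not guarantee.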

Cluster Sample

Cluster sampling divides the population into groups, or clusters. Some of these clusters are randomly selected. Then, all the individuals in the chosen cluster are selected to be in the sample. This process is often used because it can be cheaper and more time-efficient.

For example, while surveying households within a city, we might choose to select 100 city blocks and then interview every household within the selected blocks, rather than interview random households spread out over the entire city.
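A minimal sketch of the city-block example follows. The city layout (1,000 blocks with 5 to 30 households each) is invented for illustration, and `cluster_sample` is a hypothetical helper name.

```python
import random

rng = random.Random(3)  # fixed seed for reproducibility

# Hypothetical city: 1,000 blocks, each with 5 to 30 households (made-up sizes).
blocks = {b: [f"block{b}-house{h}" for h in range(rng.randint(5, 30))]
          for b in range(1000)}

def cluster_sample(clusters, n_clusters, rng):
    """Randomly choose whole clusters, then keep every unit inside them."""
    chosen = rng.sample(sorted(clusters), n_clusters)
    return chosen, [unit for c in chosen for unit in clusters[c]]

chosen_blocks, households = cluster_sample(blocks, 100, rng)
print(len(chosen_blocks), "blocks,", len(households), "households to interview")
```

The randomness here operates on blocks rather than on households, so interviewers visit only 100 locations; the total number of households interviewed depends on which blocks happen to be drawn.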

Systematic Sample

Systematic sampling relies on arranging the target population according to some ordering scheme and then selecting elements at regular intervals through that ordered list. Systematic sampling involves a random start and then proceeds with the selection of every kth element from then onward. In this case, k = (population size) / (sample size). It is important that the starting point is not automatically the first in the list, but is instead randomly chosen from within the first to the kth element in the list. A simple example would be to select every 10th name from the telephone directory (an ‘every 10th’ sample, also referred to as ‘sampling with a skip of 10’).
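The telephone directory example might look like the sketch below. The directory of 1,000 names is hypothetical, `systematic_sample` is a helper name introduced here, and the sampling interval is taken as the population size divided by the sample size, assuming the division is exact.

```python
import random

def systematic_sample(ordered_units, n, rng):
    """Pick a random start within the first k units, then take every k-th unit."""
    k = len(ordered_units) // n          # sampling interval (the "skip")
    start = rng.randrange(k)             # random start among positions 0..k-1
    return ordered_units[start::k][:n]

rng = random.Random(4)  # fixed seed for reproducibility
directory = [f"name{i:04d}" for i in range(1000)]  # hypothetical ordered list
sample = systematic_sample(directory, 100, rng)
print(sample[:3])
```

With 1,000 names and a sample of 100, the skip is 10, and the only random choice is which of the first ten names starts the sequence.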

7.1.4: Random Assignment of Subjects

Random assignment helps eliminate the differences between the experimental group and the control group.

Learning Objective

Discover the importance of random assignment of subjects in experiments

Key Points

- Researchers randomly assign participants in a study to either the experimental group or the control group. Dividing the participants randomly reduces group differences, thereby reducing the possibility that confounding factors will influence the results.

- By randomly assigning subjects to groups, researchers are able to feel confident that the groups are the same in terms of all variables except the one which they are manipulating.

- A randomly assigned group may statistically differ from the mean of the overall population, but this is rare.

- Random assignment became commonplace in experiments in the late 1800s due to the influence of researcher Charles S. Peirce.

Key Terms

- null hypothesis: a hypothesis set up to be refuted in order to support an alternative hypothesis; presumed true until statistical evidence in the form of a hypothesis test indicates otherwise

- control: a separate group or subject in an experiment against which the results are compared, where the primary variable is low or nonexistent

Importance of Random Assignment

When designing controlled experiments, such as testing the effects of a new drug, statisticians often employ an experimental design, which by definition involves random assignment. Random assignment, or random placement, assigns subjects to treatment and control (no treatment) group(s) on the basis of chance rather than any selection criteria. The aim is to produce experimental groups with no statistically significant differences in their characteristics prior to the experiment, so that any differences between groups observed after experimental activities have been completed can be attributed to the treatment effect rather than to other, pre-existing differences among individuals between the groups.

Control Group

Take identical growing plants, randomly assign them to two groups, and give fertilizer to one of the groups. If there are differences between the fertilized plant group and the unfertilized “control” group, these differences may be due to the fertilizer.

In experimental design, random assignment of participants to treatment and control groups helps to ensure that any differences between or within the groups are not systematic at the outset of the experiment. Random assignment does not guarantee that the groups are “matched” or equivalent; only that any differences are due to chance.

Random assignment is the desired assignment method because it provides control for all attributes of the members of the samples (in contrast to matching, which controls for only one or a few variables) and provides the mathematical basis for estimating the likelihood of group equivalence for characteristics of interest, both for pretreatment checks on equivalence and for the evaluation of post-treatment results using inferential statistics.

Random Assignment Example

Consider an experiment with one treatment group and one control group. Suppose the experimenter has recruited a population of 50 people for the experiment—25 with blue eyes and 25 with brown eyes. If the experimenter were to assign all of the blue-eyed people to the treatment group and the brown-eyed people to the control group, the results may turn out to be biased. When analyzing the results, one might question whether an observed effect was due to the application of the experimental condition or was in fact due to eye color.

With random assignment, one would randomly assign individuals to either the treatment or control group, and therefore have a better chance of detecting whether an observed change was due to chance or due to the experimental treatment itself.

If a randomly assigned group is compared to the mean, it may be discovered that they differ statistically, even though they were assigned from the same group. To express this same idea statistically: if a test of statistical significance is applied to randomly assigned groups to test the difference between sample means against the null hypothesis that they are equal to the same population mean (i.e., population mean of differences = 0), then, given the probability distribution, the null hypothesis will sometimes be “rejected,” that is, deemed implausible. In other words, the groups would be sufficiently different on the variable tested to conclude statistically that they did not come from the same population, even though they were assigned from the same total group. In the example above, random assignment could produce a group that contains 20 blue-eyed people and 5 brown-eyed people. This is a rare event under random assignment, but it could happen, and when it does, it might cast some doubt on the causal agent in the experimental hypothesis.
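How rare is the 20-and-5 split in the eye-color example? The sketch below performs one random assignment of the 50 recruits and then computes the exact chance of such a lopsided split. It assumes two groups of exactly 25, in which case the number of blue-eyed people in the treatment group follows a hypergeometric distribution. The helper name `randomly_assign` is introduced here for illustration.

```python
import random
from math import comb

def randomly_assign(subjects, rng):
    """Shuffle the subjects and split them into two equal-sized groups."""
    shuffled = subjects[:]
    rng.shuffle(shuffled)
    half = len(shuffled) // 2
    return shuffled[:half], shuffled[half:]

rng = random.Random(5)  # fixed seed for reproducibility
subjects = ["blue"] * 25 + ["brown"] * 25
treatment, control = randomly_assign(subjects, rng)
print("blue-eyed in treatment:", treatment.count("blue"))

# Exact chance that the treatment group of 25 receives 20 or more
# blue-eyed people (hypergeometric distribution).
p_extreme = sum(comb(25, b) * comb(25, 25 - b) for b in range(20, 26)) / comb(50, 25)
print(f"P(at least 20 blue-eyed in treatment) = {p_extreme:.2e}")
```

Under these assumptions the probability is a few chances in 100,000, which matches the description of the split as rare but possible.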

History of Random Assignment

Randomization was emphasized in the theory of statistical inference of Charles S. Peirce in “Illustrations of the Logic of Science” (1877–1878) and “A Theory of Probable Inference” (1883). Peirce applied randomization in the Peirce-Jastrow experiment on weight perception. Peirce randomly assigned volunteers to a blinded, repeated-measures design to evaluate their ability to discriminate weights. His experiment inspired other researchers in psychology and education, and led to a research tradition of randomized experiments in laboratories and specialized textbooks in the nineteenth century.

7.1.5: Surveys or Experiments?

Surveys and experiments are both statistical techniques used to gather data, but they are used in different types of studies.

Learning Objective

Distinguish between when to use surveys and when to use experiments

Key Points

- A survey is a technique that involves questionnaires and interviews of a sample population with the intention of gaining information, such as opinions or facts, about the general population.

- An experiment is an orderly procedure carried out with the goal of verifying, falsifying, or establishing the validity of a hypothesis.

- A survey would be useful if trying to determine whether or not people would be interested in trying out a new drug for headaches on the market. An experiment would test the effectiveness of this new drug.

Key Term

- placebo: an inactive substance or preparation used as a control in an experiment or test to determine the effectiveness of a medicinal drug

What is a Survey?

Survey methodology involves the study of the sampling of individual units from a population and the associated survey data collection techniques, such as questionnaire construction and methods for improving the number and accuracy of responses to surveys.

Statistical surveys are undertaken with a view towards making statistical inferences about the population being studied, and this depends strongly on the survey questions used. Polls about public opinion, public health surveys, market research surveys, government surveys, and censuses are all examples of quantitative research that use contemporary survey methodology to answer questions about a population. Although censuses do not include a “sample,” they do include other aspects of survey methodology, like questionnaires, interviewers, and nonresponse follow-up techniques. Surveys provide important information for all kinds of public information and research fields, like marketing research, psychology, health, and sociology.

Since survey research is almost always based on a sample of the population, the success of the research is dependent on the representativeness of the sample with respect to a target population of interest to the researcher.

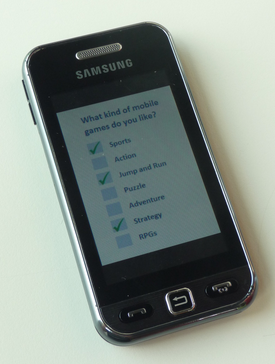

What is an Experiment?

An experiment is an orderly procedure carried out with the goal of verifying, falsifying, or establishing the validity of a hypothesis. Experiments provide insight into cause and effect by demonstrating what outcome occurs when a particular factor is manipulated. Experiments vary greatly in their goal and scale, but always rely on repeatable procedure and logical analysis of the results in a method called the scientific method. A child may carry out basic experiments to understand the nature of gravity, while teams of scientists may take years of systematic investigation to advance the understanding of a phenomenon. Experiments can vary from personal and informal (e.g. tasting a range of chocolates to find a favorite), to highly controlled (e.g. tests requiring a complex apparatus overseen by many scientists who hope to discover information about subatomic particles). Uses of experiments vary considerably between the natural and social sciences.

Scientific Method

This flow chart shows the steps of the scientific method.

In statistics, controlled experiments are often used. A controlled experiment generally compares the results obtained from an experimental sample against a control sample, which is practically identical to the experimental sample except for the one aspect whose effect is being tested (the independent variable). A good example of this would be a drug trial, where the effects of the actual drug are tested against a placebo.

When is One Technique Better Than the Other?

Surveys and experiments are both techniques used in statistics. They have similarities, but an in-depth look at these two techniques reveals how different they are. A business owner who wants to market a product needs a survey, not an experiment. On the other hand, a scientist who has discovered a new element or drug needs an experiment, not a survey, to demonstrate its usefulness. A survey involves asking different people for their opinion on a particular product or issue, whereas an experiment is a controlled study that manipulates a factor in order to test a hypothesis about its effect. Each has its place in different types of studies.

7.2: Sample Surveys

7.2.1: The Literary Digest Poll

Incorrect polling techniques used during the 1936 presidential election led to the demise of the popular magazine, The Literary Digest.

Learning Objective

Critique the problems with the techniques used by the Literary Digest Poll

Key Points

- As it had done in 1920, 1924, 1928 and 1932, The Literary Digest conducted a straw poll regarding the likely outcome of the 1936 presidential election. Before 1936, it had always correctly predicted the winner. It predicted Landon would beat Roosevelt.

- In November, Landon carried only Vermont and Maine; President F. D. Roosevelt carried the 46 other states. Landon’s electoral vote total of eight is a tie for the record low for a major-party nominee since the American political paradigm of the Democratic and Republican parties began in the 1850s.

- The polling techniques used were to blame, even though the magazine polled 10 million people and got a response from 2.4 million. It polled mostly its own readers, who had more money than the typical American during the Great Depression. Higher-income people were more likely to vote Republican.

- Subsequent statistical analysis and studies have shown it is not necessary to poll ten million people when conducting a scientific survey. A much lower number, such as 1,500 persons, is adequate in most cases so long as they are appropriately chosen.

- This debacle led to a considerable refinement of public opinion polling techniques and later came to be regarded as ushering in the era of modern scientific public opinion research.

Key Terms

- bellwether: anything that indicates future trends

- straw poll: a survey of opinion which is unofficial, casual, or ad hoc

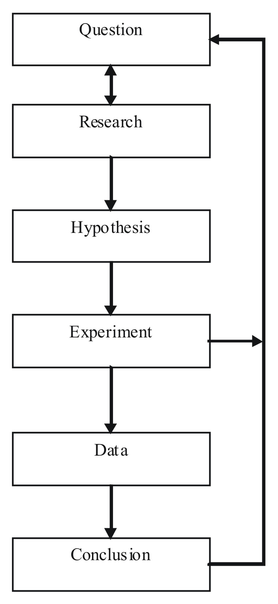

The Literary Digest

The Literary Digest was an influential general interest weekly magazine published by Funk & Wagnalls. Founded by Isaac Kaufmann Funk in 1890, it eventually merged with two similar weekly magazines, Public Opinion and Current Opinion.

The Literary Digest

Cover of the February 19, 1921 edition of The Literary Digest.

History

Beginning with early issues, the emphasis of The Literary Digest was on opinion articles and an analysis of news events. Established as a weekly news magazine, it offered condensations of articles from American, Canadian, and European publications. Type-only covers gave way to illustrated covers during the early 1900s. After Isaac Funk’s death in 1912, Robert Joseph Cuddihy became the editor. In the 1920s, the covers carried full-color reproductions of famous paintings. By 1927, The Literary Digest climbed to a circulation of over one million. Covers of the final issues displayed various photographic and photo-montage techniques. In 1938, it merged with the Review of Reviews, only to fail soon after. Its subscriber list was bought by Time.

Presidential Poll

The Literary Digest is best remembered today for the circumstances surrounding its demise. As it had done in 1920, 1924, 1928 and 1932, it conducted a straw poll regarding the likely outcome of the 1936 presidential election. Before 1936, it had always correctly predicted the winner.

The 1936 poll showed that the Republican candidate, Governor Alfred Landon of Kansas, was likely to be the overwhelming winner. This seemed possible to some, as the Republicans had fared well in Maine, where the congressional and gubernatorial elections were then held in September, as opposed to the rest of the nation, where these elections were held in November along with the presidential election, as they are today. This outcome seemed especially likely in light of the conventional wisdom, “As Maine goes, so goes the nation,” a saying coined because Maine was regarded as a “bellwether” state which usually supported the winning candidate’s party.

In November, Landon carried only Vermont and Maine; President Franklin Delano Roosevelt carried the 46 other states. Landon’s electoral vote total of eight is a tie for the record low for a major-party nominee since the American political paradigm of the Democratic and Republican parties began in the 1850s. The Democrats joked, “As goes Maine, so goes Vermont,” and the magazine was completely discredited because of the poll, folding soon thereafter.

1936 Presidential Election

This map shows the results of the 1936 presidential election. Red denotes states won by Landon/Knox, blue denotes those won by Roosevelt/Garner. Numbers indicate the number of electoral votes allotted to each state.

In retrospect, the polling techniques employed by the magazine were to blame. Although it had polled ten million individuals (of whom about 2.4 million responded, an astronomical total for any opinion poll), it had first surveyed its own readers, a group with disposable incomes well above the national average of the time, shown in part by their ability still to afford a magazine subscription during the depths of the Great Depression. It then used two other readily available lists: registered automobile owners and telephone users. While such lists might come close to providing a statistically accurate cross-section of Americans today, this assumption was manifestly incorrect in the 1930s. Both groups had incomes well above the national average of the day, which resulted in lists of voters far more likely to support Republicans than a truly typical voter of the time. In addition, although 2.4 million responses is an astronomical number, it is only 24% of those surveyed, and the low response rate to the poll was probably a factor in the debacle. It is erroneous to assume that the responders and the non-responders had the same views and merely to extrapolate from the former to the latter. Further, as subsequent statistical analysis and study have shown, it is not necessary to poll ten million people when conducting a scientific survey. A much lower number, such as 1,500 persons, is adequate in most cases so long as they are appropriately chosen.
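The claim that about 1,500 well-chosen respondents are adequate can be illustrated with the usual textbook approximation for the 95% margin of error of a proportion. This sketch assumes a simple random sample and the worst case p = 0.5; the function name `margin_of_error` and the z value of 1.96 are choices made for the example rather than anything stated in the text.

```python
from math import sqrt

def margin_of_error(n, p=0.5, z=1.96):
    """Approximate 95% margin of error for a proportion from a simple random sample."""
    return z * sqrt(p * (1 - p) / n)

# Compare a modern-sized poll with two much larger sample sizes.
for n in (1_500, 50_000, 2_400_000):
    print(f"n = {n:>9,}: +/- {100 * margin_of_error(n):.2f} percentage points")
```

Under these assumptions a sample of 1,500 already gives a margin of roughly 2.5 percentage points. The formula measures only random sampling error, so it says nothing about the selection and non-response bias described above; a biased list stays biased no matter how many people on it reply.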

George Gallup’s American Institute of Public Opinion achieved national recognition by correctly predicting the result of the 1936 election and by also correctly predicting the quite different results of the Literary Digest poll to within about 1%, using a smaller sample size of 50,000. This debacle led to a considerable refinement of public opinion polling techniques and later came to be regarded as ushering in the era of modern scientific public opinion research.

7.2.2: The Year the Polls Elected Dewey

In the 1948 presidential election, the use of quota sampling led the polls to inaccurately predict that Dewey would defeat Truman.

Learning Objective

Criticize the polling methods used in 1948 that incorrectly predicted that Dewey would win the presidency

Key Points

- Many polls, including Gallup, Roper, and Crossley, wrongly predicted the outcome of the election due to their use of quota sampling.

- Quota sampling is when each interviewer polls a certain number of people in various categories that are representative of the whole population, such as age, race, sex, and income.

- One major problem with quota sampling includes the possibility of missing an important representative category that is key to how people vote. Another is the human element involved.

- Truman, as it turned out, won the electoral vote by a 303-189 majority over Dewey, although a swing of just a few thousand votes in Ohio, Illinois, and California would have produced a Dewey victory.

- One of the most famous blunders came when the Chicago Tribune printed the inaccurate headline “Dewey Defeats Truman” on November 3, 1948, the day after Truman defeated Dewey.

Key Terms

- quota sampling: a sampling method that chooses a representative cross-section of the population by taking into consideration each important characteristic of the population proportionally, such as income, sex, race, age, etc.

- margin of error: an expression of the lack of precision in the results obtained from a sample

- quadrennial: happening every four years

1948 Presidential Election

The United States presidential election of 1948 was the 41st quadrennial presidential election, held on Tuesday, November 2, 1948. Incumbent President Harry S. Truman, the Democratic nominee, successfully ran for election against Thomas E. Dewey, the Republican nominee.

This election is considered to be the greatest election upset in American history. Virtually every prediction (with or without public opinion polls) indicated that Truman would be defeated by Dewey. Both parties had severe ideological splits, with the far left and far right of the Democratic Party running third-party campaigns. Truman’s surprise victory was the fifth consecutive presidential win for the Democratic Party, a record never surpassed since contests against the Republican Party began in the 1850s. Truman’s feisty campaign style energized his base of traditional Democrats, most of the white South, Catholic and Jewish voters, and—in a surprise—Midwestern farmers. Thus, Truman’s election confirmed the Democratic Party’s status as the nation’s majority party, a status it would retain until the conservative realignment in 1968.

Incorrect Polls

As the campaign drew to a close, the polls showed Truman was gaining. Though Truman trailed in all nine of the Gallup Poll’s post-convention surveys, Dewey’s Gallup lead dropped from 17 points in late September to 9 points in mid-October to just 5 points by the end of the month, just above the poll’s margin of error. Although Truman was gaining momentum, most political analysts were reluctant to break with the conventional wisdom and say that a Truman victory was a serious possibility. The Roper Poll had suspended its presidential polling at the end of September, barring “some development of outstanding importance,” which, in their subsequent view, never occurred. Dewey was not unaware of his slippage, but he had been convinced by his advisers and family not to counterattack the Truman campaign.

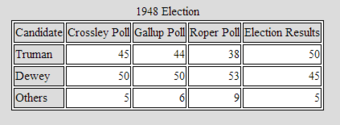

Let’s take a closer look at the polls. The Gallup, Roper, and Crossley polls all predicted a Dewey win. The actual results are shown in the following table. How did this happen?

1948 Election

The table shows the results of three polls against the actual results in the 1948 presidential election. Notice that Dewey was ahead in all three polls, but ended up losing the election.

The Crossley, Gallup, and Roper organizations all used quota sampling. Each interviewer was assigned a specified number of subjects to interview. Moreover, the interviewer was required to interview specified numbers of subjects in various categories, based on residential area, sex, age, race, economic status, and other variables. The intent of quota sampling is to ensure that the sample represents the population in all essential respects.

This seems like a good method on the surface, but where does one stop? What if a significant criterion was left out, something that deeply affected the way in which people vote? This would cause significant error in the results of the poll. In addition, quota sampling involves a human element. Within their quotas, interviewers were left to poll whomever they chose. Research shows that the polls tended to overestimate the Republican vote. In earlier years, the winning margin was large enough that most polls still predicted the winner correctly despite this bias, but in 1948, their luck ran out. Quota sampling had to go.

Mistake in the Newspapers

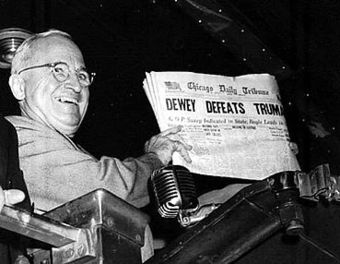

One of the most famous blunders came when the Chicago Tribune printed the erroneous headline “Dewey Defeats Truman” on November 3, 1948, the day after incumbent United States President Harry S. Truman beat Republican challenger and Governor of New York Thomas E. Dewey.

The paper’s erroneous headline became notorious after a jubilant Truman was photographed holding a copy of the paper during a stop at St. Louis Union Station while returning by train from his home in Independence, Missouri to Washington, D.C.

Dewey Defeats Truman

President Truman holds up the newspaper that wrongly reported his defeat.

Truman, as it turned out, won the electoral vote by a 303-189 majority over Dewey, although a swing of just a few thousand votes in Ohio, Illinois, and California would have produced a Dewey victory.

7.2.3: Using Chance in Survey Work

When conducting a survey, a sample can be chosen by chance or by a more deliberate method.

Learning Objective

Distinguish between probability samples and non-probability samples for surveys

Key Points

- A probability sample is one in which every unit in the population has a chance (greater than zero) of being selected in the sample, and this probability can be accurately determined.

- Probability sampling includes simple random sampling, systematic sampling, stratified sampling, and cluster sampling. These various ways of probability sampling have two things in common: every element has a known nonzero probability of being sampled, and random selection is involved at some point.

- Non-probability sampling is any sampling method wherein some elements of the population have no chance of selection (these are sometimes referred to as ‘out of coverage’/‘undercovered’), or where the probability of selection can’t be accurately determined.

Key Terms

- purposive sampling

-

occurs when the researchers choose the sample based on who they think would be appropriate for the study; used primarily when there is a limited number of people that have expertise in the area being researched

- nonresponse

-

the absence of a response

In order to conduct a survey, a sample from the population must be chosen. This sample can be chosen using chance, or it can be chosen more systematically.

Probability Sampling for Surveys

A probability sample is one in which every unit in the population has a chance (greater than zero) of being selected in the sample, and this probability can be accurately determined. The combination of these traits makes it possible to produce unbiased estimates of population totals, by weighting sampled units according to their probability of selection.

Let’s say we want to estimate the total income of adults living on a given street by using a survey. We visit each household on that street, identify all adults living there, and randomly select one adult from each household. (For example, we can allocate each person a random number, generated from a uniform distribution between 0 and 1, and select the person with the highest number in each household.) We then interview the selected person and find their income. People living on their own are certain to be selected, so we simply add their income to our estimate of the total. But a person living in a household of two adults has only a one-in-two chance of selection. To reflect this, when we come to such a household, we would count the selected person’s income twice towards the total. (The person who is selected from that household can be loosely viewed as also representing the person who isn’t selected.)
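The weighting rule in this street example can be sketched in a few lines of Python. The household incomes below are made up for illustration, and `estimate_total_income` is a hypothetical helper rather than part of any survey library. Each selected person's income is multiplied by the number of adults in the household, which is the inverse of that person's selection probability.

```python
import random

def estimate_total_income(households, rng):
    """Estimate a street's total adult income from one sampled adult per household."""
    total = 0.0
    for incomes in households:
        # Pick one adult uniformly at random: selection probability is 1/len(incomes).
        selected = rng.choice(incomes)
        # Weight the selected income by the inverse of the selection probability.
        total += selected * len(incomes)
    return total

# Hypothetical street: each inner list holds the incomes of one household's adults.
street = [[30000], [42000, 58000], [25000, 35000, 60000]]
true_total = sum(sum(household) for household in street)

# Repeat the survey many times to see how the estimates behave on average.
rng = random.Random(0)
estimates = [estimate_total_income(street, rng) for _ in range(20000)]
average_estimate = sum(estimates) / len(estimates)
print(true_total, round(average_estimate))
```

Averaged over many repeated surveys, the estimates should settle close to the true total, which is what "unbiased" means here. Any single survey can still miss in either direction.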

Income in the United States

Graph of United States income distribution from 1947 through 2007 inclusive, normalized to 2007 dollars. The data is from the US Census, which is a survey over the entire population, not just a sample.

In the above example, not everybody has the same probability of selection; what makes it a probability sample is the fact that each person’s probability is known. When every element in the population does have the same probability of selection, this is known as an ‘equal probability of selection’ (EPS) design. Such designs are also referred to as ‘self-weighting’ because all sampled units are given the same weight.

Probability sampling includes: Simple Random Sampling, Systematic Sampling, Stratified Sampling, Probability Proportional to Size Sampling, and Cluster or Multistage Sampling. These various ways of probability sampling have two things in common: every element has a known nonzero probability of being sampled, and random selection is involved at some point.
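As a rough illustration, three of these designs can be sketched with Python's standard `random` module. The population of 100 numbered units and the two strata are assumptions made up for the example. In each design every unit has the same known chance (10 in 100) of being selected, and a random draw is involved at some point.

```python
import random

rng = random.Random(42)
population = list(range(1, 101))  # hypothetical units labelled 1..100

# Simple random sample: every subset of 10 units is equally likely.
srs = rng.sample(population, 10)

# Systematic sample: pick a random starting point, then take every k-th unit.
k = len(population) // 10
start = rng.randrange(k)
systematic = population[start::k]

# Stratified sample: split the population into strata,
# then draw a simple random sample within each stratum.
strata = {"low": population[:50], "high": population[50:]}
stratified = [unit for units in strata.values() for unit in rng.sample(units, 5)]

print(sorted(srs), systematic, sorted(stratified))
```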

Non-Probability Sampling for Surveys

Non-probability sampling is any sampling method wherein some elements of the population have no chance of selection (these are sometimes referred to as ‘out of coverage’/‘undercovered’), or where the probability of selection can’t be accurately determined. It involves the selection of elements based on assumptions regarding the population of interest, which forms the criteria for selection. Hence, because the selection of elements is nonrandom, non-probability sampling does not allow the estimation of sampling errors. These conditions give rise to exclusion bias, placing limits on how much information a sample can provide about the population. Information about the relationship between sample and population is limited, making it difficult to extrapolate from the sample to the population.

Let’s say we visit every household in a given street and interview the first person to answer the door. In any household with more than one occupant, this is a non-probability sample, because some people are more likely to answer the door (e.g. an unemployed person who spends most of their time at home is more likely to answer than an employed housemate who might be at work when the interviewer calls) and it’s not practical to calculate these probabilities.

Non-probability sampling methods include accidental sampling, quota sampling, and purposive sampling. In addition, nonresponse effects may turn any probability design into a non-probability design if the characteristics of nonresponse are not well understood, since nonresponse effectively modifies each element’s probability of being sampled.

7.2.4: How Well Do Probability Methods Work?

Even when using probability sampling methods, bias can still occur.

Learning Objective

Analyze the problems associated with probability sampling

Key Points

- Undercoverage occurs when some groups in the population are left out of the process of choosing the sample.

- Nonresponse occurs when an individual chosen for the sample can’t be contacted or does not cooperate.

- Response bias occurs when a respondent lies about his or her true beliefs.

- The wording of questions, especially if they are leading questions, can affect the outcome of a survey.

- A larger sample size reduces chance error, but it does not correct bias caused by undercoverage, nonresponse, or question wording.

Key Terms

- undercoverage

-

Occurs when a survey fails to reach a certain portion of the population.

- nonresponse

-

the absence of a response

- response bias

-

Occurs when the answers given by respondents do not reflect their true beliefs.

Probability vs. Non-probability Sampling

In earlier sections, we discussed how samples can be chosen. Failure to use probability sampling may result in bias or systematic errors in the way the sample represents the population. This is especially true of voluntary response samples, in which respondents choose for themselves whether to take part in a survey, and convenience samples, in which the individuals easiest to reach are chosen.

However, even probability sampling methods that use chance to select a sample are prone to some problems. Recall some of the methods used in probability sampling: simple random samples, stratified samples, cluster samples, and systematic samples. In these methods, each member of the population has a chance of being chosen for the sample, and that chance is a known probability.

Problems With Probability Sampling

Random sampling eliminates some of the bias that presents itself in sampling, but when a sample is chosen by human beings, there are always going to be some unavoidable problems. When a sample is chosen, we first need an accurate and complete list of the population. This type of list is often not available, causing most samples to suffer from undercoverage. For example, if we choose a sample from a list of households, we will miss those who are homeless, in prison, or living in a college dorm. In another example, a telephone survey calling landline phones will potentially miss those who are unlisted, those who only use a cell phone, and those who do not have a phone at all. Both of these examples will cause a biased sample in which poor people, whose opinions may very well differ from those of the rest of the population, are underrepresented.
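The effect of undercoverage can be illustrated with a small simulation. All numbers below are invented for the sketch: a population of 10,000 people in which the 2,000 people missing from the sampling frame hold different opinions from the 8,000 who are listed.

```python
import random

rng = random.Random(7)

# Hypothetical population: 1 = supports a policy, 0 = opposes it.
# The sampling frame (say, a household list) covers only 8,000 of the 10,000 people.
in_frame = [1] * 3000 + [0] * 5000
not_in_frame = [1] * 1500 + [0] * 500

population = in_frame + not_in_frame
true_share = sum(population) / len(population)  # share of supporters overall

def frame_estimate(n):
    """Share of supporters in a simple random sample of n people drawn from the frame."""
    sample = rng.sample(in_frame, n)
    return sum(sample) / n

small_sample = frame_estimate(200)
large_sample = frame_estimate(4000)
print(true_share, small_sample, large_sample)
```

In this sketch both estimates are drawn toward the share of supporters within the frame (3,000 of 8,000), not toward the population value. Taking a much larger sample from the same incomplete list narrows the chance error but leaves the gap caused by undercoverage in place.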

Another source of bias is nonresponse, which occurs when a selected individual cannot be contacted or refuses to participate in the survey. Many people do not pick up the phone when they do not know the person who is calling. Nonresponse is often higher in urban areas, so most researchers conducting surveys will substitute other people in the same area to avoid favoring rural areas. However, if the people eventually contacted differ from those who are rarely at home or refuse to answer questions for one reason or another, some bias will still be present.

Ringing Phone

This image shows a ringing phone that is not being answered.

A third source of bias is response bias. Respondents may not answer questions truthfully, especially if the survey asks about illegal or unpopular behavior. The race and sex of the interviewer may influence people to respond in a way that is more extreme than their true beliefs. Careful training of pollsters can greatly reduce response bias.

Finally, another source of bias can come in the wording of questions. Confusing or leading questions can strongly influence the way a respondent answers questions.

Conclusion

When reading the results of a survey, find out the exact questions asked, the rate of nonresponse, and the survey method before you trust the poll. In addition, remember that a larger sample size reduces chance error but does not correct for the sources of bias described above.

7.2.5: The Gallup Poll

The Gallup Poll is a public opinion poll that conducts surveys in 140 countries around the world.

Learning Objective

Examine the pros and cons of the way in which the Gallup Poll is conducted

Key Points

- The Gallup Poll measures and tracks the public’s attitudes concerning virtually every political, social, and economic issue of the day in 140 countries around the world.

- The Gallup Polls have been traditionally known for their accuracy in predicting presidential elections in the United States from 1936 to 2008. They were only incorrect in 1948 and 1976.

- Today, Gallup samples people using both landline telephones and cell phones. It has been widely criticized for not adapting quickly enough to a society in which more and more people use only cell phones.

Key Terms

- Objective

-

not influenced by emotions or prejudices

- public opinion polls

-

surveys designed to represent the beliefs of a population by asking a series of questions and then extrapolating generalities in ratio or within confidence intervals

Overview of the Gallup Organization

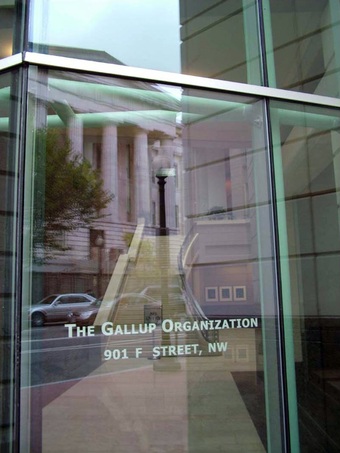

Gallup, Inc. is a research-based performance-management consulting company. Originally founded by George Gallup in 1935, the company became famous for its public opinion polls, which were conducted in the United States and other countries. Today, Gallup has more than 40 offices in 27 countries. The world headquarters are located in Washington, D.C., while the operational headquarters are in Omaha, Nebraska. Its current Chairman and CEO is Jim Clifton.

The Gallup Organization

The Gallup, Inc. world headquarters in Washington, D.C. The National Portrait Gallery can be seen in the reflection.

History of Gallup

George Gallup founded the American Institute of Public Opinion, the precursor to the Gallup Organization, in Princeton, New Jersey in 1935. He wished to objectively determine the opinions held by the people. To ensure his independence and objectivity, Dr. Gallup resolved that he would undertake no polling that was paid for or sponsored in any way by special interest groups such as the Republican and Democratic parties, a commitment that Gallup upholds to this day.

In 1936, Gallup successfully predicted that Franklin Roosevelt would defeat Alfred Landon for the U.S. presidency; this event quickly popularized the company. In 1938, Dr. Gallup and Gallup Vice President David Ogilvy began conducting market research for advertising companies and the film industry. In 1958, the modern Gallup Organization was formed when George Gallup grouped all of his polling operations into one organization. Since then, Gallup has seen huge expansion into several other areas.

The Gallup Poll

The Gallup Poll is the division of Gallup that regularly conducts public opinion polls in more than 140 countries around the world. Gallup Polls are often referenced in the mass media as a reliable and objective audience measurement of public opinion. Gallup Poll results, analyses, and videos are published daily on Gallup.com in the form of data-driven news. The poll loses about $10 million a year but gives the company the visibility of a very well-known brand.

Historically, the Gallup Poll has measured and tracked the public’s attitudes concerning virtually every political, social, and economic issue of the day, including highly sensitive and controversial subjects. In 2005, Gallup began its World Poll, which continually surveys citizens in more than 140 countries, representing 95% of the world’s adult population. General and regional-specific questions, developed in collaboration with the world’s leading behavioral economists, are organized into powerful indexes and topic areas that correlate with real-world outcomes.

Reception of the Poll

The Gallup Polls have been recognized in the past for their accuracy in predicting the outcome of United States presidential elections, though they have come under criticism more recently. From 1936 to 2008, Gallup correctly predicted the winner of each election, with the notable exceptions of the 1948 Thomas Dewey-Harry S. Truman election, when nearly all pollsters predicted a Dewey victory, and the 1976 election, when they inaccurately projected a slim victory by Gerald Ford over Jimmy Carter. For the 2008 U.S. presidential election, Gallup correctly predicted the winner, but was rated 17th out of 23 polling organizations in terms of the precision of its pre-election polls relative to the final results. In 2012, Gallup’s final election survey had Mitt Romney at 49% and Barack Obama at 48%, compared to the election results showing Obama with 51.1% to Romney’s 47.2%. Poll analyst Nate Silver found that Gallup’s results were the least accurate of the 23 major polling firms he analyzed, with the largest average error of 7.2 points from the final result. Frank Newport, the Editor-in-Chief of Gallup, responded to the criticism by stating that Gallup simply makes an estimate of the national popular vote rather than predicting the winner, and that their final poll was within the statistical margin of error.

In addition to the poor results of the poll in 2012, many people have criticized Gallup’s sampling techniques. Gallup conducts 1,000 interviews per day, 350 days out of the year, among both landline and cell phones across the U.S., for its health and well-being survey. Although Gallup surveys both landline and cell phones, only 150 of every 1,000 interviews (15%) are conducted by cell phone. The share of the U.S. population that relies only on cell phones (owning no landline connection) is more than double that figure, at 34%. This gap has been a major recent criticism of the reliability of Gallup polling compared to other polls: it has failed to compensate accurately for the rapid growth in the number of “cell phone only” Americans.

7.2.6: Telephone Surveys

Telephone surveys can reach a wide range of people very quickly and very inexpensively.

Learning Objective

Identify the advantages and disadvantages of telephone surveys

Key Points

- About 95% of people in the United States have a telephone, so conducting a poll by calling people is a good way to reach nearly every part of the population.

- Calling people by telephone is a quick process, allowing researchers to gain a lot of data in a short amount of time.

- In certain polls, the interviewer or interviewee (or both) may wish to remain anonymous, which can be achieved if the poll is conducted via telephone by a third party.

- Non-response bias is one of the major problems with telephone surveys as many people do not answer calls from people they do not know.

- Due to certain uncontrollable factors (e.g., unlisted phone numbers, people who only use cell phones, or instances when no one is home/available to take pollster calls), undercoverage can negatively affect the outcome of telephone surveys.

Key Terms

- undercoverage

-

Occurs when a survey fails to reach a certain portion of the population.

- response bias

-

Occurs when the answers given by respondents do not reflect their true beliefs.

- non-response bias

-

Occurs when the sample becomes biased because some of those initially selected refuse to respond.

A telephone survey is a type of opinion poll used by researchers. As with other methods of polling, there are advantages and disadvantages to utilizing telephone surveys.

Advantages

- Large scale accessibility. About 95% of people in the United States have a telephone, so conducting a poll via telephone is a good way to reach most parts of the population.

- Efficient data collection. Conducting interviews by telephone is quick, allowing researchers to gain a large amount of data in a short amount of time. Previously, pollsters had to go physically to each interviewee’s home, which was more time consuming.

- Inexpensive. Phone interviews are not costly (e.g., telephone researchers do not pay for travel).

- Anonymity. In certain polls, the interviewer or interviewee (or both) may wish to remain anonymous, which can be achieved if the poll is conducted over the phone by a third party.

Disadvantages

- Lack of visual materials. Depending on what the researchers are asking, sometimes it may be helpful for the respondent to see a product in person, which of course, cannot be done over the phone.

- Call screening. As some people do not answer calls from strangers, or may refuse to answer the poll, poll samples are not always representative samples from a population due to what is known as non-response bias. In this type of bias, the characteristics of those who agree to be interviewed may be markedly different from those who decline. That is, the actual sample is a biased version of the population the pollster wants to analyze. If those who refuse to answer, or are never reached, have the same characteristics as those who do answer, then the final results should be unbiased. However, if those who do not answer have different opinions, then the results have bias. In terms of election polls, studies suggest that bias effects are small, but each polling firm has its own techniques for adjusting weights to minimize selection bias.

- Undercoverage. Undercoverage is a highly prevalent source of bias. If the pollsters only choose telephone numbers from a telephone directory, they miss those who have unlisted landlines or only have cell phones (which is becoming more the norm). In addition, if the pollsters only conduct calls between 9:00 a.m. and 5:00 p.m., Monday through Friday, they are likely to miss a huge portion of the working population, who may have very different opinions than the non-working population.
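The call-screening point above says that nonresponse only biases a poll when those who answer differ from those who do not. A minimal simulation can make this concrete. The population, the response rates, and the helper `poll` are all assumptions invented for this sketch.

```python
import random

def poll(population, response_prob, n, rng):
    """Call n randomly chosen people; return the share of 'yes' among those who respond."""
    answers = []
    # rng.choices draws with replacement, which is close enough for a sketch.
    for opinion in rng.choices(population, k=n):
        # Each person responds with a probability that may depend on their opinion.
        if rng.random() < response_prob[opinion]:
            answers.append(opinion)
    return sum(answers) / len(answers)

rng = random.Random(1)
population = [1] * 5000 + [0] * 5000  # 1 = yes, 0 = no; the true share of 'yes' is 0.5

# Case 1: both groups pick up the phone at the same rate.
same_rate = poll(population, {1: 0.3, 0: 0.3}, 20000, rng)

# Case 2: 'yes' voters are three times as likely to respond as 'no' voters.
different_rate = poll(population, {1: 0.45, 0: 0.15}, 20000, rng)

print(same_rate, different_rate)
```

With equal response rates the estimate should stay near the true share even though most calls go unanswered. With unequal rates the respondents over-represent one group, and calling more numbers does not repair that.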

7.2.7: Chance Error and Bias

Chance error and bias are two different forms of error associated with sampling.

Learning Objective

Differentiate between random, or chance, error and bias

Key Points

- The error that is associated with the unpredictable variation in the sample is called a random, or chance, error. It is only an “error” in the sense that it would automatically be corrected if we could survey the entire population.

- Random error cannot be eliminated completely, but it can be reduced by increasing the sample size.

- A sampling bias is a bias in which a sample is collected in such a way that some members of the intended population are less likely to be included than others.

- There are various types of bias, including selection from a specific area, self-selection, pre-screening, and exclusion.

Key Terms

- bias

-

Inclination towards something; predisposition, partiality, prejudice, preference, predilection.

- random sampling

-

a method of selecting a sample from a statistical population so that every subject has an equal chance of being selected

- standard error

-

The standard deviation of the sampling distribution of a statistic; it measures how much the statistic varies from one sample to another.

Sampling Error

In statistics, a sampling error is the error caused by observing a sample instead of the whole population. The sampling error can be found by subtracting the value of a parameter from the value of a statistic. The variations in the possible sample values of a statistic can theoretically be expressed as sampling errors, although in practice the exact sampling error is typically unknown.

In sampling, there are two main types of error: systematic errors (or biases) and random errors (or chance errors).

Random/Chance Error

Random sampling is used to ensure that a sample is truly representative of the entire population. If we were to select a perfect sample (which does not exist), we would reach the same exact conclusions that we would have reached if we had surveyed the entire population. Of course, this is not possible, and the error that is associated with the unpredictable variation in the sample is called random, or chance, error. This is only an “error” in the sense that it would automatically be corrected if we could survey the entire population rather than just a sample taken from it. It is not a mistake made by the researcher.

Random error always exists. The size of the random error, however, can generally be controlled by taking a large enough random sample from the population. Unfortunately, the high cost of doing so can be prohibitive. If the observations are collected from a random sample, statistical theory provides probabilistic estimates of the likely size of the error for a particular statistic or estimator. These are often expressed in terms of the statistic’s standard error.
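A short simulation can show how random error shrinks as the sample grows. The population below (20,000 values drawn from a normal distribution with mean 50 and standard deviation 10) and the helper `mean_abs_error` are assumptions chosen only for illustration.

```python
import random
import statistics

def mean_abs_error(population, n, trials, rng):
    """Average absolute gap between a sample mean and the population mean."""
    mu = statistics.fmean(population)
    errors = [abs(statistics.fmean(rng.sample(population, n)) - mu)
              for _ in range(trials)]
    return statistics.fmean(errors)

rng = random.Random(3)
population = [rng.gauss(50, 10) for _ in range(20000)]

# Compare the typical chance error for samples of 25 and of 400.
small = mean_abs_error(population, 25, 1000, rng)
large = mean_abs_error(population, 400, 1000, rng)
print(small, large)
```

The typical error for samples of 400 should come out at roughly a quarter of the error for samples of 25, since the sample is 16 times larger and chance error falls with the square root of the sample size. The error never reaches zero unless the whole population is surveyed.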

Bias

In statistics, sampling bias is a bias in which a sample is collected in such a way that some members of the intended population are less likely to be included than others. It results in a biased sample, a non-random sample of a population in which not all individuals, or instances, were equally likely to have been selected. If this is not accounted for, results can be erroneously attributed to the phenomenon under study rather than to the method of sampling.

There are various types of sampling bias:

- Selection from a specific area. For example, a survey of high school students to measure teenage use of illegal drugs will be a biased sample because it does not include home-schooled students or dropouts.

- Self-selection bias, which is possible whenever the group of people being studied has any form of control over whether to participate. Participants’ decision to participate may be correlated with traits that affect the study, making the participants a non-representative sample. For example, people who have strong opinions or substantial knowledge may be more willing to spend time answering a survey than those who do not.

- Pre-screening of trial participants, or advertising for volunteers within particular groups. For example, a study to “prove” that smoking does not affect fitness might recruit at the local fitness center, but advertise for smokers during the advanced aerobics class and for non-smokers during the weight loss sessions.

- Exclusion bias, or exclusion of particular groups from the sample. For example, subjects may be left out if they either migrated into the study area or have moved out of the area.

7.3: Sampling Distributions

7.3.1: What Is a Sampling Distribution?

The sampling distribution of a statistic is the distribution of the statistic for all possible samples from the same population of a given size.

Learning Objective

Recognize the characteristics of a sampling distribution

Key Points

- A critical part of inferential statistics involves determining how far sample statistics are likely to vary from each other and from the population parameter.

- The sampling distribution of a statistic is the distribution of that statistic, considered as a random variable, when derived from a random sample of size $n$.

- Sampling distributions allow analytical considerations to be based on the sampling distribution of a statistic rather than on the joint probability distribution of all the individual sample values.

- The sampling distribution depends on: the underlying distribution of the population, the statistic being considered, the sampling procedure employed, and the sample size used.

Key Terms

- inferential statistics

-

A branch of mathematics that involves drawing conclusions about a population based on sample data drawn from it.

- sampling distribution

-

The probability distribution of a given statistic based on a random sample.

Suppose you randomly sampled 10 women between the ages of 21 and 35 years from the population of women in Houston, Texas, and then computed the mean height of your sample. You would not expect your sample mean to be equal to the mean of all women in Houston. It might be somewhat lower or higher, but it would not equal the population mean exactly. Similarly, if you took a second sample of 10 women from the same population, you would not expect the mean of this second sample to equal the mean of the first sample.

Houston Skyline

Suppose you randomly sampled 10 people from the population of women in Houston, Texas between the ages of 21 and 35 years and computed the mean height of your sample. You would not expect your sample mean to be equal to the mean of all women in Houston.

Inferential statistics involves generalizing from a sample to a population. A critical part of inferential statistics involves determining how far sample statistics are likely to vary from each other and from the population parameter. These determinations are based on sampling distributions. The sampling distribution of a statistic is the distribution of that statistic, considered as a random variable, when derived from a random sample of size $n$. It may be considered as the distribution of the statistic for all possible samples from the same population of a given size. Sampling distributions allow analytical considerations to be based on the sampling distribution of a statistic rather than on the joint probability distribution of all the individual sample values.

The sampling distribution depends on: the underlying distribution of the population, the statistic being considered, the sampling procedure employed, and the sample size used. For example, consider a normal population with mean $\mu$ and variance $\sigma^2$. Assume we repeatedly take samples of a given size from this population and calculate the arithmetic mean for each sample. This statistic is then called the sample mean. Each sample has its own average value, and the distribution of these averages is called the “sampling distribution of the sample mean.” This distribution is normal since the underlying population is normal, although sampling distributions may also often be close to normal even when the population distribution is not.
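The repeated-sampling idea can be approximated by simulation. The sketch below assumes a hypothetical normal population with mean 100 and standard deviation 15 and draws 20,000 samples of size 9. These values are arbitrary choices for the example.

```python
import random
import statistics

rng = random.Random(11)
mu, sigma, n = 100.0, 15.0, 9  # hypothetical population parameters and sample size

# Draw many samples and record the mean of each one.
sample_means = [
    statistics.fmean([rng.gauss(mu, sigma) for _ in range(n)])
    for _ in range(20000)
]

center = statistics.fmean(sample_means)  # should be close to mu
spread = statistics.stdev(sample_means)  # should be close to sigma / sqrt(n)
print(center, spread, sigma / n ** 0.5)
```

The collection `sample_means` is an empirical stand-in for the sampling distribution of the sample mean. Its center should sit near the population mean, and its spread should be close to the population standard deviation divided by the square root of the sample size.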

An alternative to the sample mean is the sample median. When calculated from the same population, it has a different sampling distribution to that of the mean and is generally not normal (but it may be close for large sample sizes).
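To compare the two statistics, the following sketch draws repeated samples of size 25 from a standard normal population (an assumption made for the example) and records both the mean and the median of each sample.

```python
import random
import statistics

rng = random.Random(5)
means, medians = [], []
for _ in range(5000):
    sample = [rng.gauss(0, 1) for _ in range(25)]
    means.append(statistics.fmean(sample))
    medians.append(statistics.median(sample))

# Spread of each statistic across the repeated samples.
sd_mean = statistics.stdev(means)
sd_median = statistics.stdev(medians)
print(sd_mean, sd_median)
```

For a normal population the sample median tends to vary more from sample to sample than the sample mean, so `sd_median` should come out larger than `sd_mean`. This is one way in which the two sampling distributions differ.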

7.3.2: Properties of Sampling Distributions

Knowledge of the sampling distribution can be very useful in making inferences about the overall population.

Learning Objective

Describe the general properties of sampling distributions and the use of standard error in analyzing them

Key Points

- In practice, one will collect sample data and, from these data, estimate parameters of the population distribution.

- Knowing the degree to which means from different samples would differ from each other and from the population mean would give you a sense of how close your particular sample mean is likely to be to the population mean.

- The standard deviation of the sampling distribution of a statistic is referred to as the standard error of that quantity.

- If all the sample means were very close to the population mean, then the standard error of the mean would be small.

- On the other hand, if the sample means varied considerably, then the standard error of the mean would be large.

Key Terms

- inferential statistics

-

A branch of mathematics that involves drawing conclusions about a population based on sample data drawn from it.

- sampling distribution

-

The probability distribution of a given statistic based on a random sample.

Sampling Distributions and Inferential Statistics

Sampling distributions are important for inferential statistics. In practice, one will collect sample data and, from these data, estimate parameters of the population distribution. Thus, knowledge of the sampling distribution can be very useful in making inferences about the overall population.

For example, knowing the degree to which means from different samples differ from each other and from the population mean would give you a sense of how close your particular sample mean is likely to be to the population mean. Fortunately, this information is directly available from a sampling distribution. The most common measure of how much sample means differ from each other is the standard deviation of the sampling distribution of the mean. This standard deviation is called the standard error of the mean.

Standard Error

The standard deviation of the sampling distribution of a statistic is referred to as the standard error of that quantity. For the case where the statistic is the sample mean, and samples are uncorrelated, the standard error is:

$$SE_{\bar{x}} = \frac{s}{\sqrt{n}}$$

where $s$ is the sample standard deviation and $n$ is the size (number of items) of the sample. An important implication of this formula is that the sample size must be quadrupled (multiplied by 4) to achieve half the measurement error. When designing statistical studies where cost is a factor, this may have a role in understanding cost-benefit tradeoffs.
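As a rough illustration of the quadrupling rule, the sketch below computes the standard error of the mean as the sample standard deviation divided by the square root of the sample size. The function names and the values 12, 25, and 100 are hypothetical and chosen only for this example.

```python
import math
import statistics

def standard_error(sample):
    """Estimated standard error of the mean: s / sqrt(n)."""
    s = statistics.stdev(sample)  # sample standard deviation (n - 1 denominator)
    return s / math.sqrt(len(sample))

def se_from_s(s, n):
    """Standard error from a known sample standard deviation s and sample size n."""
    return s / math.sqrt(n)

# Hypothetical values: the same standard deviation with n = 25 and n = 100.
se_small = se_from_s(12.0, 25)
se_large = se_from_s(12.0, 100)

# Quadrupling the sample size halves the standard error.
print(se_small, se_large, se_small / se_large)
```

Because $n$ appears under a square root, each further halving of the error costs four times as many observations.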

If all the sample means were very close to the population mean, then the standard error of the mean would be small. On the other hand, if the sample means varied considerably, then the standard error of the mean would be large. To be specific, assume your sample mean is 125 and you estimated that the standard error of the mean is 5. If you had a normal distribution, then it would be likely that your sample mean would be within 10 units of the population mean since most of a normal distribution is within two standard deviations of the mean.
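The "within two standard deviations" reasoning can be checked numerically. This is a minimal sketch that assumes the sampling distribution is exactly normal; the helper `prob_within` is a name introduced here for illustration.

```python
import math

def prob_within(k):
    """P(|Z| <= k) for a standard normal variable Z."""
    return math.erf(k / math.sqrt(2))

sample_mean = 125.0
standard_error = 5.0

# Two standard errors on either side of the sample mean.
low = sample_mean - 2 * standard_error
high = sample_mean + 2 * standard_error

# Share of a normal distribution lying within two standard deviations of its mean.
coverage = prob_within(2)
print(low, high, round(coverage, 3))
```

Under that normality assumption, roughly 95% of sample means fall within 10 units of the population mean, which is what "likely" means in the paragraph above.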

More Properties of Sampling Distributions

- The overall shape of the distribution is symmetric and approximately normal.

- There are no outliers or other important deviations from the overall pattern.

- The center of the distribution is very close to the true population mean.

A statistical study can be said to be biased when one outcome is systematically favored over another. However, the study can be said to be unbiased if the mean of its sampling distribution is equal to the true value of the parameter being estimated.

Finally, the variability of a statistic is described by the spread of its sampling distribution. This spread is determined by the sampling design and the size of the sample. Larger samples give smaller spread. As long as the population is much larger than the sample (at least 10 times as large), the spread of the sampling distribution is approximately the same for any population size.

7.3.3: Creating a Sampling Distribution

Learn to create a sampling distribution from a discrete set of data.

Learning Objective

Differentiate between a frequency distribution and a sampling distribution

Key Points

- Consider three pool balls, each with a number on it.

- Two of the balls are selected randomly (with replacement), and the average of their numbers is computed.

- The relative frequencies are equal to the frequencies divided by nine because there are nine possible outcomes.

- The distribution created from these relative frequencies is called the sampling distribution of the mean.

- As the number of samples approaches infinity, the frequency distribution will approach the sampling distribution.

Key Terms

- sampling distribution

-

The probability distribution of a given statistic based on a random sample.

- frequency distribution

-

a representation, either in a graphical or tabular format, which displays the number of observations within a given interval

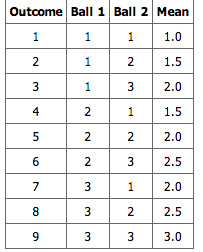

We will illustrate the concept of sampling distributions with a simple example. Consider three pool balls, each with a number on it. Two of the balls are selected randomly (with replacement), and the average of their numbers is computed. All possible outcomes are shown below.

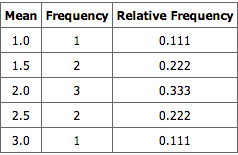

Pool Ball Example 1

This table shows all the possible outcomes of selecting two pool balls randomly from a population of three.

Notice that all the means are either 1.0, 1.5, 2.0, 2.5, or 3.0. The frequencies of these means are shown below. The relative frequencies are equal to the frequencies divided by nine because there are nine possible outcomes.

Pool Ball Example 2

This table shows the frequency of means for $n = 2$.
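The frequencies and relative frequencies described above can be reproduced by enumerating all nine ordered outcomes. The sketch below is one way to do this; the variable names are illustrative.

```python
from collections import Counter
from fractions import Fraction
from itertools import product

balls = [1, 2, 3]

# All ordered pairs drawn with replacement: 3 * 3 = 9 outcomes.
outcomes = list(product(balls, repeat=2))

# Mean of each pair, kept as an exact fraction.
means = [Fraction(a + b, 2) for a, b in outcomes]

# Frequency of each mean, and relative frequency (frequency divided by nine).
frequencies = Counter(means)
relative = {m: Fraction(c, len(outcomes)) for m, c in frequencies.items()}

for m in sorted(relative):
    print(float(m), frequencies[m], relative[m])
```

Using `Fraction` keeps the relative frequencies exact, so they can be checked to sum to 1.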

The figure below shows a relative frequency distribution of the means. This distribution is also a probability distribution since the $y$-axis is the probability of obtaining a given mean from a sample of two balls in addition to being the relative frequency.

Relative Frequency Distribution

Relative frequency distribution of our pool ball example.

The distribution shown in the above figure is called the sampling distribution of the mean. Specifically, it is the sampling distribution of the mean for a sample size of 2 ($n = 2$). For this simple example, the distribution of pool balls and the sampling distribution are both discrete distributions. The pool balls have only the numbers 1, 2, and 3, and a sample mean can have one of only five possible values.

There is an alternative way of conceptualizing a sampling distribution that will be useful for more complex distributions. Imagine that two balls are sampled (with replacement), and the mean of the two balls is computed and recorded. This process is repeated for a second sample, a third sample, and eventually thousands of samples. After thousands of samples are taken and the mean is computed for each, a relative frequency distribution is drawn. The more samples, the closer the relative frequency distribution will come to the sampling distribution shown in the above figure. As the number of samples approaches infinity, the frequency distribution will approach the sampling distribution. This means that you can conceive of a sampling distribution as being a frequency distribution based on a very large number of samples. To be strictly correct, the sampling distribution only equals the frequency distribution exactly when there is an infinite number of samples.
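The repeated-sampling view can be sketched as a small simulation. The function name, the seed, and the choice of 100,000 samples are assumptions made for this illustration; the exact probabilities come from the nine equally likely outcomes of the pool ball example.

```python
import random
from collections import Counter

def simulate_relative_frequencies(num_samples, seed=0):
    """Draw two balls with replacement num_samples times and tally the means."""
    rng = random.Random(seed)  # fixed seed so the run is repeatable
    counts = Counter()
    for _ in range(num_samples):
        a = rng.choice([1, 2, 3])
        b = rng.choice([1, 2, 3])
        counts[(a + b) / 2] += 1
    return {m: counts[m] / num_samples for m in sorted(counts)}

# Exact sampling distribution of the mean for two balls.
exact = {1.0: 1 / 9, 1.5: 2 / 9, 2.0: 3 / 9, 2.5: 2 / 9, 3.0: 1 / 9}

approx = simulate_relative_frequencies(100_000)

# Largest gap between the simulated relative frequency and the exact probability.
max_gap = max(abs(approx[m] - exact[m]) for m in exact)
print(approx, max_gap)
```

Rerunning with a larger `num_samples` should shrink `max_gap`, which is the sense in which the frequency distribution approaches the sampling distribution.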

7.3.4: Continuous Sampling Distributions

When we have a truly continuous distribution, it is not only impractical but actually impossible to enumerate all possible outcomes.

Learning Objective

Differentiate between discrete and continuous sampling distributions

Key Points

- In continuous distributions, the probability of obtaining any single value is zero.

- Therefore, these values are called probability densities rather than probabilities.

- A probability density function, or density of a continuous random variable, is a function that describes the relative likelihood for this random variable to take on a given value.

Key Term

- probability density function

-

any function whose integral over a set gives the probability that a random variable has a value in that set

In the previous section, we created a sampling distribution out of a population consisting of three pool balls. This distribution was discrete, since there were a finite number of possible observations. Now we will consider sampling distributions when the population distribution is continuous.

What if we had a thousand pool balls with numbers ranging from 0.001 to 1.000 in equal steps? Note that although this distribution is not really continuous, it is close enough to be considered continuous for practical purposes. As before, we are interested in the distribution of the means we would get if we sampled two balls and computed the mean of these two. In the previous example, we started by computing the mean for each of the nine possible outcomes. This would get a bit tedious for our current example since there are 1,000,000 possible outcomes (1,000 for the first ball multiplied by 1,000 for the second). Therefore, it is more convenient to use our second conceptualization of sampling distributions, which conceives of sampling distributions in terms of relative frequency distributions: specifically, the relative frequency distribution that would occur if samples of two balls were repeatedly taken and the mean of each sample computed.
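A simulation along these lines might look like the following sketch. The number of repetitions (50,000), the seed, and the ten equal-width bins are arbitrary choices made here for illustration, not part of the original example.

```python
import random

# One thousand balls numbered 0.001, 0.002, ..., 1.000.
balls = [k / 1000 for k in range(1, 1001)]

def sample_means(num_samples, seed=0):
    """Repeatedly draw two balls with replacement and record the mean."""
    rng = random.Random(seed)
    return [(rng.choice(balls) + rng.choice(balls)) / 2 for _ in range(num_samples)]

def relative_frequencies(values, num_bins=10):
    """Relative frequency of values in equal-width bins covering 0 to 1."""
    counts = [0] * num_bins
    for v in values:
        index = min(int(v * num_bins), num_bins - 1)  # a value of 1.000 goes in the last bin
        counts[index] += 1
    return [c / len(values) for c in counts]

means = sample_means(50_000)
hist = relative_frequencies(means)

for i, share in enumerate(hist):
    print(f"{i / 10:.1f} to {(i + 1) / 10:.1f}: {share:.3f}")
```

The middle bins should collect far more means than the outer ones, since many different pairs of balls average to a value near 0.5 while few average to a value near 0 or 1.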

Probability Density Function

When we have a truly continuous distribution, it is not only impractical but actually impossible to enumerate all possible outcomes. Moreover, in continuous distributions, the probability of obtaining any single value is zero. Therefore, these values are called probability densities rather than probabilities.

A probability density function, or density of a continuous random variable, is a function that describes the relative likelihood for this random variable to take on a given value. The probability for the random variable to fall within a particular region is given by the integral of this variable’s density over the region.
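To make the distinction between a density and a probability concrete, the sketch below integrates a standard normal density numerically. The normal distribution, the midpoint rule, and the interval from -1 to 1 are assumptions chosen for this illustration.

```python
import math

def normal_pdf(x, mu=0.0, sigma=1.0):
    """Density of a normal distribution with mean mu and standard deviation sigma."""
    z = (x - mu) / sigma
    return math.exp(-0.5 * z * z) / (sigma * math.sqrt(2 * math.pi))

def integrate(f, a, b, steps=10_000):
    """Midpoint-rule approximation of the integral of f from a to b."""
    width = (b - a) / steps
    return sum(f(a + (i + 0.5) * width) for i in range(steps)) * width

# The height of the curve at 0 is a density, not a probability.
density_at_zero = normal_pdf(0.0)

# Probability of falling in a region: the integral of the density over it.
prob_region = integrate(normal_pdf, -1.0, 1.0)

# A single point is a region of zero width, so its probability is zero.
prob_point = integrate(normal_pdf, 0.0, 0.0)

print(density_at_zero, prob_region, prob_point)
```

The density at zero is positive even though the probability of landing exactly on zero is zero; only regions with nonzero width carry probability.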

Probability Density Function

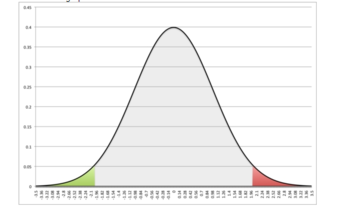

Boxplot and probability density function of a normal distribution.

7.3.5: Mean of All Sample Means ($\mu_{\bar{x}}$)

The mean of the distribution of differences between sample means is equal to the difference between population means.

Learning Objective

Discover that the mean of the distribution of differences between sample means is equal to the difference between population means

Key Points

- Statistical analyses are very often concerned with the difference between means.

- The mean of the sampling distribution of the difference between means is $\mu_{M_1 - M_2} = \mu_1 - \mu_2$.

- The variance sum law states that the variance of the sampling distribution of the difference between means is equal to the variance of the sampling distribution of the mean for Population 1 plus the variance of the sampling distribution of the mean for Population 2.

Key Term

- sampling distribution

-

The probability distribution of a given statistic based on a random sample.

Statistical analyses are, very often, concerned with the difference between means. A typical example is an experiment designed to compare the mean of a control group with the mean of an experimental group. Inferential statistics used in the analysis of this type of experiment depend on the sampling distribution of the difference between means.

The sampling distribution of the difference between means can be thought of as the distribution that would result if we repeated the following three steps over and over again:

- Sample $n_1$ scores from Population 1 and $n_2$ scores from Population 2;

- Compute the means of the two samples ($M_1$ and $M_2$);

- Compute the difference between means, $M_1 - M_2$.

The distribution of the differences between means is the sampling distribution of the difference between means.

The mean of the sampling distribution of the difference between means is:

$$\mu_{M_1 - M_2} = \mu_1 - \mu_2,$$

which says that the mean of the distribution of differences between sample means is equal to the difference between population means. For example, say that the mean test score of all 12-year-olds in a population is 34 and the mean of 10-year-olds is 25. If numerous samples were taken from each age group and the mean difference computed each time, the mean of these numerous differences between sample means would be 34 – 25 = 9.
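The three sampling steps and the test score example can be sketched as a simulation. The population means 34 and 25 come from the text; the normal shape, the common standard deviation of 5, the sample sizes of 10, and the 5,000 repetitions are hypothetical values assumed only for this illustration.

```python
import random
import statistics

def mean_of_differences(mu1, mu2, sigma, n1, n2, repetitions, seed=0):
    """Repeat the sample, average, subtract procedure and average the differences."""
    rng = random.Random(seed)
    diffs = []
    for _ in range(repetitions):
        # Step 1 and 2: sample from each population and compute the two sample means.
        m1 = statistics.fmean(rng.gauss(mu1, sigma) for _ in range(n1))
        m2 = statistics.fmean(rng.gauss(mu2, sigma) for _ in range(n2))
        # Step 3: record the difference between the sample means.
        diffs.append(m1 - m2)
    return statistics.fmean(diffs)

average_difference = mean_of_differences(
    mu1=34, mu2=25, sigma=5, n1=10, n2=10, repetitions=5_000
)
print(average_difference)
```

The printed value should land close to 9, and it should move closer still as `repetitions` grows.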

The variance sum law states that the variance of the sampling distribution of the difference between means is equal to the variance of the sampling distribution of the mean for Population 1 plus the variance of the sampling distribution of the mean for Population 2. The formula for the variance of the sampling distribution of the difference between means is as follows:

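$$\sigma^2_{M_1 - M_2} = \sigma^2_{M_1} + \sigma^2_{M_2} = \frac{\sigma_1^2}{n_1} + \frac{\sigma_2^2}{n_2}$$

Here $\sigma_1^2$ and $\sigma_2^2$ are the variances of the two populations, and $n_1$ and $n_2$ are the sizes of the two samples.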

Recall that the standard error of a sampling distribution is the standard deviation of the sampling distribution, which is the square root of the above variance.

Let’s look at an application of this formula to build a sampling distribution of the difference between means. Assume there are two species of green beings on Mars. The mean height of Species 1 is 32, while the mean height of Species 2 is 22. The variances of the two species are 60 and 70, respectively, and the heights of both species are normally distributed. You randomly sample 10 members of Species 1 and 14 members of Species 2.

The difference between means comes out to be 10, and the standard error comes out to be 3.317.

$$\mu_{M_1 - M_2} = 32 - 22 = 10$$

$$\sigma_{M_1 - M_2} = \sqrt{\frac{60}{10} + \frac{70}{14}} = \sqrt{11} \approx 3.317$$

The resulting sampling distribution, diagrammed in the figure below, is normally distributed with a mean of 10 and a standard deviation of 3.317.
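The arithmetic for the Mars example can be verified with a few lines of code. The function name `se_of_difference` is introduced here for illustration; the numbers are the ones given in the example.

```python
import math

def se_of_difference(var1, n1, var2, n2):
    """Standard error of the difference between two independent sample means."""
    return math.sqrt(var1 / n1 + var2 / n2)

# Species 1: mean 32, variance 60, sample of 10.
# Species 2: mean 22, variance 70, sample of 14.
mean_difference = 32 - 22
standard_error = se_of_difference(60, 10, 70, 14)

print(mean_difference, round(standard_error, 3))  # 10 3.317
```

The two variance terms are 6 and 5, so the variance of the difference is 11 and the standard error is its square root.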

Sampling Distribution of the Difference Between Means

The distribution is normally distributed with a mean of 10 and a standard deviation of 3.317.

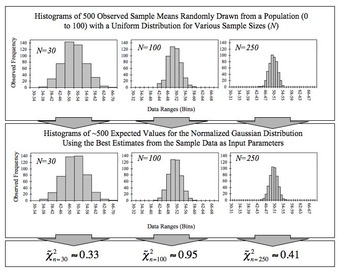

7.3.6: Shapes of Sampling Distributions

The overall shape of a sampling distribution is expected to be symmetric and approximately normal.

Learning Objective

Give examples of the various shapes a sampling distribution can take on

Key Points

- The concept of the shape of a distribution refers to the shape of a probability distribution.

- It most often arises in questions of finding an appropriate distribution to use to model the statistical properties of a population, given a sample from that population.

- A sampling distribution is assumed to have no outliers or other important deviations from the overall pattern.

- When calculated from the same population, the sample median has a different sampling distribution to that of the mean and is generally not normal; although, it may be close for large sample sizes.

Key Terms

- normal distribution

-

A family of continuous probability distributions such that the probability density function is the normal (or Gaussian) function.

- skewed

-