Learning Objectives

- Describe operational and adaptive definitions of learning

- Relate classical and instrumental conditioning to prediction and control

- Describe acquisition, extinction, and spontaneous recovery, showing how the phenomenon of spontaneous recovery indicates that extinction does not result in unlearning

- Describe how research findings related to Pavlov’s stimulus substitution model of classical conditioning resulted in the current predictive learning model

- Describe how classical conditioning relates to the acquisition of word meaning, prejudice, and emotional reactions

- Describe Skinner’s and the adaptive learning schemas of control learning

- Provide examples of short-term and long-term stimulus-response chains

- Describe Skinner’s schema for categorizing intermittent schedules of reinforcement

Predictive Learning and Human Potential

Mostly Nurture: The Role of Learning in Fulfilling One’s Potential

Psychology studies how heredity (nature) and experience (nurture) interact to influence behavior. In the previous chapter, we related Maslow’s hierarchy of human needs to very different human conditions. Whether we are growing up in the rain forest or in a technologically enhanced urban setting, the bottom of the pyramid remains the same. We need to eat and drink and require protection from the elements. Deprivation of food or water will result in our becoming more active as we search for the needed substance. Unpleasant weather conditions will result in our becoming more active to remove the source of unpleasantness. Our senses enable us to detect appetitive and aversive stimuli in our internal and external environments. Our physical structure enables us to move, grasp, and manipulate objects. Our nervous system connects our sensory and motor systems.

Human beings inherit some sensory-motor connections that enhance the likelihood of our survival. Infants inherit two reflexes that increase the likelihood of successful nursing. A reflex is a simple inherited behavior characteristic of the members of a species. Human infants inherit rooting and sucking reflexes. If a nipple is placed in the corner of an infant’s mouth, the infant will turn its head to center the nipple (i.e., root). The infant will then suck on a nipple in the center of its mouth. Birth mothers’ breasts fill with milk and swell, resulting in discomfort that is relieved by an infant’s nursing. This increases the likelihood that the mother will attempt to nurse the infant. This happy combination of inherited characteristics has enabled human infants to survive throughout the millennia.

Human mothers eventually stop producing milk, and human infants eventually require additional nutrients in order to survive. This creates the need to identify and locate sources of nutrients. Humans started out in Africa and have migrated to practically every land area on Earth. Given the variability in types of food and their locations, humans could not depend upon the very slow process of biological evolution to identify and locate nutrients. We cannot inherit reflexes to address all the possibilities. Another, more rapid and flexible type of adaptive sensory-motor mechanism must be involved.

We previously described foraging trips conducted by members of the Nukak tribe. Their foods consisted of fruits, honey, and small wild animals, including fish and birds. The Nukak changed locations every few days in order to locate new food supplies. Where they settled and where they searched for food changed with the seasons. Hunting and gathering included the use of tools assembled from natural materials. Clearly, prior experience (i.e., nurture) affected their behavior. This is what we mean by learning.

Operational Definition of Learning

All sciences rely upon operational definitions in order to establish a degree of consistency in the use of terminology. Operational definitions describe the procedures used to measure the particular term. One does not directly observe learning. It has to be inferred from observations of behavior. The operational definition describes how one objectively determines whether a behavioral observation is an example of the process.

The most common operational definitions of learning are variations on the one provided in Kimble’s revision of Hilgard and Marquis’ Conditioning and Learning (1961). According to Kimble, “Learning is a relatively permanent change in behavior potentiality which occurs as a result of practice.” Let us parse this definition. First, learning is inferred only when we see a change in behavior resulting from appropriate experience. Other possible causes of behavior change are excluded, including maturation, which is non-experiential. Fatigue and drugs are also excluded because they do not produce “relatively permanent” changes. Kimble includes the word “potentiality” after behavior to emphasize that even if learning has occurred, this does not guarantee a corresponding behavior change.

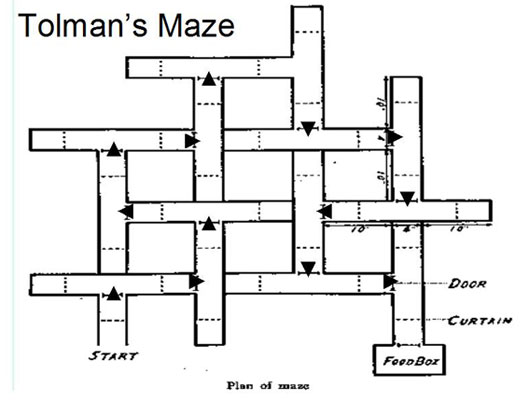

The idea that prior learning may not be reflected in performance comes from a classic experiment conducted by Tolman and Honzik in 1930. They studied laboratory rats under conditions resembling the hunting and gathering of the Nukak. Three different groups were placed in a complex maze, and the number of errors (i.e., wrong turns) was recorded (see Figure 5.1).

Figure 5.1. Maze used in Tolman and Honzik’s 1930 study with rats (Jensen, 2006).

A Hungry No Reward (HNR) group was simply placed in the start box and removed from the maze after reaching the end. A Hungry Reward (HR) group received food at the end and was permitted to eat prior to being removed. The third group, Hungry No Reward → Reward (HNR-R), began the same as the HNR group and was switched to being treated the same as the HR group after ten days.

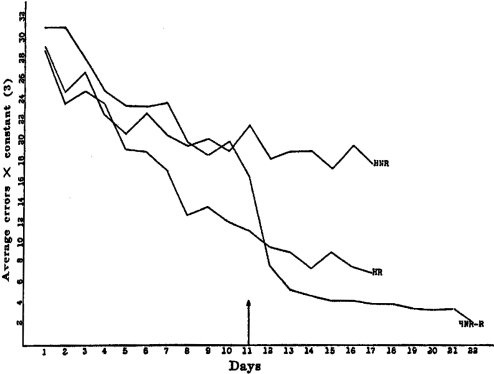

Before considering the third group, let us see how the results for the first two enable us to conclude that learning occurred in the HR group (see Figure 5.2). The HNR and HR groups were treated the same with one exception: the HR group received food at the end. Therefore, if the results differ, we can conclude that it must be this experience that made the difference. The average number of errors for the HNR group did not drop significantly below chance performance over the course of the experiment. In comparison, the HR group demonstrated a steady and substantial decline in errors, exactly the pattern one would expect if learning were occurring. This decline in errors as the result of experience fulfills the operational definition of learning. Without the HNR control condition, it would not be possible to conclude that the experience made a difference in the HR group; one could argue that something else was responsible for the decline (e.g., a change in the lab conditions, maturation, etc.).

Figure 5.2. Results from Tolman & Honzik’s study (Jensen, 2006).

It is possible to conclude that the rewarded group learned the maze based upon a comparison of its results with those of the no reward group. A related, seemingly logical conclusion would be that the group not receiving food failed to learn the maze. Tolman and Honzik’s third group was treated like the HNR group for the first 10 days and like the HR group for the remaining days. This group made it possible to test whether the absence of food resulted in the absence of learning. It is important to understand the rationale for this condition. If the HNR-R group had not learned anything about the maze during the first 10 days, its errors would be expected to decline gradually from then on, the pattern demonstrated from the start by the HR condition. However, if the HNR-R group had been learning the maze, a more dramatic decline in errors would be expected once the food was introduced. This dramatic decline in errors is indeed what occurred, leading to the conclusion that the rats had learned the maze even though this learning was not evident in their behavior. This result has been described as “latent learning” (i.e., learning that is not reflected in performance). Learning is but one of several factors affecting how an individual behaves. Tolman and Honzik’s results imply that incentive motivation (food in this instance) was necessary for the animals to display what they had learned. Thus, we see the need to include the word “potentiality” in the operational definition of learning. During the first 10 days, the rats clearly acquired the potential to negotiate the maze. These results may remind you of those cited in Chapter 1 with young children. You may recall that some scored higher on IQ tests when they received extrinsic rewards for correct answers. Just like Tolman and Honzik’s rats, they had the potential to perform better but needed an incentive.

Learning as an Adaptive Process

The operational definition tells us how to measure learning but does not tell us what is learned or why it is important. I attempted to address this by defining learning as an adaptive process whereby individuals acquire the ability to predict and control the environment (Levy, 2013). There is nothing the Nukak can do to make it rain or to stop it from raining. Over time, however, they may be able to use environmental cues such as dark skies, or perhaps even cues related to the passage of time, to predict the occurrence of rain. The Nukak can control the likelihood of discovering food by exploring their environment. They can obtain fruit from trees by reaching for and grasping it. The abilities to predict rain and obtain food certainly increase the likelihood of survival for the Nukak. That is, these abilities are adaptive.

The adaptive learning definition helps explain why we now turn our attention to two famous researchers whose contributions have enormously influenced the study of learning for decades: Ivan Pavlov and B. F. Skinner. Pavlov’s procedures, called classical conditioning, investigated learning under circumstances where it was possible to predict events but not control them. Skinner investigated learning under circumstances where control was possible. These two researchers created apparatuses and experimental procedures to study the details of adaptive learning. They identified many important learning phenomena and introduced technical vocabularies that have stood the test of time. We will describe Pavlov’s contributions to the study of predictive learning in this chapter and Skinner’s contributions to the study of control learning in the next chapter.

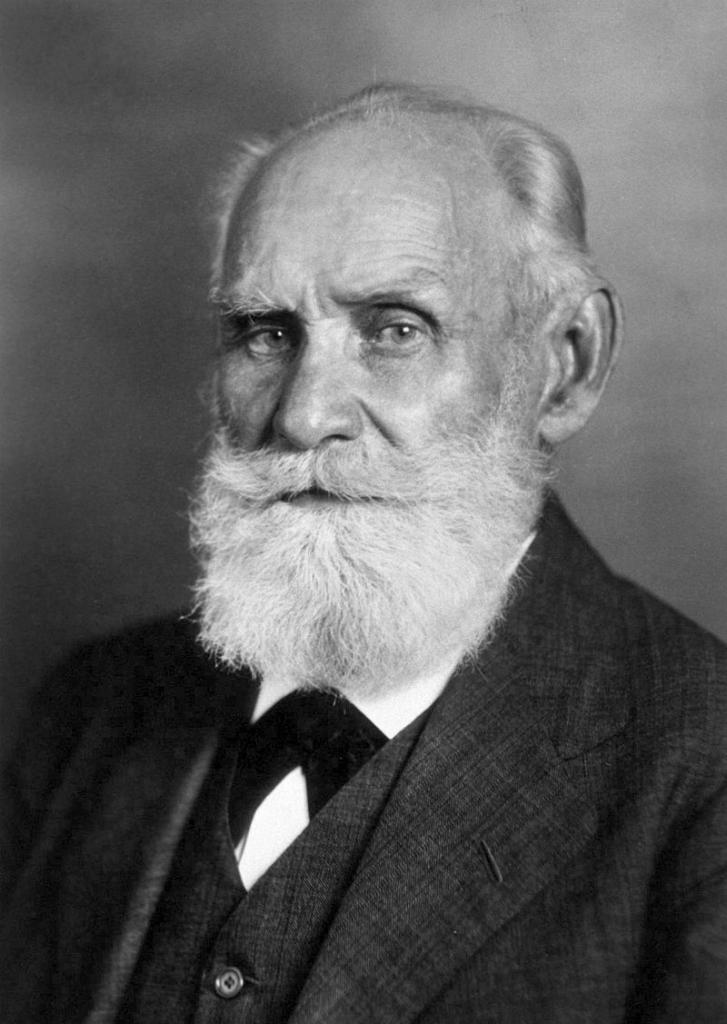

Figure 5.3 Ivan Pavlov.

One cannot overstate the significance of the contributions Ivan Pavlov made to the study of predictive learning. Pavlov brought to animal learning a rigor and precision in measuring both the independent and dependent variables that did not exist at the time. In 1904, Pavlov, a physiologist, was awarded the Nobel Prize in Physiology or Medicine for his research investigating the digestive process in dogs. He became fascinated by an observation he and his laboratory assistants made while conducting this research. One of the digestive processes they studied was salivation. Saliva contains enzymes that initiate the process of breaking down what one eats into the basic nutrients required to fuel and repair the body. The dogs frequently started salivating before being placed in the experimental apparatus. Pavlov described this salivation as a “psychic secretion” since it was not being directly elicited by food. He considered the phenomenon so important that within a few years he abandoned his research program in digestion and dedicated the rest of his professional career to systematically studying the details of this basic learning process.

This is a wonderful example of serendipity, or accidental discovery, in science. Dogs have been domesticated for thousands of years. Countless people probably observed dogs appearing to predict (i.e., anticipate or expect) food. Pavlov, however, recognized the significance of the observation as an example of a fundamental learning process. We often think of science as requiring new observations. Pavlov’s “discovery” of the classical conditioning process shows that this is not necessarily the case. One of the characteristics of an exceptional scientist is the ability to recognize the significance of commonly occurring observations.

We will now review the apparatus, methods, and terminology Pavlov developed for studying predictive learning. He adapted an experimental apparatus designed for one scientific field of inquiry (the physiology of digestion) to an entirely different field (adaptive learning). Pavlov made a small surgical incision in the dog’s cheek and implanted a tube permitting saliva to be directly collected in a graduated test tube. The amount of saliva could then be accurately measured and graphed as depicted in Figure 5.3. Predictive learning was inferred when salivation occurred to a previously neutral stimulus as the result of appropriate experience.

Animals inherit the tendency to make simple responses (i.e., reflexes) to specific types of stimulation. Pavlov’s salivation research was based on the reflexive eliciting of salivation by food (e.g., meat powder). This research was adapted to the study of predictive learning by including a neutral stimulus. By neutral, we simply mean that this stimulus did not initially elicit any behavior related to food. Pavlov demonstrated that if a neutral stimulus preceded a biologically significant stimulus on several occasions, one would see a new response occurring to the previously neutral stimulus. Figure 5.4 uses the most popular English translation of the terminology of Pavlov, who wrote in Russian. The reflexive behavior was referred to as the unconditioned response (UR). The stimulus that reflexively elicited this response was referred to as the unconditioned stimulus (US). A novel stimulus, by virtue of being paired in a predictive relationship with the food (US), acquires the capacity to elicit a food-related, conditioned response (CR). Once it has acquired this capacity, the novel stimulus is considered a conditioned stimulus (CS).

Figure 5.4 Pavlov’s Classical Conditioning Procedures and Terminology.

Basic Predictive Learning Phenomena

In Chapter 1, we discussed the assumption of determinism as it applied to the discipline of psychology. If predictive learning is a lawful process, controlled empirical investigation has the potential to establish reliable cause-effect relationships. We will see this is the case as we review several basic classical conditioning phenomena. Many of these phenomena were discovered and named by Pavlov himself, starting with the acquisition process described above.

Acquisition

The term acquisition refers to a procedure or process whereby one stimulus is presented in a predictive relationship with another stimulus. Predictive learning (classical conditioning) is inferred from the occurrence of a new response to the first stimulus. Keeping in mind that mentalistic terms are inferences based upon behavioral observations, it is as though the individual learns to predict that if this happens, then that happens.

Extinction

The term extinction refers to a procedure or process whereby a previously established predictive stimulus is no longer followed by the second stimulus. This typically results in a weakening of the previously learned response. It is as though the individual learns that what used to happen doesn’t happen anymore. Extinction is commonly misused as a term describing only the result of the procedure or process. That is, it is often used like the term schizophrenia, which is defined exclusively on the dependent variable (symptom) side. Extinction is actually more like influenza, in that it is a true explanation standing for the relationship between a specific independent variable (the procedure) and a dependent variable (the change in behavior).

Spontaneous Recovery

The term spontaneous recovery refers to an increase in the strength of the previously learned response after an extended period of time elapses between extinction trials. The individual acts as though what used to happen perhaps still does.

Is Extinction Unlearning or Inhibitory Learning?

Pavlov was an excellent example of someone who today would be considered a behavioral neuroscientist. In fact, the full title of his classic book (1927) is Conditioned reflexes: An investigation of the physiological activity of the cerebral cortex. Behavioral neuroscientists study behavior in order to infer underlying brain mechanisms. Thus, Pavlov did not perceive himself as converting from a physiologist into a psychologist when he abandoned his study of digestion to explore the intricacies of classical conditioning. As implied by his “psychic secretion” metaphor, he believed he was continuing to study physiology, turning his attention from studying the digestive system to studying the brain.

One question of interest to Pavlov was the nature of the extinction process. Pavlov assumed that acquisition produced a connection between a sensory neuron representing the conditioned stimulus and a motor neuron eliciting salivation. The reduction in responding resulting from the extinction procedure could result from either breaking this bond (i.e., unlearning) or counteracting it with a competing response. The fact that spontaneous recovery occurs indicates that the bond is not broken during the extinction process. Extinction must involve learning an inhibitory response counteracting the conditioned response. The individual appears to learn that one stimulus no longer predicts another. The conclusion that extinction does not permanently eliminate a previously learned association has important practical and clinical implications. It means that someone who has received treatment for a problem and improved is not in the same position as someone who never required treatment in the first place (cf. Bouton, 2000; Bouton and Nelson, 1998). For example, even if someone has quit smoking, there is a greater likelihood of that person’s relapsing than of a non-smoker’s acquiring the habit.

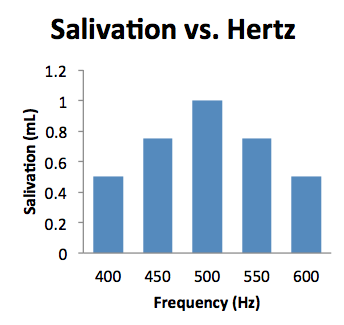

Stimulus Generalization and Discrimination

Just imagine if you had to learn to make the same response over and over again to each new situation. Fortunately, this is often not necessary. Stimulus generalization refers to the fact that a previously acquired response will occur in the presence of stimuli other than the original one, the likelihood being a function of the degree of similarity. In Figure 5.5, we see that a response learned to a 500 Hz frequency tone occurs to other stimuli, the percentage of times depending upon how close the frequency is to 500. It is as though the individual predicts what happens after one event will happen after similar events.

Figure 5.5 Stimulus generalization gradient.
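The generalization gradient described above can be sketched as a function that maps each test tone to a response probability, peaking at the trained 500 Hz tone and falling off with distance from it. This is only an illustration under stated assumptions: the bell-shaped (Gaussian) form, the peak probability, and the width are hypothetical values chosen for the example, not parameters taken from the study behind Figure 5.5, and real gradients need not be symmetric or Gaussian.

```python
import math

# Hypothetical parameters chosen for illustration only; they are not
# taken from the data shown in Figure 5.5.
TRAINED_HZ = 500.0     # frequency of the original conditioned stimulus
PEAK_RESPONSE = 0.9    # assumed response probability at the trained tone
WIDTH_HZ = 100.0       # assumed spread of generalization (standard deviation)


def response_probability(test_hz: float) -> float:
    """Assumed Gaussian gradient: responding falls off as the test tone
    becomes less similar (further away in Hz) to the trained tone."""
    distance = test_hz - TRAINED_HZ
    return PEAK_RESPONSE * math.exp(-(distance ** 2) / (2 * WIDTH_HZ ** 2))


if __name__ == "__main__":
    for hz in (200, 300, 400, 500, 600, 700, 800):
        print(f"{hz:4d} Hz -> {response_probability(hz):.2f}")
```

Narrowing `WIDTH_HZ` would mimic a sharper gradient, such as one produced by discrimination training, in which responding is confined more tightly to the S+.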

The fact that generalization occurs significantly increases the efficiency of individual learning experiences. However, there are usually limits on the appropriateness of making the same response in different situations. For example, new fathers often beam the first time they hear their infant say “dada.” They are less thrilled when they hear their child call the mailman “dada!” Usually it is necessary to conduct additional teaching so that the child only says “dada” in the presence of the father. Stimulus discrimination occurs when one stimulus (the S+, e.g., a tone or the father) is predictive of a second stimulus (e.g., food or the word “dada”) but a different stimulus (the S-, e.g., a light or the mailman) is never followed by that second stimulus. Eventually the individual responds to the S+ (tone or father) and not to the S- (light or mailman), as though learning that if this happens then that happens, but if this other thing happens that does not happen.

Pavlov’s Stimulus Substitution Model of Classical Conditioning

For most of the 20th century, Pavlov’s originally proposed stimulus substitution model of classical conditioning was widely accepted. Pavlov viewed conditioning as a mechanistic (automatic) result of pairing neutral and biologically significant events in time. He believed that the established conditioned stimulus became a substitute for the original unconditioned stimulus. There were four assumptions underlying this stimulus substitution model:

- Classical conditioning requires a biologically significant stimulus (i.e., US)

- Temporal contiguity between a neutral stimulus and unconditioned stimulus is necessary for the neutral stimulus to become a conditioned stimulus

- Temporal contiguity between a neutral stimulus and unconditioned stimulus is sufficient for the neutral stimulus to become a conditioned stimulus

- The conditioned response will always resemble if not be identical to the unconditioned response.

Does Classical Conditioning Require a Biologically Significant Stimulus?

Higher-order conditioning is a procedure or process whereby a previously neutral stimulus is presented in a predictive relationship with a second, previously established, predictive stimulus. Learning is inferred from the occurrence of a new response in the presence of this previously neutral stimulus. For example, after pairing the tone with food, it is possible to place the tone in the position of the US by presenting a light immediately before it occurs. Research indicates that a conditioned response (salivation in this case) will occur to the light even though it was not paired with a biologically significant stimulus (food).

Is Temporal Contiguity Necessary for Conditioning?

Human beings have speculated about the learning process since at least the time of the early Greek philosophers. Aristotle, in the fourth century B.C., proposed three laws of association that he believed applied to human thought and memory. The law of contiguity stated that objects or events occurring close in time (temporal contiguity) or space (spatial contiguity) became associated. The law of similarity stated that we tended to associate objects or events having features in common, such that observing one event will prompt recall of similar events. The law of frequency stated that the more often we experienced objects or events, the more likely we would be to remember them. In a sense, Pavlov created a methodology permitting empirical testing of Aristotle’s laws. The law that applies in this section is the law of temporal contiguity. Timing effects, like many variables studied scientifically, lend themselves to parametric studies in which the independent variable consists of different values on a dimension. It has been demonstrated that human eyelid conditioning, in which a light is followed by a puff of air to the eye, is strongest when the puff occurs approximately 500 milliseconds (½ second) after the light. The strength of conditioning drops off when the interval is shorter or longer by only tenths of a second. Thus, temporal contiguity appears critical in human eyelid conditioning, consistent with Pavlov’s second assumption.

An Exception – Acquired Taste Aversion

Acquired taste aversion is the only apparent exception to the necessity of temporal contiguity in predictive learning (classical conditioning). This exception can be understood as an evolutionary adaptation to protect animals from food poisoning. Just imagine if members of the Nukak got sick after eating a particular food and continued to eat the same substance. There is a good chance the tribe members (and tribe!) would not survive for long. It would be advantageous to avoid foods one ate prior to becoming ill, even if the symptoms did not appear for several minutes or even hours. The phenomenon of acquired taste aversion has been studied extensively. The time intervals used sometimes differ by hours rather than seconds or tenths of seconds. For example, rats were made sick by being exposed to X-rays after drinking sweet water (Smith & Roll, 1967). Rats have a strong preference for sweet water, drinking it approximately 80 per cent of the time when given a choice between it and ordinary tap water. If the rat became sick within a half hour, sweet-water drinking was totally eliminated. With intervals of 1 to 6 hours, it was reduced from 80 to 10 per cent. There was even evidence of an effect after a 24-hour delay! Pavlov’s dogs would not associate a tone with presentation of food an hour later, let alone 24 hours later. The acquired aversion to sweet water can be interpreted either as an exception to the law of temporal contiguity or as evidence that contiguity must be considered on a time scale of a different order of magnitude (hours rather than seconds).

Is Temporal Contiguity Sufficient for Conditioning?

Pavlov believed not only that temporal contiguity between CS and US was necessary for conditioning to occur; he also believed that it was all that is necessary (i.e., that it was sufficient). Rescorla (1966, 1968, 1988) has demonstrated that the correlation between CS and US (i.e., the extent to which the CS predicts the US) is more important than temporal contiguity. For example, if the only time one gets shocked is in the presence of the tone, then the tone correlates with shock (i.e., is predictive of the shock). If one is shocked the same amount whether the tone is present or not, the tone does not correlate with shock (i.e., provides no predictive information). Rescorla demonstrated that despite temporal contiguity between tone and shock in both instances, classical conditioning would be strong in the first case and not occur in the second.
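One common way to express the "correlation" in Rescorla's example is to compare the probability of shock when the tone is present with the probability of shock when it is absent, a difference often written as ΔP. The sketch below computes that difference from trial counts; the function name `delta_p` and all of the counts are hypothetical, chosen so that both cases contain the same number of tone-shock pairings (equal temporal contiguity) but differ in how much the tone predicts shock.

```python
from fractions import Fraction


def delta_p(us_with_cs: int, cs_periods: int,
            us_without_cs: int, no_cs_periods: int) -> Fraction:
    """Contingency Delta-P = P(US | CS) - P(US | no CS).

    Positive values mean the CS signals an increased chance of the US;
    zero means the CS carries no predictive information about the US.
    """
    p_us_given_cs = Fraction(us_with_cs, cs_periods)
    p_us_given_no_cs = Fraction(us_without_cs, no_cs_periods)
    return p_us_given_cs - p_us_given_no_cs


if __name__ == "__main__":
    # Hypothetical case 1: shock occurs only when the tone is present.
    print(float(delta_p(8, 20, 0, 20)))  # 0.4
    # Hypothetical case 2: shock is equally likely with or without the tone.
    print(float(delta_p(8, 20, 8, 20)))  # 0.0
```

In both cases the tone is paired with shock 8 times, so a pure contiguity account predicts equal conditioning; the contingency values differ, matching Rescorla's finding that conditioning is strong only in the first case.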

Another example of the lack of predictive learning despite temporal contiguity between two events is provided by a study by Leon Kamin (1969). In the first phase, a blocking group received a tone (CS 1) followed by shock (US), and a control group was simply placed in the chamber (see Figure 5.13). The groups were treated identically from then on. During the second phase, a compound stimulus consisting of the tone and a light (CS 2) was followed by shock. During a test phase, each component was presented by itself to determine the extent of conditioning.

In the blocking group, conditioning occurred to the tone and not the light. Conditioning occurred to both elements of the compound in the control group. It is as though the prior experience with the tone resulted in the blocking group subjects not paying attention to the light in the second phase. The light was redundant. It did not provide additional information.

A novel and fun demonstration of blocking in college students involved a computerized video game (Arcediano, Matute, and Miller, 1997). Subjects tried to protect the earth from invasion by Martians with a laser gun (the space bar). Unfortunately, the enterprising Martians had developed an anti-laser shield. If the subject fired when the shield was in place, the laser gun would be ineffective, permitting a bunch of Martians to land and do their mischief. For subjects in the blocking group, a flashing light preceded activation of the laser shield. A control group did not experience a predictive stimulus for the laser shield. Subsequently, both groups experienced a compound stimulus consisting of the flashing light and a complex tone. The control group associated the tone with activation of the laser shield whereas, due to their prior history with the light, the blocking group did not. For them, the tone was redundant.

The blocking procedure demonstrates that temporal contiguity between events, even in a predictive relationship, is not sufficient for learning to occur. In the second phase of the blocking procedure, the compound stimulus precedes the US. According to Pavlov, since both components are contiguous with the US, both should become associated with it and eventually elicit CRs. The combination of Rescorla’s (1966) and Kamin’s (1969) findings leads to the conclusion that learning occurs when individuals obtain new information enabling them to predict events they were previously unable to predict. Kamin suggested that this occurs only when we are surprised. That is, as long as events are proceeding as expected, we do not learn. Once something unexpected occurs, individuals search for relevant information. Many of our activities may be described as “habitual” (Kirsch, Lynn, Vigorito, and Miller, 2004) or “automatic” (Aarts and Dijksterhuis, 2000). We have all had the experience of riding a bike or driving as though we are on “auto pilot.” We are not consciously engaged in steering as long as events are proceeding normally. Once something unexpected occurs, we snap to attention and focus on the immediate environmental circumstances. This provides the opportunity to acquire new information. This is a much more active and adaptive understanding of predictive learning than that provided by Pavlov’s stimulus substitution model (see Rescorla, 1988).
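Kamin's idea that learning occurs only when we are surprised can be made concrete with a prediction-error rule. The chapter does not present a formal model; the sketch below swaps in one well-known formalization, the Rescorla-Wagner model, in which every cue present on a trial changes its associative strength in proportion to the difference between what happened and what all present cues together predicted. The learning rate (0.3), the maximum strength (1.0), and the 20 trials per phase are hypothetical values, and the helper names `rescorla_wagner` and `kamin_design` are illustrative only.

```python
def rescorla_wagner(strengths, cues, us_present, trials, rate=0.3, lam=1.0):
    """Update associative strengths in place over identical trials.

    strengths: dict mapping cue name -> associative strength V
    cues: cues present on each trial
    us_present: whether the US (shock) follows the cues
    rate: combined learning-rate parameter (assumed value)
    lam: maximum strength the US can support (assumed value)
    """
    for _ in range(trials):
        target = lam if us_present else 0.0
        prediction = sum(strengths[c] for c in cues)
        surprise = target - prediction  # prediction error
        for c in cues:
            strengths[c] += rate * surprise
    return strengths


def kamin_design(blocking_group):
    """Hypothetical simulation of the two groups in Kamin's procedure."""
    strengths = {"tone": 0.0, "light": 0.0}
    if blocking_group:
        # Phase 1: tone alone followed by shock (control group skips this).
        rescorla_wagner(strengths, ["tone"], True, trials=20)
    # Phase 2: tone + light compound followed by shock, both groups.
    rescorla_wagner(strengths, ["tone", "light"], True, trials=20)
    # Test phase: report the strength of each component by itself.
    return strengths


if __name__ == "__main__":
    for label, group in (("blocking", True), ("control", False)):
        v = kamin_design(group)
        print(f"{label:8s} tone={v['tone']:.2f} light={v['light']:.2f}")
```

Because the tone already predicts shock by the end of phase 1, the compound trials produce almost no surprise and the light gains almost no strength, whereas in the control group the two cues share the available strength. The basic model treats extinction as a loss of strength, so it does not capture spontaneous recovery and should not be read as a complete account.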

Must The Conditioned Response Resemble the Unconditioned Response?

We will now examine the fourth assumption of that model, that the conditioned response always resembles the unconditioned response. Meat powder reflexively elicits salivation and Pavlov observed the same reaction to a conditioned stimulus predictive of meat powder. Puffs of air reflexively elicit eye blinks and taps on the knee elicit knee jerks. The conditioned responses are similar to the unconditioned responses in research involving puffs of air and knee taps as unconditioned stimuli. It is understandable that Pavlov and others believed for so long that the conditioned response must resemble if not be identical to the unconditioned response. However, Zener (1937) took movies of dogs undergoing salivary conditioning and disagreed with this conclusion. He observed, “Despite Pavlov’s assertions, the dog does not appear to be eating an imaginary food. It is a different response, anthropomorphically describable as looking for, expecting, the fall of food with a readiness to perform the eating behavior which will occur when the food falls.”

Kimble (1961, p. 54) offered the possible interpretation that “the function of the conditioned response is to prepare the organism for the occurrence of the unconditioned stimulus.” Research by Shepard Siegel (1975, 1977, 1984, 2005) has swung the pendulum toward widespread acceptance of this interpretation of the nature of the conditioned response. Siegel’s research involved administration of a drug as the unconditioned stimulus. For example, rats were injected with insulin in the presence of a novel stimulus (Siegel, 1975). Insulin is a drug that lowers blood sugar level and is often used to treat diabetes. Eventually, a conditioned response developed to the novel stimulus (now a CS). However, rather than decreasing, blood sugar level increased in the presence of the CS. Siegel described this increase as a compensatory response in preparation for the effect of insulin. He argued that it was similar to other homeostatic mechanisms designed to maintain optimal levels of biological processes (e.g., temperature, white blood cell count, fluid levels, etc.). Similar compensatory responses have been demonstrated with morphine, a drug having analgesic properties (Siegel, 1977), and with caffeine (Siegel, 2005). Siegel (2008) has gone so far as to suggest that “the learning researcher is a homeostasis researcher.”

Siegel has developed a fascinating and influential model of drug tolerance and overdose effects based upon his findings concerning the acquisition of compensatory responses (Siegel, 1983). He suggested that many so-called heroin overdoses are actually the result of the usual dosage being consumed in a different way or in a different environment. Such an effect has actually been demonstrated experimentally with rats. Whereas 34 percent of rats administered a higher than usual dosage of heroin in the same cage died, 64 percent administered the same dosage in a different cage died (Siegel, Hinson, Krank, & McCully, 1982). As an experiment, this study has high internal validity, but it obviously could not be replicated with human subjects. In a study with high external validity, Siegel interviewed survivors of suspected heroin overdoses. Most insisted they had taken the usual quantity but indicated that they had used a different technique or consumed the drug in a different environment (Siegel, 1984). This combination of results, one study high in internal validity and the other high in external validity, makes a compelling case for Siegel’s learning model of drug tolerance and overdose effects.

Drug-induced compensatory responses are consistent with the interpretation that the conditioned response constitutes preparation for the unconditioned stimulus. Combining this interpretation with the conclusions reached regarding the necessity of predictiveness for classical conditioning to occur leads to the following alternative to Pavlov’s stimulus substitution model: Classical conditioning is an adaptive process whereby individuals acquire the ability to predict future events and prepare for their occurrence.

Control Learning and Human Potential

Just as predictive learning had a pioneer at the turn of the 20th century in Ivan Pavlov, control learning had its own in Edward Thorndike. Whereas Pavlov would probably be considered a behavioral neuroscientist today, Thorndike would most likely be considered a comparative psychologist. He studied several different species of animals, including chicks, cats, and dogs, and published both his doctoral dissertation (1898) and a book (1911) entitled Animal Intelligence.

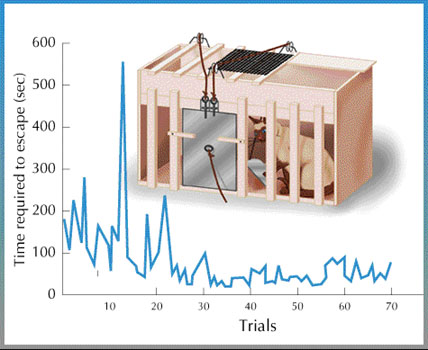

Figure 5.6 Thorndike’s puzzle box.

Thorndike created mazes and puzzle boxes for use with a variety of species, including fishes, cats, dogs, and chimpanzees (Imada & Imada, 1983). Figure 5.6 provides a sketch of a cat in a puzzle box that could be rigged up in a variety of ways. A sequence of responses (e.g., pulling a string, pressing a latch) was required in order to open the door leading to visible food. A reduction in the amount of time taken to open the door indicated that control learning had occurred.

Figure 5.7 B. F. Skinner.

B.F. Skinner (1938) was a second pioneer in the study of control learning. Like Pavlov and Thorndike, he developed an iconic apparatus (see Figure 5.8), the operant chamber, more popularly referred to as a Skinner box. In predictive learning, there is usually a connection between a biologically significant stimulus (e.g., food or shock) and the response being studied (e.g., salivation or an increase in heart rate). In control learning, the connection between the response and food is arbitrary. There is no genetic relationship between completing a maze or pressing a bar and the occurrence of food, whereas there is a genetic relationship between food and salivation.

Skinner boxes have many applications and are in widespread use not only to study adaptive learning, but also to study perception, motivation, animal cognition, psychophysiology, and psychopharmacology. Perhaps their best-known application is the study of different schedules of reinforcement. Unlike the subject in a maze or puzzle box, the subject in a Skinner box can make the response repeatedly. As we will see, an individual’s pattern of responding is sensitive to the pattern of consequences over an extended period of time.

Figure 5.8 Rat in a Skinner box.

As described above, research in predictive learning examines how individuals detect correlations between environmental events. Individuals acquire the ability to predict the occurrence or non-occurrence of appetitive or aversive stimuli. According to Skinner (1938), research in control learning (i.e., instrumental or operant conditioning) examines how individuals detect contingencies between their behavior and subsequent events (i.e., consequences). This is the same distinction made in Chapter 1 between correlational and experimental research. Correlational research involves systematic observation of patterns of events as they occur in nature. Experimental research requires active manipulation of nature in order to determine if there is an effect. It is as though the entire animal kingdom is composed of intuitive scientists, detecting correlations among events and manipulating the environment in order to determine cause and effect. This capability enables adaptation to our diverse environmental niches. Genetic evolution is far too slow for humans to rely upon it to adapt to modern conditions. We are like rats in a Skinner box, evaluating the effects of our behavior in order to adapt.

Skinner’s Contingency Schema

Another major contribution made by Skinner was the schema he developed to organize contingencies between behavior and consequences. He described four basic contingencies based on two considerations: whether the consequence involved adding or removing a stimulus, and whether the consequence resulted in an increase or decrease in the frequency of the preceding behavior (see Figure 5.9). The four possibilities are: positive reinforcement, in which adding a (presumably appetitive) stimulus increases the frequency of behavior; positive punishment, in which adding a (presumably aversive) stimulus decreases the frequency of behavior; negative reinforcement, in which removing a (presumably aversive) stimulus increases the frequency of behavior; and negative punishment, in which removing a (presumably appetitive) stimulus decreases the frequency of behavior.

Figure 5.9 Operant conditioning contingencies.

From a person’s perspective, positive reinforcement is what we ordinarily think of as receiving a reward for a particular behavior (If I do this, something good happens). Positive punishment is what we usually think of as punishment (If I do this, something bad happens). Negative reinforcement is often confused with punishment but, by definition, results in an increase in behavior. It is what we usually consider to be escaping or avoiding an aversive event (If I do this, something bad is removed, or If I do this, something bad does not happen). Examples of negative punishment would be response cost (e.g., a fine) or time out (If I do this, something good is removed, or If I do this, something good does not happen). Everyday examples would be: a child is given a star for cleaning up after playing and keeps cleaning up (positive reinforcement); a child is yelled at for teasing and the teasing decreases (positive punishment); a child raises an umbrella after it starts to rain, an example of escape, or before stepping out into the rain, an example of avoidance (both negative reinforcement); a child’s allowance is taken away for fighting (response cost), or a child is placed in the corner for fighting while others are permitted to play (time out), and fighting decreases (both negative punishment).
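Because Skinner’s schema rests on just two yes/no questions, it can be written as a small lookup. The following Python sketch is a hypothetical illustration (the names `Change`, `Effect`, and `classify_contingency` are invented for this example). Note that it classifies a contingency only by what happened to the stimulus and to the behavior, not by whether the stimulus seems pleasant; that is why the text describes the stimuli as only “presumably” appetitive or aversive.

```python
from enum import Enum


class Change(Enum):
    """First question: was a stimulus added or removed after the behavior?"""
    ADDED = "positive"
    REMOVED = "negative"


class Effect(Enum):
    """Second question: did the behavior become more or less frequent?"""
    INCREASED = "reinforcement"
    DECREASED = "punishment"


def classify_contingency(change: Change, effect: Effect) -> str:
    """Combine the answers to Skinner's two questions into one of four labels."""
    return f"{change.value} {effect.value}"


# The everyday examples from the text, coded by the two questions.
examples = [
    ("star given for cleaning up; cleaning up continues", Change.ADDED, Effect.INCREASED),
    ("yelled at for teasing; teasing decreases", Change.ADDED, Effect.DECREASED),
    ("umbrella raised; rain falling on the child stops", Change.REMOVED, Effect.INCREASED),
    ("allowance taken away for fighting; fighting decreases", Change.REMOVED, Effect.DECREASED),
]

for description, change, effect in examples:
    print(f"{description}: {classify_contingency(change, effect)}")
```

Running it labels the four examples, in order, as positive reinforcement, positive punishment, negative reinforcement, and negative punishment.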

Skinner’s hedonic motivation “carrots and sticks” schema of contingencies between behaviors and consequences is familiar and intuitive. These principles are arguably the most powerful explanatory tools the discipline of psychology has provided for human behavior. They have been applied with a diversity of individuals and groups (e.g., autistic children, schizophrenic adults, normal school children, etc.) in a diversity of settings (e.g., hospitals, schools, industry, etc.) for every conceivable behavior (toilet training, academic performance, wearing seat belts, etc., etc., etc.). We will consider examples of control learning applications later.

Skinner’s control learning schema may be expanded to include predictive learning, thus forming a more comprehensive adaptive learning overview (Levy, 2013). Individuals acquire the ability to predict and control the occurrence and non-occurrence of appetitive and aversive events. This adaptive learning overview provides an intuitively plausible, if simplistic, portrayal of the human condition. Some things feel good (like food) and some things feel bad (like shock). We are constantly trying to maximize feeling good and minimize feeling bad. This requires being able to predict, and where possible control, events in our lives. This is one way of answering the existential question: What’s it all about?

Basic Control Learning Phenomena

Acquisition

Acquisition of a control response is different from acquisition of a predictive response. In predictive learning, two correlated events are independent of the individual’s behavior. In control learning, a specific response is required in order for an event to occur. In predictive learning, the response that is acquired is related to the second event (e.g., a preparatory response such as salivation for food). In control learning, the required response is usually arbitrary. For example, there is no “natural” relationship between bar-pressing and food for a rat or between much of our behavior and its consequences (e.g., using knives and forks when eating). This raises the question: How does the individual “discover” the required behavior?

From an adaptive learning perspective, a Skinner box has much in common with Thorndike’s puzzle box. The animal is in an enclosed space and a specific arbitrary response is required to obtain an appetitive stimulus. Still, the two apparatuses pose different challenges and were used in different ways by the investigators. Thorndike’s cats and dogs could see and smell large portions of food outside the box. The food in the Skinner box is tiny and released from a mechanical device hidden from view. Thorndike was interested in acquisition of a single response and recorded the amount of time it took for it to be acquired. Skinner developed a way to speed up acquisition of the initial response and then recorded how different variables influenced its rate of occurrence.

Thorndike’s and Skinner’s subjects were made hungry by depriving them of food before placing them in the apparatus. Since Thorndike’s animals could see and smell the food outside the puzzle box, they were immediately motivated to determine how to open the door to get out. It usually took about 2 minutes for one of Thorndike’s cats to initially make the necessary response. In a Skinner box, by contrast, unless residue from a food pellet remains in the food magazine, there is no reason for a rat to engage in food-related behavior. One would need to be extremely patient to wait for a rat to discover that pressing the bar on the wall results in food being delivered to the food magazine.

In order to speed up this process, the animal usually undergoes magazine training, in which food pellets are periodically dropped into the food chamber (magazine). This procedure accomplishes two important objectives. First, because rats have an excellent sense of smell, they are likely to immediately discover the location of food. Second, the food delivery mechanism makes a distinct click that can become associated with the availability of food in the magazine, making it much easier for the animal to know when food is dispensed. Magazine training is completed when the rat, upon hearing the click, immediately goes to the food. Once magazine training is completed, it is possible to use the shaping procedure to “teach” bar pressing. This involves dispensing food after successive approximations to bar pressing. One would first wait until the rat is in the vicinity of the bar before providing food. Then the rat would need to be closer, center itself in front of the bar, lift its paw, touch the bar, and finally press the bar. Common examples of behaviors frequently established through shaping with humans are tying shoes, toilet training, bike riding, printing, reading, and writing.
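Shaping can be thought of as reinforcing any behavior that meets the current criterion and then raising the criterion one approximation at a time. The Python sketch below is a hypothetical illustration, not a laboratory protocol: the list of approximations follows the text, but the rule of raising the criterion after a fixed number of reinforcers (the invented parameter `reinforcers_per_step`) is an assumption; in practice the trainer judges when to move on.

```python
# Successive approximations to bar pressing, in the order given in the text.
APPROXIMATIONS = [
    "in vicinity of bar",
    "closer to bar",
    "centered in front of bar",
    "lifts paw",
    "touches bar",
    "presses bar",
]


def shape(observed, reinforcers_per_step=2):
    """Reinforce any behavior at or beyond the current criterion.

    After `reinforcers_per_step` reinforcers at one criterion, the criterion is
    raised to the next approximation (a hypothetical rule for illustration).
    Returns the reinforced behaviors and the final criterion.
    """
    criterion = 0   # index into APPROXIMATIONS
    earned = 0      # reinforcers delivered at the current criterion
    reinforced = []
    for behavior in observed:
        if APPROXIMATIONS.index(behavior) >= criterion:
            reinforced.append(behavior)  # click + food pellet
            earned += 1
            if earned == reinforcers_per_step and criterion < len(APPROXIMATIONS) - 1:
                criterion += 1
                earned = 0
        # Behaviors below the current criterion are simply not reinforced.
    return reinforced, APPROXIMATIONS[criterion]


observed = [
    "in vicinity of bar", "in vicinity of bar",
    "in vicinity of bar",  # no longer enough once the criterion is raised
    "closer to bar", "lifts paw",
    "closer to bar",       # not reinforced at the new criterion
]
reinforced, criterion = shape(observed)
print(reinforced)  # ['in vicinity of bar', 'in vicinity of bar', 'closer to bar', 'lifts paw']
print(criterion)   # centered in front of bar
```

Note how an approximation that earned food early on stops earning it once the criterion moves past it; this is what pushes the rat’s behavior toward the final bar press.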

In applied settings and the lab, it is possible to accelerate the shaping process by prompting the required behavior. A prompt is any stimulus that increases the likelihood of a desired response. It can be physical, gestural, or verbal. It is often effective to use these in sequence. For example, if you were trying to get a dog to roll over on command, you might start by saying “roll over” and then physically rolling the dog over. Then you might gradually eliminate the physical prompt (referred to as fading), saying “roll over” and using less and less force. This would be continued until you were no longer touching the dog, but simply gesturing. Imitative prompts, in which the gesture matches the desired response, are particularly common and effective with children (e.g., the game “peek-a-boo”). Getting back to our dog example, you could eventually use fading on the gesture as well. Then it would be sufficient to simply say the words “roll over.” The combination of shaping, prompting, and fading is a very powerful teaching strategy for non-verbal individuals. Once words have been acquired for all the necessary components of a skill, it can be taught exclusively through the use of language. For example, “Please clean up your room by putting your toys in the chest and your clothes in the dresser.” Skinner (1986) describes the importance of speech to human accomplishments and considers plausible environmental contingencies favoring the evolutionary progression from physical to gestural to verbal prompts. He emphasizes that “sounds are effective in the dark, around corners, and when listeners are not looking.” In the following chapter we will consider speech and language in greater depth.

Learned and Unlearned Appetitive and Aversive Stimuli

We share with the rest of the animal kingdom the need to eat and survive long enough to reproduce if our species is to continue. Similar to the distinction made in the previous chapter between primary and secondary drives, and Pavlov’s distinction between unconditioned stimuli (biologically significant events) and conditioned stimuli, Skinner differentiated between unconditioned reinforcers (and punishers) and conditioned reinforcers (and punishers). Things related to survival such as food, water, sexual stimulation, removal of pain, and temperature regulation are reinforcing as the result of heredity. We do not need to learn to “want” to eat, although we need to learn what to eat. However, what clearly differentiates the human condition from that of other animals, and our lives from the lives of the Nukak, is the number and nature of our conditioned (learned) reinforcers and punishers.

Early in infancy, children see smiles and hear words paired with appetitive events (e.g., nursing). We saw earlier how this would lead to visual and auditory stimuli acquiring meaning. These same pairings will result in the previously neutral stimuli becoming conditioned reinforcers. That is, children growing up in the Colombian rainforest or cities in the industrialized world will increase behaviors followed by smiles and pleasant sounds. The lives of children growing up in these enormously different environments will immediately diverge. Even the feeding experience will be different, with the Nukak child being nursed under changing, sometimes dangerous, and uncomfortable conditions while the developed world child is nursed or receives formula under consistent, relatively safe, and comfortable conditions.

In Chapter 1, we considered how important caring about one’s grades is to success in school. Grades have become powerful reinforcers that play a large role in your life, but not in the life of a Nukak child. Grades and money are examples of generalized reinforcers. They are paired with, or exchangeable for, a variety of other extrinsic and social reinforcers. As you grew up, grades have probably been paired with praise and perhaps with extrinsic rewards. They also provide information (feedback) concerning how well you are mastering material. Your country’s economy is a gigantic example of the application of generalized reinforcement.

It was previously mentioned that low-performing elementary-school students who receive tangible rewards after correct answers score higher on IQ tests than students simply instructed to do their best (Edlund, 1972; Clingman & Fowler, 1976). High-performing students do not demonstrate this difference. These findings were related to Tolman and Honzik’s (1930) latent learning study described previously. Evidently, the low-performing students had the potential to perform better on the tests but were not sufficiently motivated by the instructions alone. Without steps taken to address this motivational difference, it is likely that these students will fall further and further behind and not have the same educational and career opportunities as those who are taught when they are young to always do their best in school.

Parents can play an enormous role in helping their children acquire the necessary attitudes and skills to succeed in and out of school. It is not necessary to provide extrinsic rewards for performance, although such procedures definitely work when administered appropriately. We can use language and reasoning to provide valuable lessons such as “You get out of life what you put into it” and “Anything worth doing is worth doing well.” Doing well in school, including earning good grades, is one application of these more generic guiding principles. In Chapter 8, we will review Kohlberg’s model of moral development and discuss the importance of language as a vehicle to provide reasons for desired behavior.

Discriminative Stimuli and Warning Stimuli

The applied Skinnerian operant conditioning literature (sometimes called Applied Behavior Analysis or ABA, not to be confused with the reversal design with the same acronym) often refers to the ABCs: antecedents, behaviors, and consequences. Adaptation usually requires learning not only what to do, but also under what conditions (i.e., the antecedents) to do it. The very same behavior may have different consequences in different situations. For example, whereas your friends may pat you on the back and cheer as you jump up and down at a ball game, reactions will most likely be different if you behave in the same way at the library. A discriminative stimulus signals that a particular behavior will be reinforced (i.e., followed by an appetitive stimulus), whereas a warning stimulus signals that a particular behavior will be punished (i.e., followed by an aversive event). In the example above, the ballpark is a discriminative stimulus for jumping up and down, whereas the library is a warning stimulus for the same behavior.

Stimulus-Response Chains

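Note that the procedures that establish discriminative and warning stimuli are the same procedures that establish stimuli as conditioned reinforcers and punishers. Thus, the same stimulus may have more than one function. This is most apparent in a stimulus-response chain: a sequence of behaviors in which each response alters the environment, producing the discriminative stimulus for the next response. Each stimulus in the chain therefore serves both as a conditioned reinforcer for the response that produced it and as a discriminative stimulus for the response that follows.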

Our daily routines consist of many stimulus-response chains. For example:

- Using the phone: sight of phone – pick up receiver; if dial tone – dial, if busy signal – hang up; ring – wait; sound of voice – respond.

- Driving a car: sight of seat – sit; sight of keyhole – insert key; feel of key in ignition – turn key; sound of engine – put car in gear; feel of engaged gear – put foot on accelerator.
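The driving chain above can be sketched as a simple lookup in which each stimulus maps to the response it sets the occasion for and to the stimulus that response produces. This Python sketch is a hypothetical illustration (the names `DRIVING_CHAIN` and `run_chain` are invented here); it models an idealized, linear chain and does not capture branches such as the dial tone versus busy signal in the phone example, where the environment decides which stimulus a response produces.

```python
# Each link maps the stimulus present to (response, stimulus that response produces).
# The produced stimulus is the discriminative stimulus for the next link.
DRIVING_CHAIN = {
    "sight of seat": ("sit", "sight of keyhole"),
    "sight of keyhole": ("insert key", "feel of key in ignition"),
    "feel of key in ignition": ("turn key", "sound of engine"),
    "sound of engine": ("put car in gear", "feel of engaged gear"),
    "feel of engaged gear": ("put foot on accelerator", None),  # end of chain
}


def run_chain(chain, start):
    """Emit responses in order; each response produces the next stimulus."""
    responses = []
    stimulus = start
    while stimulus is not None:
        response, stimulus = chain[stimulus]
        responses.append(response)
    return responses


print(run_chain(DRIVING_CHAIN, "sight of seat"))
# ['sit', 'insert key', 'turn key', 'put car in gear', 'put foot on accelerator']
```

Starting from a later stimulus, such as "sound of engine", runs only the remaining links, which reflects the idea that each link is controlled by the stimulus in front of it rather than by the chain as a whole.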

We can also describe larger units of behavior extending over longer time intervals as consisting of stimulus-response chains. For example:

- Graduating college: Studying this book – doing well on exam; doing well on all exams and assignments – getting good course grade; getting good grades in required and elective courses – graduating.

- Getting into college: preparing for kindergarten; passing kindergarten; passing 1st grade, etc.

- Life: getting fed; getting through school; getting a job; etc., etc.

Whew, that was fast! If only it were so simple!

Adaptive Learning Applications

Predictive Learning Applications

Classical Conditioning of Emotions

John Watson and Rosalie Rayner (1920) famously (some would say infamously) applied classical conditioning procedures to establish a fear in a young child. Their demonstration was a reaction to an influential case study published by Sigmund Freud (1955, originally published in 1909) concerning a young boy called Little Hans. Freud, based upon correspondence with the 5-year-old’s father, concluded that the child’s fear of horses was the result of the psychodynamic defense mechanism of projection. He thought horses, wearing black blinders and having black snouts, symbolically represented the father, who wore black-rimmed glasses and had a mustache. Freud interpreted the fear as the result of an unconscious Oedipal conflict, despite knowing that Little Hans had witnessed a violent accident involving a horse shortly before the onset of the fear.

Watson and Rayner read this case history and considered it enormously speculative and unconvincing (Agras, 1985). They felt that Pavlov’s research in classical conditioning provided principles that could more plausibly account for the onset of Little Hans’s fear. They set out to test their hypothesis with the 11-month-old son of a wet nurse working in the hospital where Watson was conducting research with white rats. The boy is frequently facetiously referred to as Little Albert, after the subject of Freud’s case history.

Albert initially demonstrated no fear and actually approached the white rat. Watson had an interest in child development and eventually published a successful book on this subject (Watson, 1928). He knew that infants innately feared very few things. Among them were painful stimuli, a sudden loss of support, and a startling noise. On 7 occasions while Albert was with the rat (the CS), Watson and Rayner struck a steel rod behind him, producing a loud, startling noise (the US). This was sufficient to result in Albert’s crying and withdrawing (CRs) from the rat when it was subsequently presented. It was also shown that Albert’s fear generalized to other objects, including a rabbit, a fur coat, and a Santa Claus mask.

Desensitization Procedures

Mary Jones, one of Watson’s students, had the opportunity to undo a young child’s extreme fear of rabbits (Jones, 1924). Peter was fed his favorite food while the rabbit was gradually brought closer and closer to him. Eventually, he was able to hold the rabbit on his lap and play with it. This was an example of the combined use of desensitization and counter-conditioning procedures. The gradual increase in the intensity of the feared stimulus constituted the desensitization component. This procedure was designed to extinguish fear by permitting it to occur in mild form with no distressing event following it. Feeding the child in the presence of the feared stimulus constituted counter-conditioning of the fear response: pairing the rabbit with a powerful appetitive stimulus should elicit a response that competes with fear. Direct in vivo (i.e., in the actual situation) desensitization is a very effective technique for addressing anxiety disorders, including shyness and social phobias (Donohue, Van Hasselt, & Hersen, 1994), public speaking anxiety (Newman, Hofmann, Trabert, Roth, & Taylor, 1994), and even panic attacks (Clum, Clum, & Surls, 1993).

Sometimes, direct in vivo treatment of an anxiety disorder is either not possible (e.g., fear of extremely rare or dangerous events) or inconvenient (e.g., the fear occurs in places that are difficult to reach or under circumstances that are difficult to control). In such instances it is possible to administer systematic desensitization (Wolpe, 1958), in which the person imagines the fearful event under controlled conditions (e.g., in the therapist’s office). Usually the person is taught to relax as a competing response while progressing through a hierarchy (i.e., ordered list) of realistic situations. Sometimes there is an underlying dimension that can serve as the basis for the hierarchy. Jones used distance from the rabbit when working with Peter. One could use steps on a ladder to treat a fear of heights. Time from an aversive event can sometimes be used to structure the hierarchy. For example, someone who is afraid of flying might be asked to relax while thinking of planning a vacation for the following year involving flight, followed by ordering tickets 6 months in advance, picking out clothes, packing for the trip, etc. Imaginal systematic desensitization has been found effective for a variety of problems including severe test anxiety (Wolpe & Lazarus, 1966), fear of humiliating jealousy (Ventis, 1973), and anger management (Smith, 1973, pp. 577-578). A review of the systematic desensitization literature concluded that “for the first time in the history of psychological treatments, a specific treatment reliably produced measurable benefits for clients across a wide range of distressing problems in which anxiety was of fundamental importance” (Paul, 1969).

In recent years, virtual reality technology (see Figure 5.15) has been used to treat fears of heights (Coelho, Waters, Hine, & Wallis, 2009) and flying (Wiederhold, Gevirtz, & Spira, 2001), in addition to other fears (Gorrindo & James, 2009; Wiederhold & Wiederhold, 2005). In one case study (Tworus, Szymanska, & Ilnicki, 2010), a soldier wounded three times in battle was successfully treated for Posttraumatic Stress Disorder (PTSD). The use of virtual reality is especially valuable in instances where people have difficulty visualizing scenes and using imaginal desensitization techniques. Later, when we review control learning applications, we will again see how developing technologies enable and enhance treatment of difficult personal and social problems.

Classical Conditioning of Word Meaning

Sticks and stones may break your bones

But words will never hurt you

We all understand that in a sense this old saying is true. We also recognize that words can inflict pain greater than that inflicted by sticks and stones. How do words acquire such power? Pavlov (1928) believed that words, through classical conditioning, acquired the capacity to serve as an indirect “second signal system” distinct from direct experience.

Ostensibly, the meaning of many words is established by pairing them with different experiences. That is, the meaning of a word consists of the learned responses to it (most of which cannot be observed) resulting from the context in which the word is learned. For example, if you close your eyes and think of an orange, you can probably “see”, “smell”, and “taste” the imagined orange. Novelists and poets are experts at using words to produce such rich imagery (DeGrandpre, 2000).

There are different types of evidence supporting the classical conditioning model of word meaning. Razran (1939), a bilingual Russian-American, translated and summarized early research from Russian laboratories. He coined the term “semantic generalization” to describe a different type of stimulus generalization than that described above. In one study it was shown that a conditioned response established to a blue light occurred to the word “blue” and vice versa. In these instances, generalization was based on similarity in meaning rather than similarity on a physical dimension. It has also been demonstrated that responses acquired to a word occurred to synonyms (words having the same meaning) but not homophones (words sounding the same), another example of semantic generalization (Foley & Cofer, 1943).

In a series of studies, Arthur and Carolyn Staats and their colleagues experimentally established meaning using classical conditioning procedures (Staats & Staats, 1957, 1959; Staats, Staats, & Crawford, 1962; Staats, Staats, & Heard, 1959, 1961; Staats, Staats, Heard, & Nims, 1959). In one study (Staats, Staats, & Crawford, 1962), subjects were shown a list of words several times, with the word “LARGE” being followed by either a loud sound or shock. This resulted in heightened galvanic skin responses (GSR) and higher ratings of unpleasantness to “LARGE.” It was also shown that the unpleasantness rating was related to the GSR magnitude. These studies provide compelling support for classical conditioning being a basic process for establishing word meaning. In Chapter 6, we will consider the importance of word meaning in our overall discussion of language as an indirect learning procedure.

Known words can be used to establish the meaning of new words through higher-order conditioning. An important example involves using established words to discourage undesirable acts, reducing the need to rely on punishment. Let us say a parent says “No!” before slapping a child on the wrist as the child starts to stick a finger in an electric outlet. The slap should cause the child to withdraw her/his hand, and after such pairings the word “no” alone should be sufficient to produce the withdrawal response. On a later occasion, the parent says “Hot, no!” as the child reaches for a pot on the stove. The word “no” causes the child to withdraw her/his hand before touching the pot, and pairing “hot” with “no” could transfer the withdrawal response to the word “hot,” even though “hot” was never paired with the slap. This would be an example of what Pavlov meant by a second signal system, with words substituting for direct experience. Here we see the power of classical conditioning principles in helping us understand language acquisition, including the use of words to establish meaning. We also see the power of language as a means of protecting a child from the “school of hard knocks” (and shocks, and burns, etc.).

Evaluative Conditioning

Evaluative conditioning is a term applied to the major research area examining how likes and dislikes are established by pairing objects with positive or negative stimuli in a classical conditioning paradigm (Walther, Weil, & Dusing, 2011). Jan De Houwer and his colleagues have summarized more than three decades of research documenting the effectiveness of such procedures in laboratory studies and in applications to social psychology and consumer science (De Houwer, 2007; De Houwer, Thomas, & Baeyens, 2001; Hofmann, De Houwer, Perugini, Baeyens, & Crombez, 2010). Recently, it has been demonstrated that pairing aversive health-related images with fattening foods led subjects to evaluate those foods more negatively. Subjects also became more likely to choose healthful fruit rather than snack foods (Hollands, Prestwich, & Marteau, 2011). Similar findings were obtained with alcohol and drinking behavior (Houben, Havermans, & Wiers, 2010). A consumer science study demonstrated that college students preferred a pen previously paired with positive images when asked to make a selection (Dempsey & Mitchell, 2010). Celebrity endorsers have been shown to be very effective, particularly when there was an appropriate connection between the endorser and the product (Till, Stanley, & Priluck, 2008). It has even been shown that pairing the word “I” with positive trait words increased self-esteem; college students receiving this experience were not affected by negative feedback regarding their intelligence (Dijksterhuis, 2004). Sexual imagery has been used for years to promote products such as beer and cars (see Figure 5.16).

When we think of adaptive behavior, we ordinarily do not think of emotions or word meaning. Instead, we usually think of these as conditions that motivate or energize the individual to take action. We now turn our attention to control learning, the type of behavior we ordinarily have in mind when we think of adaptation.

Control Learning Applications

Maintenance of Control Learning

We observed that, even after learning has occurred, individuals remain sensitive to environmental correlations and contingencies. Previously acquired behaviors will stop occurring if the correlation (in the case of predictive learning) or contingency (in the case of control learning) is eliminated. This is the extinction process. There is a need to understand the factors that maintain learned behavior when it is infrequently reinforced, which is often the case under naturalistic conditions.

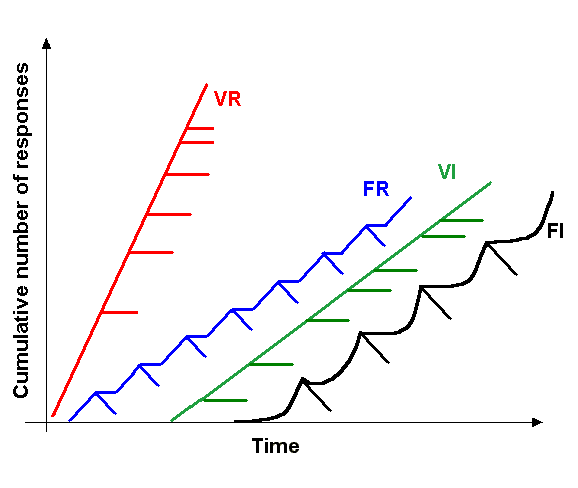

Much of B.F. Skinner’s empirical research demonstrated the effects of different intermittent schedules of reinforcement on the response patterns of pigeons and rats (cf. Ferster & Skinner, 1957). There is an infinite number of possible intermittent schedules between the extremes of never reinforcing and always reinforcing responses. How can we organize the possibilities in a meaningful way? As he did with control learning contingencies, Skinner developed a useful schema for the categorization of intermittent schedules based on two considerations. The most fundamental distinction was between schedules requiring that a certain number of responses be completed (named ratio schedules) and those requiring only a single response when the opportunity was presented (named interval schedules). I often ask my classes if they can provide “real life” examples of ratio and interval schedules. One astute student preparing to enter the military suggested that officers try to get soldiers to believe that promotions occur according to ratio schedules (i.e., how often one does the right thing) but, in reality, they occur on the basis of interval schedules (i.e., doing the right thing when an officer happens to be observing). Working on a commission basis is a ratio contingency: the more items you sell, the more money you make. Calling a friend is an interval contingency: it does not matter how often you try if the person is not home, and only one call is necessary if the friend is available. The other distinction Skinner made is based on whether the response requirement (in ratio schedules) or time requirement (in interval schedules) is constant or not. Contingencies based on constants were called “fixed” and those where the requirements changed were called “variable.” These two distinctions define the four basic reinforcement schedules (see Figure 5.10): fixed ratio (FR), variable ratio (VR), fixed interval (FI), and variable interval (VI).

Contingency

| | Response Dependent | Time Dependent |
|---|---|---|
| Fixed | FR | FI |
| Variable | VR | VI |

F = Fixed (constant pattern)

V = Variable (random pattern)

R = Ratio (number of responses)

I = Interval (time until opportunity)

Figure 5.10 Skinner’s Schema of Intermittent Schedules of Reinforcement.

In an FR schedule, reinforcement occurs after a constant number of responses. For example, an individual on an FR 20 schedule would be reinforced after every twentieth response. In comparison, an individual on a VR 20 schedule would be reinforced, on average, after every twentieth response (e.g., 7 times in 140 responses, with no predictable pattern). In a fixed interval schedule, the opportunity for reinforcement becomes available after a constant amount of time has passed since the previous reinforcement. For example, in an FI 5-minute schedule the individual will be reinforced for the first response occurring after 5 minutes have elapsed since the previous reinforcement. A VI 5-minute schedule would include intervals of different lengths, averaging 5 minutes between opportunities.
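Because each schedule is a precise rule for deciding whether a given response is reinforced, the four rules can be written as small decision procedures. The following Python sketch is illustrative only: the class and function names are hypothetical, time is measured in arbitrary units (treated as seconds in the examples), and the way the VR and VI requirements vary (drawn uniformly around the stated average) is an assumption, since laboratory studies generate these values in various ways.

```python
import random


class FixedRatio:
    """FR n: reinforce every nth response."""
    def __init__(self, n):
        self.n = n
        self.count = 0  # responses since the last reinforcer

    def respond(self, t):
        # t (time of the response) is ignored: ratio schedules depend only on counts
        self.count += 1
        if self.count == self.n:
            self.count = 0
            return True
        return False


class VariableRatio:
    """VR n: reinforce after a varying number of responses averaging n.
    Assumption: each requirement is drawn uniformly from 1..2n-1 (mean n)."""
    def __init__(self, n, rng):
        self.n, self.rng = n, rng
        self.count = 0
        self.required = rng.randint(1, 2 * n - 1)

    def respond(self, t):
        self.count += 1
        if self.count >= self.required:
            self.count = 0
            self.required = self.rng.randint(1, 2 * self.n - 1)
            return True
        return False


class FixedInterval:
    """FI T: reinforce the first response made at least T time units after
    the previous reinforcer (or after the session starts)."""
    def __init__(self, interval):
        self.interval = interval
        self.available_at = interval

    def respond(self, t):
        if t >= self.available_at:
            self.available_at = t + self.interval  # timer restarts at reinforcement
            return True
        return False  # responses during the interval have no effect


class VariableInterval:
    """VI T: like FI, but each interval varies, averaging T.
    Assumption: intervals are drawn uniformly from 0..2T (mean T)."""
    def __init__(self, mean_interval, rng):
        self.mean, self.rng = mean_interval, rng
        self.available_at = rng.uniform(0, 2 * mean_interval)

    def respond(self, t):
        if t >= self.available_at:
            self.available_at = t + self.rng.uniform(0, 2 * self.mean)
            return True
        return False


def count_reinforcers(schedule, response_times):
    """Feed response times to a schedule in order; return reinforcers earned."""
    return sum(schedule.respond(t) for t in response_times)


print(count_reinforcers(FixedRatio(20), range(140)))         # 7
print(count_reinforcers(FixedInterval(300), range(1, 1501)))  # 5
print(count_reinforcers(FixedInterval(300), [301]))           # 1
```

Responding once per second for 25 minutes on an FI 5-minute schedule earns 5 reinforcers, and a single response at 301 seconds earns the one reinforcer available in the first interval. Only the ratio rules reward additional responding.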

It is possible to describe the behavioral pattern that emerges during exposure to an intermittent schedule of reinforcement as an example of control learning. That is, one can consider what constitutes the most effective (i.e., adaptive) pattern of responding under the different contingencies. The most adaptive outcome would be to obtain food as soon as possible while expending the least amount of effort. Individuals have a degree of control over the frequency and timing of reward in ratio schedules that they do not possess in interval schedules. For example, if the ratio value is 5 (i.e., FR 5), the quicker one responds, the sooner the requirement is completed. If the interval value is 5 minutes, it does not matter how rapidly or how often one responds during the interval; the interval must elapse before the next response is reinforced. Because interval schedules require only one response once the interval has elapsed, lower rates of responding reduce effort, although they may delay reinforcement.
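The difference in control can be checked with simple arithmetic. The sketch below is a hypothetical illustration, not a model from the research literature: it assumes perfectly regular responding, one response every `gap` seconds beginning at `t = gap`, and compares an FR 5 schedule with an FI 5-minute (300-second) schedule.

```python
import math


def fixed_ratio_outcome(n, gap):
    """FR n with one response every `gap` seconds (first response at t = gap).
    Returns (seconds until reinforcement, responses emitted)."""
    return n * gap, n


def fixed_interval_outcome(interval, gap):
    """FI `interval` with one response every `gap` seconds (first at t = gap).
    The reinforcer arrives with the first response at or after `interval`."""
    responses = math.ceil(interval / gap)
    return responses * gap, responses


for gap in (1, 10, 60, 120):  # seconds between responses; larger gap = slower
    fr_time, fr_resp = fixed_ratio_outcome(5, gap)
    fi_time, fi_resp = fixed_interval_outcome(300, gap)
    print(f"gap={gap:>3}s  FR 5: {fr_time:>4}s, {fr_resp} responses   "
          f"FI 5-min: {fi_time:>4}s, {fi_resp:>3} responses")
```

Under FR 5, faster responding shortens the wait (5 seconds versus 600 seconds) at a constant cost of 5 responses. Under FI 5-minute, responding every second earns food no sooner than responding every minute (300 seconds either way) but costs 300 responses instead of 5, while responding every 2 minutes saves responses (3) at the price of a delay (360 seconds).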

Figure 5.11 Characteristic cumulative response patterns for the four basic schedules.

Figure 5.11 shows the characteristic cumulative response patterns produced by each of the four basic schedules. We may consider the optimal response pattern for each of the four basic schedules and compare this with what actually occurs under laboratory conditions. High rates of responding in ratio schedules will result in receiving food as soon as possible and maximizing the amount of food received per session. Therefore, it is not surprising that ratio schedules result in higher response rates than interval schedules. FR schedules result in an “all-or-none” pattern with distinct post-reinforcement pauses related to the ratio value. In contrast, with large-value VR schedules, shorter pauses typically occur at random points. This difference is due to the predictability of the FR schedule: with experience, the individual can develop an expectancy regarding the amount of effort and time it will take to be rewarded. With VR schedules, the amount of effort and time required for the next reward is unpredictable; therefore, one does not observe consistent post-reinforcement pauses.

With an FI schedule, it is possible to receive reinforcement as soon as possible and with as little effort as possible by responding once, immediately after the required interval has elapsed. For example, in an FI 5-minute schedule, responses occurring before the 5 minutes elapse have no effect on the delivery of food. If one waits past 5 minutes, reinforcement is delayed. Thus, there is competition between the desire to obtain food as soon as possible and the desire to conserve responses. The cumulative response pattern that emerges under such schedules has been described as the fixed-interval scallop and is a compromise between these two competing motives. There is an extended pause after reinforcement, similar to what occurs with the FR schedule, since once again the interval between reinforcements is predictable. However, unlike the characteristic burst following the pause in FR responding, the FI schedule results in a gradual increase in response rate, so that responding is occurring consistently when the reward becomes available. This response pattern results in receiving the food as soon as possible while conserving responses. Repetition of the pattern produces the characteristic scalloped cumulative response graph.

With a VI schedule, it is impossible to predict when the next opportunity for reinforcement will occur. Under these conditions, as with the VR schedule, the individual responds at a steady rate. Unlike the VR schedule, however, the pace depends on the average interval length. As with the FI schedule, the VI pattern represents a compromise between the desire to obtain the reward as soon as possible and the desire to conserve responses. An example might help clarify why the VI schedule works this way. Imagine you have two tickets to a concert and know two friends who would love to go with you. One of them is much chattier than the other. Let us say the chatty friend talks an average of 30 minutes on the phone, while the other averages only about 5 minutes. Assuming you would like to contact one of your friends as soon as possible, you would most likely try the less chatty one first and, if you received busy signals from both, call the less chatty friend back more often, because that line is likely to become free sooner.
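The advantage of calling the less chatty friend more often can be put in rough numbers. The sketch below is a hypothetical illustration that assumes call lengths are exponentially distributed, a common simplifying assumption for telephone calls that is not part of the original example. Under that assumption, the chance that a busy line becomes free within a given wait does not depend on how long the call has already lasted, which mirrors the unpredictability of a VI schedule.

```python
import math


def chance_line_free(mean_call_minutes, wait_minutes):
    """Probability that a friend who is on the phone has hung up within
    `wait_minutes`, ASSUMING exponentially distributed call lengths with
    mean `mean_call_minutes` (a modeling assumption, not a known fact)."""
    return 1 - math.exp(-wait_minutes / mean_call_minutes)


for name, mean in (("chatty friend", 30), ("less chatty friend", 5)):
    print(f"{name}: P(free within 5 min) = {chance_line_free(mean, 5):.2f}")
```

With a 5-minute wait between calls, the less chatty friend’s line has about a 0.63 chance of being free, compared with about 0.15 for the chatty friend, so frequent retries pay off only for the shorter average interval.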

Applications of Control Learning

Learned Industriousness

The biographies of high-achieving individuals often describe them as enormously persistent. They seem to engage in a lot of practice in their area of specialization, be it the arts, sciences, helping professions, business, athletics, etc. Successful individuals persevere, even after many “failures” occur over extended time periods. Legend has it that when Thomas Edison’s wife asked him what he knew after all the time he had spent trying to find an effective filament for a light bulb, he replied, “I’ve just found 10,000 ways that won’t work!” Eisenberger (1992) reviewed the animal and human research literatures and coined the term learned industriousness to describe the combination of persistence, willingness to expend maximum effort, and self-control (e.g., willingness to postpone gratification in the marshmallow test described in Chapter 1). Eisenberger found that improving an individual on any one of these characteristics carried over to the other two. Intermittent schedule effects have implications for learned industriousness, since intermittent schedules require an individual to keep responding through many unreinforced attempts.

Contingency Management of Substance Abuse

An adult selling illegal drugs to support an addictive disorder is highly motivated to surreptitiously commit an illegal act. As withdrawal symptoms become increasingly severe, punishment procedures lose their effectiveness. It would be prudent and desirable to enroll the addict in a drug-rehabilitation program involving medically monitored withdrawal procedures or medical provision of a legal substitute. Ideally, this would be done on a voluntary basis, but it could be court-mandated. Contingency management procedures have been used successfully for years to treat substance abusers. In one of the first such studies, vouchers exchangeable for goods and services were awarded for cocaine-free urine samples, assessed 3 times per week (Higgins, Delaney, Budney, Bickel, Hughes, & Foerg, 1991). Hopefully, successful treatment of the addiction would be sufficient to eliminate the motive for further criminal activity. A cash-based voucher contingency management program for alcohol abstinence has been implemented using combined urine and breath assessment procedures. It nearly doubled the rate of alcohol-free test results, from 35% to 69% (McDonell, Howell, McPherson, Cameron, Srebnik, Roll, & Ries, 2012).

Self-Control: Manipulating “A”s & “C”s to Affect “B”s

Self-control techniques have been described in previous chapters. Perri and Richards (1977) found that college students systematically using behavior-change techniques were more successful than others in regulating their eating, smoking, and studying habits. Findings such as these constitute the empirical basis for the self-control assignments in this book. In this chapter, we discussed the ABCs of control learning. Now we will see how it is possible to manipulate the antecedents and consequences of one’s own behaviors in order to change those behaviors in a desired way. We saw how prompting may be used to speed the acquisition process. A prompt is any stimulus that increases the likelihood of a behavior. People have been shopping from lists and posting signs on their refrigerators for years. My students believe it rains “Post-it” notes in my office! Sometimes, it may be sufficient to address a behavioral deficit by placing prompts in appropriate locations. For example, you could put up signs or pictures as reminders to clean your room, organize clutter, converse with your children, exercise, etc. Place healthy foods in the front of the refrigerator and pantry so that you see them first. In the instance of behavioral excesses, your objective is to reduce or eliminate prompts (i.e., “triggers”) for your target behavior. Examples would include restricting eating to one location in your home, avoiding situations where you are likely to smoke, eat, or drink to excess, etc. Reduce the effectiveness of powerful prompts by keeping them out of sight and/or increasing the time required to get to them. For example, fattening foods could be kept in the back of the refrigerator wrapped in several bags.

Throughout this book we stress the human ability to transform the environment. You have the power to structure your surroundings to encourage desirable and discourage undesirable acts. Sometimes adding prompts and eliminating triggers is sufficient to achieve your personal objectives. If this is the case, it will become apparent as you graph your intervention phase data. If manipulating antecedents is insufficient, you can manipulate the consequences of your thoughts, feelings, or overt acts. If you are addressing a behavioral deficit (e.g., you would like to exercise or study more), you need to identify a convenient and effective reinforcer (i.e., reward, or appetitive stimulus). Straightforward possibilities include money (a powerful generalized reinforcer that can be earned immediately) and favored activities that can be engaged in daily (e.g., pleasure reading, watching TV, listening to music, engaging in online activities, playing video games, texting, etc.). Maximize the likelihood of success by starting with minimal requirements and gradually increasing the performance levels required to earn rewards (i.e., use the shaping procedure). For example, you might start out by walking slowly on a treadmill for brief periods of time, gradually increasing the speed and duration of sessions.
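A shaping plan of this kind can be tracked with a simple rule for raising the requirement. The Python sketch below is one hypothetical way to do it, not a procedure prescribed in the research literature: the function name, the step size, and the rule of raising the criterion after a set number of consecutive rewarded days (and restarting the count after a miss) are all assumptions chosen for illustration, and the treadmill minutes are invented.

```python
def shaping_sessions(start, step, goal, successes_needed, daily_minutes):
    """Track a HYPOTHETICAL shaping plan. The reward criterion starts at
    `start` minutes and rises by `step` (never above `goal`) after
    `successes_needed` consecutive rewarded days.
    Returns a list of (criterion, minutes done, reward earned) per day."""
    criterion, streak, log = start, 0, []
    for minutes in daily_minutes:
        earned = minutes >= criterion
        log.append((criterion, minutes, earned))
        if earned:
            streak += 1
            if streak >= successes_needed and criterion < goal:
                criterion = min(criterion + step, goal)  # raise the bar
                streak = 0
        else:
            streak = 0  # missing the criterion restarts the count
    return log


days = [5, 6, 10, 10, 12, 15, 15, 20]  # invented treadmill minutes per day
for day, (crit, done, earned) in enumerate(shaping_sessions(5, 5, 20, 2, days), 1):
    print(f"day {day}: criterion {crit} min, walked {done} min, "
          f"reward {'yes' if earned else 'no'}")
```

In this run the criterion climbs from 5 to 20 minutes over eight days. The one missed reward (day 5) simply delays the next increase, which keeps each step small enough to be reached.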

After implementing self-control manipulations of antecedents and consequences, it is important to continue to accurately record the target behavior during the intervention phase. If the results are less than satisfactory, it should be determined whether there is an implementation problem (e.g., the reinforcer is too delayed or not powerful enough) or whether it is necessary to change the procedure. The current research literature is the best source for problem-solving strategies. The great majority of my students are quite successful in attaining their self-control project goals. Recently some have taken advantage of developing technologies in their projects. The smartphone is gradually becoming an all-purpose “ABC” device. As antecedents, students are using “to do” lists and alarm settings as prompts. They use the notepad to record behavioral observations. Some applications on smartphones and fitness devices enable you to monitor and record health habits such as the quality of your sleep and the number of calories you consume at meals. As a consequence (i.e., reinforcer), you could use access to games or listening to music, possibly on the phone itself.