1.1: The Study of History

1.1.1: Splitting History

Periodization—the process of categorizing the past into discrete, quantified, named blocks of time in order to facilitate the study and analysis of history—is always arbitrary and rooted in particular regional perspectives, but serves to organize and systematize historical knowledge.

Learning Objective

Analyze the complications inherent to splitting history for the purpose of academic study

Key Points

- The question of what kind of inquiries historians pose, what knowledge they seek, and how they interpret the evidence that they find remains controversial. Historians draw conclusions from past approaches to history, but in the end, they always write in the context of their own time, current dominant ideas of how to interpret the past, and even subjective viewpoints.

- All events that are remembered and preserved in some original form constitute the historical record. The task of historians is to identify the sources that can most usefully contribute to the production of accurate accounts of the past. These sources, known as primary sources or evidence, were produced at the time under study and constitute the foundation of historical inquiry.

- Periodization is the process of categorizing the past into discrete, quantified, named blocks of time in order to facilitate the study and analysis of history. This results in descriptive abstractions that provide convenient terms for periods of time with relatively stable characteristics. All systems of periodization are arbitrary.

- The common general split between prehistory, ancient history, Middle Ages, modern history, and contemporary history is a Western division of the largest blocks of time agreed upon by Western historians. However, even within this largely accepted division, the perspective of specific national developments and experiences often divides Western historians, as some periodizing labels are applicable only to particular regions.

- The study of world history emerged as a distinct academic field in order to examine history from a global rather than a solely national perspective. However, the field still struggles with an inherently Western periodization.

- World historians use a thematic approach to look for common patterns that emerge across all cultures. World history’s periodization, as imperfect and biased as it is, serves as a way to organize and systematize knowledge.

Key Terms

- world history: (Also global history or transnational history) A distinct academic field that emerged in the 1980s and examines history from a global perspective. World history should not be confused with comparative history, which, like world history, deals with the history of multiple cultures and nations, but does not do so on a global scale. World history identifies common patterns that emerge across all cultures.

- periodization: The process or study of categorizing the past into discrete, quantified, named blocks of time in order to facilitate the study and analysis of history. This results in descriptive abstractions that provide convenient terms for periods of time with relatively stable characteristics. However, determining the precise beginning and ending of any period is usually arbitrary.

- primary sources: Original sources of information about a topic. In the study of history as an academic discipline, primary sources include artifacts, documents, diaries, manuscripts, autobiographies, recordings, or other sources of information created at the time under study.

How Do We Write History?

The word history comes ultimately from Ancient Greek historía, meaning “inquiry,” “knowledge from inquiry,” or “judge.” However, the question of what kind of inquiries historians pose, what knowledge they seek, and how they interpret the evidence that they find remains controversial. Historians draw conclusions from past approaches to history, but in the end, they always write in the context of their own time, current dominant ideas of how to interpret the past, and even subjective viewpoints. Furthermore, current events and developments often determine which past events, historical periods, or geographical regions are seen as critical and thus worth investigating. Finally, historical studies are often designed to provide specific lessons for societies today. In the words of Benedetto Croce, Italian philosopher and historian, “All history is contemporary history.”

All events that are remembered and preserved in some original form constitute the historical record. The task of historians is to identify the sources that can most usefully contribute to the production of accurate accounts of the past. These sources, known as primary sources or evidence, were produced at the time under study and constitute the foundation of historical inquiry. Ideally, a historian will use as many available primary sources as can be accessed, but in practice, sources may have been destroyed or may not be available for research. In some cases, the only eyewitness reports of an event may be memoirs, autobiographies, or oral interviews taken years later. Sometimes, the only evidence relating to an event or person in the distant past was written or copied decades or centuries later. Historians remain cautious when working with evidence recorded years, or even decades or centuries, after an event, because such evidence raises the question of how accurately witnesses remember events. However, historians also point out that hardly any historical evidence can be seen as objective, as it is always a product of particular individuals, times, and dominant ideas. This is also why researchers try to find as many records of an event under investigation as possible, and it is not unusual for them to find evidence that presents contradictory accounts of the same events.

In general, the sources of historical knowledge can be separated into three categories: what is written, what is said, and what is physically preserved. Historians often consult all three.

Periodization

Periodization is the process of categorizing the past into discrete, quantified, named blocks of time in order to facilitate the study and analysis of history. This results in descriptive abstractions that provide convenient terms for periods of time with relatively stable characteristics.

To the extent that history is continuous and cannot be generalized, all systems of periodization are arbitrary. Moreover, determining the precise beginning and ending of any period is also a matter of arbitrary decisions. Ultimately, periodizing labels reflect very particular cultural and geographical perspectives, as well as specific subfields or themes of history (e.g., military history, social history, political history, intellectual history, cultural history, etc.).

Consequently, not only do periodizing blocks inevitably overlap, but they also often seemingly conflict with or contradict one another. Some have a cultural usage (the Gilded Age), others refer to prominent historical events (the inter-war years: 1918–1939), yet others are defined by decimal numbering systems (the 1960s, the 17th century). Other periods are named after influential individuals whose impact may or may not have reached beyond certain geographic regions (the Victorian Era, the Edwardian Era, the Napoleonic Era).

Western Historical Periods

The common general split between prehistory (before written history), ancient history, Middle Ages, modern history, and contemporary history (history within living memory) is a Western division of the largest blocks of time agreed upon by Western historians and representing the Western point of view. For example, the history of Asia or Africa cannot be neatly categorized following these periods.

However, even within this largely accepted division, the perspective of specific national developments and experiences often divides Western historians, as some periodizing labels will be applicable only to particular regions.

This is especially true of labels derived from individuals or ruling dynasties, such as the Jacksonian Era in the United States or the Merovingian Period in France. Cultural terms may have a broader, yet still limited, reach. For example, the concept of the Romantic period is largely meaningless outside of Europe and European-influenced cultures; even within those areas, different European regions may mark the beginning and ending points of Romanticism differently. Likewise, the 1960s, although technically applicable to anywhere in the world according to Common Era numbering, carries a specific set of cultural connotations in certain countries, including sexual revolution, counterculture, and youth rebellion. However, these never emerged in some regions (e.g., in Spain under Francisco Franco’s authoritarian regime). Some historians have also noted that the 1960s, as a descriptive historical period, actually began in the late 1950s and ended in the early 1970s, because the cultural and economic conditions that define the meaning of the period dominated longer than the actual decade of the 1960s.

Petrarch by Andrea del Castagno.

Petrarch, Italian poet and thinker, conceived of the idea of a European “Dark Age,” which later evolved into the tripartite periodization of Western history into Ancient, Middle Ages and Modern.

While world history (also referred to as global history or transnational history) emerged as a distinct academic field of historical study in the 1980s to examine history from a global rather than a solely national perspective, it still struggles with an inherently Western periodization. The common splits used when designing comprehensive college-level world history courses (and thus also used in history textbooks, which are usually divided into volumes covering pre-modern and modern eras) are still a result of certain historical developments presented from the perspective of the Western world and particular national experiences. However, even the split between pre-modern and modern eras is problematic, because it is complicated by the question of how history educators, textbook authors, and publishers decide to categorize what is known as the early modern era, traditionally the period between the Renaissance and the end of the Age of Enlightenment. In the end, whether the early modern era is included in the first or the second part of a world history course frequently offered in U.S. colleges is a subjective decision of history educators. As a result, the same questions and choices apply to history textbooks written and published for the U.S. audience.

World historians use a thematic approach to identify common patterns that emerge across all cultures, with two major focal points: integration (how processes of world history have drawn people of the world together) and difference (how patterns of world history reveal the diversity of the human experiences). The periodization of world history, as imperfect and biased as it is, serves as a way to organize and systematize knowledge.

Without it, history would be nothing more than scattered events without a framework designed to help us understand the past.

1.1.2: Dates and Calendars

While various calendars were developed and used across millennia, cultures, and geographical regions, Western historical scholarship has unified the standards of determining dates based on the dominant Gregorian calendar.

Learning Objective

Compare and contrast different calendars and how they affect our understanding of history

Key Points

- The first recorded calendars date to the Bronze Age and include the Egyptian and Sumerian calendars. A larger number of calendar systems of the Ancient Near East became accessible in the Iron Age and were based on the Babylonian calendar. A great number of Hellenic calendars also developed in Classical Greece and influenced calendars outside of the immediate sphere of Greek influence, giving rise to the various Hindu calendars, as well as to the ancient Roman calendar.

- Despite the variety of calendars used across millennia, cultures, and geographical regions, Western historical scholarship has unified the standards of determining dates based on the dominant Gregorian calendar.

- Julius Caesar effected drastic changes in the existing timekeeping system. The New Year in 709 AUC (45 BCE) began on January 1 and ran over 365 days until December 31. Further adjustments were made under Augustus, who corrected the application of the leap year in 757 AUC (4 CE). The resultant Julian calendar remained in almost universal use in Europe until 1582.

- The Gregorian calendar, also called the Western calendar and the Christian calendar, is internationally the most widely used civil calendar today. It is named after Pope Gregory XIII, who introduced it in October 1582. The calendar was a refinement of the Julian calendar, amounting to a 0.002% correction in the length of the year.

- While the European Gregorian calendar eventually dominated the world and historiography, a number of other calendars have shaped timekeeping systems that are still influential in some regions of the world. These include the Islamic calendar, various Hindu calendars, and the Mayan calendar.

- A calendar era that is often used as an alternative naming of the long-accepted anno Domini/before Christ system is Common Era or Current Era, abbreviated CE. While both systems are an accepted standard, the Common Era system is more neutral and inclusive of a non-Christian perspective.

Key Terms

- Mayan calendar: A system of calendars used in pre-Columbian Mesoamerica, and in many modern communities in the Guatemalan highlands, Veracruz, Oaxaca and Chiapas, Mexico. The essentials of it are based upon a system that was in common use throughout the region, dating back to at least the fifth century BCE. It shares many aspects with calendars employed by other earlier Mesoamerican civilizations, such as the Zapotec and Olmec, and with contemporary or later calendars, such as the Mixtec and Aztec calendars.

- anno Domini: The Medieval Latin term meaning “in the year of the Lord,” often translated as “in the year of our Lord.” Dionysius Exiguus of Scythia Minor introduced the system based on this concept in 525, counting the years since the birth of Christ.

- Islamic calendar: (Also Muslim calendar or Hijri calendar) A lunar calendar consisting of 12 months in a year of 354 or 355 days. It is used to date events in many Muslim countries (concurrently with the Gregorian calendar), and is used by Muslims everywhere to determine the proper days on which to observe the annual fast, perform the Hajj, and celebrate other Islamic holidays and festivals. Its first year began in 622 CE, the year of the emigration of Muhammad from Mecca to Medina, known as the Hijra.

- Gregorian calendar: (Also the Western calendar and the Christian calendar) The most widely used civil calendar internationally today. It is named after Pope Gregory XIII, who introduced it in October 1582. The calendar was a refinement of the Julian calendar, amounting to a 0.002% correction in the length of the year.

- Julian calendar: A calendar introduced by Julius Caesar in 46 BCE (708 AUC) as a reform of the Roman calendar. It took effect in 45 BCE (709 AUC). It was the predominant calendar in the Roman world, most of Europe, and European settlements in the Americas and elsewhere, until it was refined and gradually replaced by the Gregorian calendar, promulgated in 1582 by Pope Gregory XIII.

Calendars and Writing History

Methods of timekeeping can be reconstructed for the prehistoric period from at least the Neolithic period. The natural units for timekeeping used by most historical societies are the day, the solar year, and the lunation. The first recorded calendars date to the Bronze Age and include the Egyptian and Sumerian calendars. A larger number of calendar systems of the Ancient Near East became accessible in the Iron Age and were based on the Babylonian calendar. One of these was the calendar of the Persian Empire, which in turn gave rise to the Zoroastrian calendar, as well as the Hebrew calendar.

A great number of Hellenic calendars were developed in Classical Greece and influenced calendars outside of the immediate sphere of Greek influence. These gave rise to the various Hindu calendars, as well as to the ancient Roman calendar, which contained very ancient remnants of a pre-Etruscan ten-month solar year. The Roman calendar was reformed by Julius Caesar in 45 BCE. The Julian calendar was no longer dependent on the observation of the new moon, but simply followed an algorithm of introducing a leap day every four years. This created a dissociation of the calendar month from the lunation. The Gregorian calendar was introduced as a refinement of the Julian calendar in 1582 and is today in worldwide use as the de facto calendar for secular purposes.

Despite the variety of calendars used across millennia, cultures, and geographical regions, Western historical scholarship has unified the standards of determining dates based on the dominant Gregorian calendar. Regardless of what historical period or geographical area Western historians investigate and write about, they adjust dates from the original timekeeping system to the Gregorian calendar. Occasionally, historians give both dates: the date recorded under the original calendar and the date adjusted to the Gregorian calendar, which is easily recognizable to the Western student of history.

Julian Calendar

The old Roman year had 304 days divided into ten months, beginning with March. However, the ancient historian Livy gave credit to the second ancient Roman king, Numa Pompilius, for devising a calendar of twelve months. The extra months Ianuarius and Februarius had been invented, supposedly by Numa Pompilius, as stop-gaps. Julius Caesar realized that the system had become inoperable, so he effected drastic changes in the year of his third consulship. The New Year in 709 AUC (ab urbe condita, the year counted “from the founding of the City” of Rome) began on January 1 and ran over 365 days until December 31. Further adjustments were made under Augustus, who corrected the application of the leap year in 757 AUC (4 CE). The resultant Julian calendar remained in almost universal use in Europe until 1582. Marcus Terentius Varro introduced the ab urbe condita epoch, assuming a foundation of Rome in 753 BCE. The system remained in use during the early medieval period until the widespread adoption of the Dionysian era in the Carolingian period. The seven-day week has a tradition reaching back to the Ancient Near East, but the introduction of the planetary week, which remains in modern use, dates to the Roman Empire period.
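Converting between AUC years and BCE/CE years is simple arithmetic once an epoch is fixed. The sketch below is a minimal illustration, assuming Varro's epoch (1 AUC = 753 BCE) and the absence of a year zero between 1 BCE and 1 CE; the function names `auc_to_era` and `era_to_auc` are hypothetical and exist only for this example.

```python
def auc_to_era(auc: int) -> str:
    """Convert an ab urbe condita year to a BCE/CE label (hypothetical helper).

    Assumes Varro's epoch, 1 AUC = 753 BCE, and no year zero.
    """
    if auc < 1:
        raise ValueError("AUC years start at 1")
    if auc <= 753:
        # 1 AUC = 753 BCE, ..., 753 AUC = 1 BCE
        return f"{754 - auc} BCE"
    # 754 AUC = 1 CE, because no year zero sits in between
    return f"{auc - 753} CE"


def era_to_auc(year: int, era: str) -> int:
    """Inverse of auc_to_era for a year labeled 'BCE' or 'CE'."""
    if year < 1:
        raise ValueError("there is no year zero")
    if era == "BCE":
        return 754 - year
    if era == "CE":
        return year + 753
    raise ValueError("era must be 'BCE' or 'CE'")


# AUC years that appear in this section
for auc in (708, 709):
    print(auc, "AUC =", auc_to_era(auc))
```

Under the same assumption, `era_to_auc(753, "BCE")` returns 1, recovering Varro's founding year.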

Gregorian Calendar

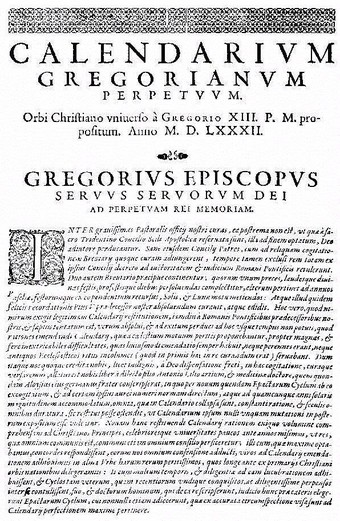

The Gregorian calendar, also called the Western calendar and the Christian calendar, is internationally the most widely used civil calendar today. It is named after Pope Gregory XIII, who introduced it in October 1582. The calendar was a refinement of the Julian calendar, amounting to a 0.002% correction in the length of the year. The motivation for the reform was to stop the drift of the calendar with respect to the equinoxes and solstices, particularly the vernal equinox, which set the date for Easter celebrations. Transition to the Gregorian calendar restored the holiday to the time of the year in which it was celebrated when introduced by the early Church. The reform was adopted initially by the Catholic countries of Europe. Protestant and Eastern Orthodox countries continued to use the traditional Julian calendar and eventually adopted the Gregorian reform for the sake of convenience in international trade. The last European country to adopt the reform was Greece in 1923.

The first page of the papal bull “Inter Gravissimas” by which Pope Gregory XIII introduced his calendar.
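The text gives the size of the Gregorian correction but not the leap-year rules behind it. Assuming the standard rules (Julian: every fourth year is a leap year; Gregorian: the same, except that century years are leap years only when divisible by 400), a minimal sketch can average the year length over one 400-year cycle and recover the figure of roughly 0.002%. The helper names are hypothetical.

```python
from collections.abc import Callable
from fractions import Fraction


def is_julian_leap(year: int) -> bool:
    # Julian rule: every fourth year is a leap year
    return year % 4 == 0


def is_gregorian_leap(year: int) -> bool:
    # Gregorian rule: century years are leap years only if divisible by 400
    return year % 4 == 0 and (year % 100 != 0 or year % 400 == 0)


def mean_year_length(is_leap: Callable[[int], bool], cycle: int = 400) -> Fraction:
    """Exact average year length in days over one full 400-year cycle."""
    days = sum(366 if is_leap(y) else 365 for y in range(1, cycle + 1))
    return Fraction(days, cycle)


julian = mean_year_length(is_julian_leap)        # 365.25 days
gregorian = mean_year_length(is_gregorian_leap)  # 365.2425 days
correction = (julian - gregorian) / julian       # relative shortening of the year
print(float(julian), float(gregorian), f"{float(correction):.4%}")
```

Using `Fraction` keeps the averages exact, so the only rounding happens when the percentage is formatted for display.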

During the period between 1582, when the first countries adopted the Gregorian calendar, and 1923, when the last European country adopted it, it was often necessary to indicate the date of some event in both the Julian calendar and in the Gregorian calendar. Even before 1582, the year sometimes had to be double dated because of the different beginnings of the year in various countries.
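Double dating requires converting a Julian date into its Gregorian equivalent. One common approach, sketched here under the assumption that the integer Julian Day Number formulas used in astronomy are acceptable (they are not described in this text), passes both calendars through a continuous day count. The function names are hypothetical. The example reproduces two well-known transitions: in 1582, the day after Julian 4 October was Gregorian 15 October, and in Greece in 1923, the day after Julian 15 February was Gregorian 1 March.

```python
def julian_to_jdn(year: int, month: int, day: int) -> int:
    """Julian Day Number of a date in the (proleptic) Julian calendar."""
    a = (14 - month) // 12          # 1 for January/February, else 0
    y = year + 4800 - a             # shift to a year that starts in March
    m = month + 12 * a - 3          # March = 0, ..., February = 11
    return day + (153 * m + 2) // 5 + 365 * y + y // 4 - 32083


def jdn_to_gregorian(jdn: int) -> tuple[int, int, int]:
    """Gregorian (year, month, day) for a Julian Day Number."""
    a = jdn + 32044
    b = (4 * a + 3) // 146097       # completed 400-year Gregorian cycles
    c = a - 146097 * b // 4
    d = (4 * c + 3) // 1461         # completed 4-year cycles
    e = c - 1461 * d // 4
    m = (5 * e + 2) // 153          # month counted from March
    day = e - (153 * m + 2) // 5 + 1
    month = m + 3 - 12 * (m // 10)
    year = 100 * b + d - 4800 + m // 10
    return year, month, day


def julian_to_gregorian(year: int, month: int, day: int) -> tuple[int, int, int]:
    return jdn_to_gregorian(julian_to_jdn(year, month, day))


print(julian_to_gregorian(1582, 10, 5))   # (1582, 10, 15)
print(julian_to_gregorian(1923, 2, 16))   # (1923, 3, 1)
```

The growing gap (10 days in 1582, 13 days by 1923) comes from the century years that the Julian calendar treats as leap years and the Gregorian calendar does not.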

Calendars Outside of Europe

While the European Gregorian calendar eventually dominated the world and historiography, a number of other calendars have shaped timekeeping systems that are still influential in some regions of the world.

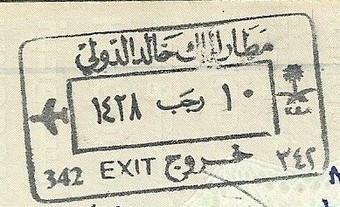

The Islamic calendar counts its first year from 622 CE, the year in which the emigration of Muhammad from Mecca to Medina, known as the Hijra, occurred. It is used to date events in many Muslim countries (concurrently with the Gregorian calendar) and is used by Muslims everywhere to determine the proper days on which to observe and celebrate Islamic religious practices (e.g., fasting), holidays, and festivals.

Various Hindu calendars developed in the medieval period with Gupta era astronomy as their common basis. Some of the more prominent regional Hindu calendars include the Nepali calendar, Assamese calendar, Bengali calendar, Malayalam calendar, Tamil calendar, the Vikrama Samvat (used in Northern India), and Shalivahana calendar. The common feature of all regional Hindu calendars is that the names of the twelve months are the same (because the names are based on Sanskrit), although the spelling and pronunciation have come to vary slightly from region to region over thousands of years. The month that starts the year also varies from region to region. The Buddhist calendar and the traditional lunisolar calendars of Cambodia, Laos, Myanmar, Sri Lanka, and Thailand are also based on an older version of the Hindu calendar.

Of all the ancient calendar systems, the Mayan and other Mesoamerican systems are the most complex. The Mayan calendar had two years, the 260-day Sacred Round, or tzolkin, and the 365-day Vague Year, or haab.

The essentials of the Mayan calendar are based upon a system that was in common use throughout the region, dating back to at least the fifth century BCE. It shares many aspects with calendars employed by other earlier Mesoamerican civilizations, such as the Zapotec and Olmec, and contemporary or later ones, such as the Mixtec and Aztec calendars. The Mayan calendar is still used in many modern communities in the Guatemalan highlands, Veracruz, Oaxaca and Chiapas, Mexico.
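Because the 260-day tzolkin and the 365-day haab run side by side, a given pairing of dates from the two cycles recurs only after the least common multiple of their lengths. The text does not describe this combined cycle (commonly called the Calendar Round); the sketch below only shows the arithmetic, and the constant names are illustrative.

```python
from math import gcd

TZOLKIN_DAYS = 260  # Sacred Round: 13 numbers x 20 day names
HAAB_DAYS = 365     # Vague Year: 18 months of 20 days + 5 extra days

# The two cycles realign only after the least common multiple of their lengths
calendar_round = TZOLKIN_DAYS * HAAB_DAYS // gcd(TZOLKIN_DAYS, HAAB_DAYS)

print(calendar_round)                  # 18980 days
print(calendar_round // HAAB_DAYS)     # 52 Vague Years
print(calendar_round // TZOLKIN_DAYS)  # 73 Sacred Rounds
```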

Islamic Calendar stamp issued at King Khaled airport (10 Rajab 1428 / 24 July 2007)

The first year was the Islamic year beginning in AD 622, during which the emigration of Muhammad from Mecca to Medina, known as the Hijra, occurred. Each numbered year is designated either “H” for Hijra or “AH” for the Latin Anno Hegirae (“in the year of the Hijra”). Hence, Muslims typically call their calendar the Hijri calendar.
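Because a lunar year of 354 or 355 days is about eleven days shorter than a solar year, Hijri years slowly drift through the seasons and do not map one-to-one onto Gregorian years. The rough sketch below assumes the mean year of the tabular Islamic calendar (a 30-year cycle with 11 leap years, 10,631 days in total) and the commonly cited epoch of 16 July 622. Actual month starts often depend on lunar observation, so this is an estimate, not a converter. The names are hypothetical.

```python
# Assumed mean year lengths in days
TABULAR_HIJRI_YEAR = 10631 / 30  # 19 years of 354 days + 11 years of 355 days
SOLAR_YEAR = 365.2425            # mean Gregorian year
EPOCH = 622 + 196 / 365          # 16 July 622 expressed as a fractional year


def approx_ce_start_of_ah(ah_year: int) -> float:
    """Approximate fractional CE year in which a given AH year begins."""
    return EPOCH + (ah_year - 1) * TABULAR_HIJRI_YEAR / SOLAR_YEAR


drift = SOLAR_YEAR - TABULAR_HIJRI_YEAR
print(f"A Hijri year is shorter by about {drift:.1f} days")
print(f"AH 1428 began around {approx_ce_start_of_ah(1428):.2f} CE")
```

The estimate places the start of AH 1428 early in 2007, which is consistent with the stamp dated 10 Rajab 1428 / 24 July 2007.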

Anno Domini v. Common Era

The terms anno Domini (AD) and before Christ (BC) are used to label or number years in the Julian and Gregorian calendars. The term anno Domini is Medieval Latin and means “in the year of the Lord,” but is often translated as “in the year of our Lord.” It is occasionally set out more fully as anno Domini nostri Iesu (or Jesu) Christi (“in the year of Our Lord Jesus Christ”). Dionysius Exiguus of Scythia Minor introduced the AD system in AD 525, counting the years since the birth of Christ. This calendar era is based on the traditionally recognized year of the conception or birth of Jesus of Nazareth, with AD counting years after the start of this epoch and BC denoting years before the start of the era. There is no year zero in this scheme, so the year AD 1 immediately follows the year 1 BC. This dating system was devised in 525 but was not widely used until after 800.

A calendar era that is often used as an alternative naming of the anno Domini system is Common Era or Current Era, abbreviated CE. The system uses BCE as an abbreviation for “before the Common (or Current) Era.” The CE/BCE designation uses the same numeric values as the AD/BC system, so the two notations (CE/BCE and AD/BC) are numerically equivalent. The expression “Common Era” can be found as early as 1708 in English and traced back to Latin usage among European Christians to 1615, as vulgaris aerae, and to 1635 in English as Vulgar Era.

Since the later 20th century, the use of CE and BCE has been popularized in academic and scientific publications, and more generally by authors and publishers wishing to emphasize secularism or sensitivity to non-Christians, because the system does not explicitly make use of religious titles for Jesus, such as “Christ” and Dominus (“Lord”), which are used in the BC/AD notation, nor does it give implicit expression to the Christian creed that Jesus is the Christ. While both systems are thus an accepted standard, the CE/BCE system is more neutral and inclusive of a non-Christian perspective.
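Because the AD/BC and CE/BCE schemes have no year zero, naive subtraction across the era boundary is off by one. A minimal sketch, assuming astronomical year numbering (a convention not discussed in this text, in which 1 BC is year 0 and 2 BC is year -1), shows the adjustment and confirms that the two notations share the same numbers. The helper names are hypothetical.

```python
def to_astronomical(year: int, era: str) -> int:
    """Map an AD/CE or BC/BCE year onto a continuous integer scale.

    Assumes astronomical year numbering: 1 BC = 0, 2 BC = -1, and so on.
    """
    if year < 1:
        raise ValueError("there is no year zero in AD/BC or CE/BCE")
    if era in ("AD", "CE"):
        return year
    if era in ("BC", "BCE"):
        return 1 - year
    raise ValueError(f"unknown era label: {era}")


def years_elapsed(start: tuple[int, str], end: tuple[int, str]) -> int:
    """Whole years from the start year to the end year."""
    return to_astronomical(*end) - to_astronomical(*start)


# AD/BC and CE/BCE labels are numerically identical
print(to_astronomical(45, "BC") == to_astronomical(45, "BCE"))  # True

print(years_elapsed((1, "BC"), (1, "AD")))    # 1: AD 1 directly follows 1 BC
print(years_elapsed((45, "BCE"), (4, "CE")))  # 48, not 49
```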

1.1.3: The Imperfect Historical Record

While some primary sources are considered more reliable or trustworthy than others, hardly any historical evidence can be seen as fully objective since it is always a product of particular individuals, times, and dominant ideas.

Learning Objective

Explain the consequences of the imperfect historical record

Key Points

- In the study of history as an academic discipline, a primary source is an artifact, document, diary, manuscript, autobiography, recording, or other source of information that was created at the time under study.

- History as an academic discipline is based on primary sources, as evaluated by the community of scholars for whom primary sources are absolutely fundamental to reconstructing the past. Ideally, a historian will use as many primary sources created during the time under study as can be accessed. In practice, however, some sources have been destroyed, while others are not available for research.

- While some sources are considered more reliable or trustworthy than others, historians point out that hardly any historical evidence can be seen as fully objective since it is always a product of particular individuals, times, and dominant ideas.

- Historical method comprises the techniques and guidelines by which historians use primary sources and other evidence (including the evidence of archaeology) to research and write historical accounts of the past.

- Primary sources may remain in private hands or be located in archives, libraries, museums, historical societies, and special collections. Traditionally, historians attempt to answer historical questions through the study of written documents and oral accounts. They also use such sources as monuments, inscriptions, and pictures. In general, the sources of historical knowledge can be separated into three categories: what is written, what is said, and what is physically preserved. Historians often consult all three.

- Historians use various strategies to reconstruct the past when facing a lack of sources, including collaborating with experts from other academic disciplines, most notably archaeology.

Key Terms

- historical method: A scholarly method that comprises the techniques and guidelines by which historians use primary sources and other evidence (including the evidence of archaeology) to research and write historical accounts of the past.

- primary source: In the study of history as an academic discipline, an artifact, document, diary, manuscript, autobiography, recording, or other source of information that was created at the time under study. It serves as an original source of information about the topic.

- secondary source: A document or recording that relates or discusses information originally found in a primary source. It contrasts with a primary source, which is an original source of the information being discussed; a primary source can be a person with direct knowledge of a situation, or a document created by such a person. A secondary source involves generalization, analysis, synthesis, interpretation, or evaluation of the original information.

Primary Sources

In the study of history as an academic discipline, a primary source (also called original source or evidence) is an artifact, document, diary, manuscript, autobiography, recording, or other source of information that was created at the time under study. It serves as an original source of information about the topic. Primary sources are distinguished from secondary sources, which cite, comment on, or build upon primary sources. In some cases, a secondary source may also be a primary source, depending on how it is used. For example, a memoir would be considered a primary source in research concerning its author or about his or her friends characterized within it, but the same memoir would be a secondary source if it were used to examine the culture in which its author lived. “Primary” and “secondary” should be understood as relative terms, with sources categorized according to specific historical contexts and what is being studied.

Using Primary Sources: Historical Method

History as an academic discipline is based on primary sources, as evaluated by the community of scholars for whom primary sources are absolutely fundamental to reconstructing the past. Ideally, a historian will use as many primary sources created by the people involved at the time under study as can be accessed. In practice, however, some sources have been destroyed, while others are not available for research. In some cases, the only eyewitness reports of an event may be memoirs, autobiographies, or oral interviews taken years later. Sometimes, the only evidence relating to an event or person in the distant past was written or copied decades or centuries later. Manuscripts that are sources for classical texts can be copies or fragments of documents. This is a common problem in classical studies, where sometimes only a summary of a book or letter, but not the actual book or letter, has survived.

While some sources are considered more reliable or trustworthy than others (e.g., an original government document containing information about an event vs. a recording of a witness recalling the same event years later), historians point out that hardly any historical evidence can be seen as fully objective, as it is always a product of particular individuals, times, and dominant ideas. This is also why researchers try to find as many records of an event under investigation as possible and attempt to reconcile evidence that may present contradictory accounts of the same events.

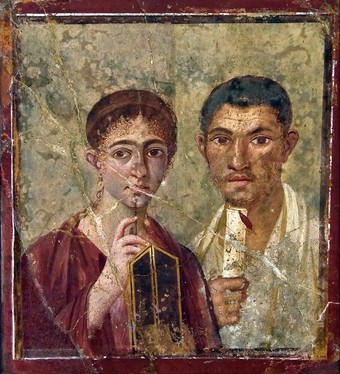

This wall painting (known as The portrait of Paquius Proculo and currently preserved at the Naples National Archaeological Museum) was found in the Roman city of Pompeii and serves as a complex example of a primary source.

The fresco would not tell much to historians without corresponding textual and archaeological evidence that helps to establish who the portrayed couple might have been.

The man wears a toga, the mark of a Roman citizen, and holds a rotulus, suggesting he is involved in public and/or cultural affairs. The woman holds a stylus and wax tablet, emphasizing that she is educated and literate. It is suspected, based on the physical features of the couple, that they are Samnites, which may explain the desire to show off the status they have reached in Roman society.

Historical method comprises the techniques and guidelines by which historians use primary sources and other evidence (including the evidence of archaeology) to research and write historical accounts of the past. Historians continue to debate what aspects and practices of investigating primary sources should be considered, and what constitutes a primary source, when developing the most effective historical method. The question of the nature, and even the possibility, of a sound historical method is so central that it has been continuously raised in the philosophy of history as a question of epistemology.

Finding Primary Sources

Primary sources may remain in private hands or be located in archives, libraries, museums, historical societies, and special collections. These institutions can be public or private; some are affiliated with universities and colleges, while others are government entities. Materials relating to one area might be spread over a large number of different institutions, which can be far from where the documents originated. For example, the Huntington Library in California houses a large number of documents from the United Kingdom. While the development of technology has resulted in an increasing number of digitized sources, most primary source materials are not digitized and may only be represented online with a record or finding aid.

Traditionally, historians attempt to answer historical questions through the study of written documents and oral accounts. They also use such sources as monuments, inscriptions, and pictures. In general, the sources of historical knowledge can be separated into three categories: what is written, what is said, and what is physically preserved. Historians often consult all three. However, writing is the marker that separates history from what came before it.

Archaeology is one discipline that is especially helpful to historians. By dealing with buried sites and objects, it contributes to the reconstruction of the past. However, archaeology is constituted by a range of methodologies and approaches that are independent from history. In other words, archaeology does not simply "fill the gaps" within textual sources; it often contrasts its conclusions with those of contemporary textual sources.

Archaeology also provides an illustrative example of how historians can be helped when written records are missing. Unearthing artifacts and working with archaeologists to interpret them, based on expertise in a particular historical era and cultural or geographical area, is one effective way to reconstruct the past. If written records are missing, historians often attempt to collect oral accounts of particular events, preferably from eyewitnesses, but sometimes, because of the passage of time, they are forced to work with accounts from later generations. For this reason, the reliability of oral history has been widely debated.

When dealing with many government records, historians usually have to wait a specific period of time before documents are declassified and made available to researchers. For political reasons, many sensitive records may be destroyed, withdrawn from collections, or hidden, which may also encourage researchers to rely on oral histories. Missing records of events, or of processes that historians believe took place based on very fragmentary evidence, force historians to seek information in records that may not be likely sources of information. As archival research is always time-consuming and labor-intensive, this approach poses the risk of never producing the desired results, despite the time and effort invested in finding informative and reliable resources. In some cases, historians are forced to speculate (and should note this explicitly) or simply admit that they do not have sufficient information to reconstruct particular past events or processes.

1.1.4: Historical Bias

Biases have been part of historical investigation since the ancient beginnings of the discipline. While more recent scholarly practices attempt to remove earlier biases from history, no piece of historical scholarship can be fully free of biases.

Learning Objective

Identify some examples of historical bias

Key Points

- Regardless of whether they are conscious or learned implicitly within cultural contexts, biases have been part of historical investigation since the ancient beginnings of the discipline. As such, history provides an excellent example of how biases change, evolve, and even disappear.

- Early attempts to make history an empirical, objective discipline (most notably by Voltaire) did not find many followers. Throughout the 18th and 19th centuries, European historians only strengthened their biases. As Europe gradually dominated the world through its self-imposed mission to colonize nearly all the other continents, Eurocentrism prevailed in history.

- Even within the Eurocentric perspective, not all Europeans were equal; Western historians largely ignored aspects of history such as class, gender, and ethnicity. Until the rapid development of social history in the 1960s and 1970s, mainstream Western historical narratives focused on political and military history, while cultural or social history was written mostly from the perspective of the elites.

- The biased approach to history-writing also carried over into history-teaching. Since the origins of national mass schooling systems in the 19th century, teaching history to promote national sentiment has been a high priority. History textbooks in most countries have been tools to foster nationalism and patriotism and to promote the most favorable version of national history.

- Germany attempts to serve as an example of how to remove nationalistic narratives from history education. The history curriculum in Germany is characterized by a transnational perspective that emphasizes the all-European heritage, minimizes the idea of national pride, and fosters the notion of civil society centered on democracy, human rights, and peace.

- Despite progress and an increased focus on groups that have traditionally been excluded from mainstream historical narratives (people of color, women, the working class, the poor, the disabled, LGBTQI-identified people, etc.), bias remains a component of historical investigation.

Key Term

- Eurocentrism

-

The practice of viewing the world from a European or generally Western perspective with an implied belief in the pre-eminence of Western culture. It may also be used to describe a view centered on the history or eminence of white people. The term was coined in the 1980s, referring to the notion of European exceptionalism and other Western equivalents, such as American exceptionalism.

Bias in Historical Writing

Bias is an inclination or outlook to present or hold a partial perspective, often accompanied by a refusal to consider the possible merits of alternative points of view. Regardless of whether they are conscious or learned implicitly within cultural contexts, biases have been part of historical investigation since the ancient beginnings of the discipline. As such, history provides an excellent example of how biases change, evolve, and even disappear.

History as a modern academic discipline based on empirical methods (in this case, studying primary sources in order to reconstruct the past based on available evidence) rose to prominence during the Age of Enlightenment. Voltaire, a French author and thinker, is credited with developing a fresh outlook on history that broke from the tradition of narrating diplomatic and military events and emphasized customs, social history (the history of ordinary people), and achievements in the arts and sciences. His Essay on Customs traced the progress of world civilization in a universal context, thereby rejecting both nationalism and the traditional Christian frame of reference. Voltaire was also the first scholar to make a serious attempt to write the history of the world, eliminating theological frameworks and emphasizing economics, culture, and political history. He was the first to emphasize the debt of medieval culture to Middle Eastern civilization. Although he repeatedly warned against political bias on the part of the historian, he missed few opportunities to expose the intolerance and frauds of the Catholic Church over the ages, a topic that was Voltaire’s lifelong intellectual interest.

Voltaire’s early attempts to make history an empirical, objective discipline did not find many followers. Throughout the 18th and 19th centuries, European historians only strengthened their biases. As Europe gradually benefited from ongoing scientific progress and dominated the world through its self-imposed mission to colonize nearly all other continents, Eurocentrism prevailed in history. The practice of viewing and presenting the world from a European or generally Western perspective, with an implied belief in the pre-eminence of Western culture, dominated among European historians, who contrasted the progressively mechanized character of European culture with the traditional hunting, farming, and herding societies in many of the areas of the world being newly conquered and colonized. These included the Americas, Asia, Africa, and, later, the Pacific and Australasia. Many European writers of this time construed the history of Europe as paradigmatic for the rest of the world. They arranged societies along a sequence of stages (primitive hunter-gatherer, farming, early civilization, feudalism, and modern liberal capitalism) and identified other cultures as having reached a stage that Europe itself had already passed. Only Europe was considered to have achieved the last stage. With this assumption, Europeans were also presented as racially superior, and European history as a discipline became essentially the history of the dominance of white peoples.

However, even within the Eurocentric perspective, not all Europeans were equal; Western historians largely ignored aspects of history such as class, gender, and ethnicity. Until relatively recently (specifically, until the rapid development of social history in the 1960s and 1970s), mainstream Western historical narratives focused on political and military history, while cultural or social history was written mostly from the perspective of the elites. Consequently, what was in fact the experience of a select few (usually upper-class white men, with occasional mentions of their female counterparts) was typically presented as representative of the entire society. In the United States, some of the first scholars to break with this approach were African American historians who, at the turn of the 20th century, wrote histories of black Americans and called for their inclusion in the mainstream historical narrative.

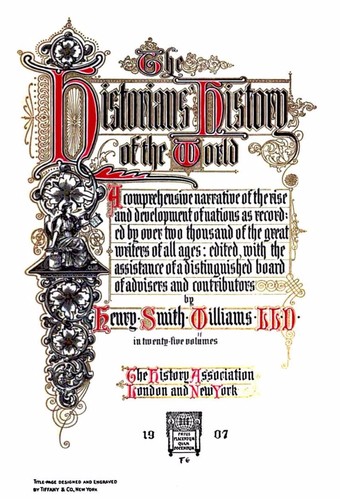

The title page of The Historians’ History of the World: A Comprehensive Narrative of the Rise and Development of Nations as Recorded by over two thousand of the Great Writers of all Ages, 1907.

The Historians’ History of the World is a 25-volume encyclopedia of world history originally published in English near the beginning of the 20th century. It is quite extensive, but its perspective is entirely Western and Eurocentric. For example, while four volumes focus on the history of England (with Scotland and Ireland included in one of them), “Poland, the Balkans, Turkey, minor Eastern states, China, Japan” are all described in a single volume. It was compiled by Henry Smith Williams, a medical doctor and author, together with other authorities on history, and published in New York in 1902 by Encyclopædia Britannica and the Outlook Company.

Bias in the Teaching of History

The biased approach to historical writing is present in the teaching of history as well.

Since the origins of national mass schooling systems in the 19th century, teaching history to promote national sentiment has been a high priority. To this day, history textbooks in most countries are tools to foster nationalism and patriotism and to promote the most favorable version of national history. In the United States, one of the most striking examples of this approach is the persistent narrative of the United States as a state founded on the principles of personal liberty and democracy. Although aspects of U.S. history such as slavery, the genocide of American Indians, and the disfranchisement of large segments of society for decades after the founding of the United States are now taught in most (though not all) American schools, they are presented as marginal to the larger narrative of liberty and democracy.

In many countries, history textbooks are sponsored by the national government and written to put the national heritage in the most favorable light, although academic historians have often fought against the politicization of textbooks, sometimes with success. Notably, 21st-century Germany attempts to serve as an example of how to remove nationalistic narratives from history education. Because the 20th-century history of Germany is filled with events and processes that are rarely a cause of national pride, the history curriculum in Germany (controlled by the 16 German states) is characterized by a transnational perspective that emphasizes the all-European heritage, minimizes the idea of national pride, and fosters the notion of civil society centered on democracy, human rights, and peace. Yet even in this rather unusual German case, Eurocentrism continues to dominate.

The challenge to replace national, or even nationalist, perspectives with a more inclusive transnational or global view of human history is also still very present in college-level history curricula.

In the United States after World War I, a strong movement emerged at the university level to teach courses in Western Civilization, with the aim of giving students a common heritage with Europe. After 1980, attention increasingly moved toward teaching world history or requiring students to take courses in non-Western cultures. Yet world history courses still struggle to move beyond the Eurocentric perspective, focusing heavily on the history of Europe and its links to the United States.

Despite all the progress and the much greater focus on groups that have traditionally been excluded from mainstream historical narratives (people of color, women, the working class, the poor, the disabled, LGBTQI-identified people, etc.), bias remains a component of historical investigation, whether it is a product of nationalism, an author’s political views, or an agenda-driven interpretation of sources. It is only appropriate to note that this world history book, while written in accordance with the most recent scholarly and educational practices, has been written and edited by authors trained in American universities and published in the United States. As such, it is not free from either national (U.S.) or individual (authors’) biases.

1.2: Precursors to Civilization

1.2.1: The Evolution of Humans

Human evolution is an ongoing and complex process that began seven million years ago.

Learning Objective

To understand the process and timeline of human evolution

Key Points

- Humans began to evolve about seven million years ago, and progressed through four stages of evolution. Research shows that the first modern humans appeared 200,000 years ago.

- Neanderthals were a separate species from modern humans. Although they had a larger brain capacity and interbred with modern humans, they eventually died out.

- A number of theories examine the relationship between environmental conditions and human evolution.

- The main human adaptations have included bipedalism, larger brain size, and reduced sexual dimorphism.

Key Terms

- aridity hypothesis

-

The theory that the savannah was expanding due to increasingly arid conditions, which then drove hominin adaptation.

- turnover pulse hypothesis

-

The theory that extinctions due to environmental conditions hurt specialist species more than generalist ones, leading to greater evolution among specialists.

- Red Queen hypothesis

-

The theory that species must constantly evolve in order to compete with co-evolving animals around them.

- encephalization

-

An evolutionary increase in the complexity and/or size of the brain.

- sexual dimorphism

-

Differences in size or appearance between the sexes of an animal species.

- social brain hypothesis

-

The theory that improving cognitive capabilities would allow hominins to influence local groups and control resources.

- Toba catastrophe theory

-

The theory that there was a near-extinction event for early humans about 70,000 years ago.

- savannah hypothesis

-

The theory that hominins were forced out of the trees they lived in and onto the expanding savannah; as they did so, they began walking upright on two feet.

- hominids

-

A primate of the family Hominidae that includes humans and their fossil ancestors.

- bipedal

-

Describing an animal that uses only two legs for walking.

Human evolution began with primates. Primate development diverged from that of other mammals about 85 million years ago. Various divergences among the apes, including the gibbon and orangutan lineages, followed, with Homini (including early humans and chimpanzees) separating from Gorillini (gorillas) about 8 million years ago. Humans and chimpanzees then separated about 7.5 million years ago.

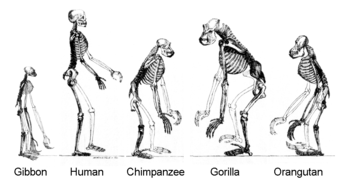

Skeletal structure of humans and other primates.

A comparison of the skeletal structures of gibbons, humans, chimpanzees, gorillas and orangutans.

Generally, it is believed that hominids first evolved in Africa and then migrated to other areas. There were four main stages of human evolution. The first, between four and seven million years ago, consisted of the proto-hominins Sahelanthropus, Orrorin, and Ardipithecus. These early hominins may have been bipedal, meaning they walked upright on two legs. The second stage, around four million years ago, was marked by the appearance of Australopithecus, and the third, around 2.7 million years ago, featured Paranthropus.

The fourth stage features the genus Homo, which appeared between about 2.5 and 1.8 million years ago. Homo habilis, which used stone tools and had a brain about the size of a chimpanzee's, was an early hominin in this period. Coordinating the fine hand movements needed for tool use may have led to increasing brain capacity. This was followed by Homo erectus and Homo ergaster, which had roughly double the brain size and may have been the first to control fire and use more complex tools. Homo heidelbergensis appeared about 800,000 years ago, and modern humans, Homo sapiens, about 200,000 years ago. Humans acquired symbolic culture and language about 50,000 years ago.

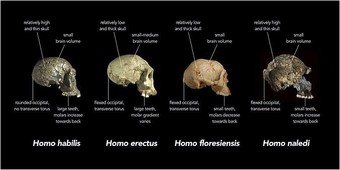

Comparison of skull features among early humans.

A comparison of Homo habilis, Homo erectus, Homo floresiensis and Homo naledi skull features.

Neanderthals

A separate species, Homo neanderthalensis, shared a common ancestor with modern humans about 660,000 years ago and interbred with Homo sapiens between about 80,000 and 45,000 years ago. Although their brains were larger, Neanderthals had fewer social and technological innovations than modern humans, and they eventually died out.

Theories of Early Human Evolution

The savannah hypothesis states that hominins were forced out of the trees they lived in and onto the expanding savannah; as they did so, they began walking upright on two feet. This idea was expanded in the aridity hypothesis, which posited that the savannah was expanding because of increasingly arid conditions, and that this expansion drove hominin adaptation. On this view, periods of intense aridification also pushed hominins to evolve and adapt.

The turnover pulse hypothesis states that extinctions due to environmental conditions hurt specialist species more than generalist ones. While generalist species spread out when environmental conditions change, specialist species become more specialized and have a greater rate of evolution. The Red Queen hypothesis states that species must constantly evolve in order to compete with co-evolving animals around them. The social brain hypothesis states that improving cognitive capabilities would allow hominins to influence local groups and control resources. The Toba catastrophe theory states that there was a near-extinction event for early humans about 70,000 years ago.

Human Adaptations

Bipedalism, or walking upright, is one of the main human evolutionary adaptations. Its advantages include freeing the hands for labor and making movement less physically taxing. Walking upright also favors long-distance travel and hunting, offers a wider field of vision, reduces the amount of skin exposed to the sun, and is overall well suited to a savannah environment. Bipedalism resulted in skeletal changes to the legs, knee and ankle joints, spinal vertebrae, toes, and arms. Most significantly, the pelvis became shorter and more rounded, with a smaller birth canal, making birth more difficult for humans than for other primates. In turn, this resulted in shorter gestation (as babies need to be born before their heads become too large) and more helpless infants who are not fully developed at birth.

Larger brain size, also called encephalization, began in early humans with Homo habilis and continued through the Neanderthal line (capacity of 1,200 to 1,900 cm³). The ability of the human brain to continue growing after birth made social learning and language possible. A diet that emphasized meat, together with cooking, may have allowed for brain growth. Modern humans have a brain volume of about 1,250 cm³.

Humans have reduced sexual dimorphism, or differences between males and females, and hidden estrus, which means that females can conceive at any time of year and show no obvious outward sign of fertility. The sexes still differ somewhat, with males being slightly larger and having more body hair and less body fat. These changes may be related to pair bonding for the long-term raising of offspring.

Other adaptations include lessening of body hair, a chin, a descended larynx, and an emphasis on vision instead of smell.

1.2.2: The Neolithic Revolution

The Neolithic Revolution and invention of agriculture allowed humans to settle in groups, specialize, and develop civilizations.

Learning Objective

Explain the significance of the Neolithic Revolution

Key Points

- During the Paleolithic Era, humans grouped together in small societies and subsisted by gathering plants and by fishing, hunting, or scavenging wild animals.

- The Neolithic Revolution refers to a change from a largely nomadic hunter-gatherer way of life to a more settled, agrarian-based one, with the inception of the domestication of various plant and animal species, depending on the species locally available and likely also influenced by local culture.

- There are several competing (but not mutually exclusive) theories as to the factors that drove populations to take up agriculture, including the Hilly Flanks hypothesis, the Feasting model, the Demographic theories, the evolutionary/intentionality theory, and the largely discredited Oasis Theory.

- The shift to agricultural food production supported a denser population, which in turn supported larger sedentary communities, the accumulation of goods and tools, and specialization in diverse forms of new labor.

- The nutritional standards of Neolithic populations were generally inferior to those of hunter-gatherers, and Neolithic people worked longer hours and had shorter life expectancies.

- Life today, including our governments, specialized labor, and trade, is directly related to the advances made in the Neolithic Revolution.

Key Terms

- Demographic theories

-

Theories about how sedentary populations may have driven agricultural changes.

- specialization

-

A process in which laborers focus on one specialty area rather than producing all needed items themselves.

- Feasting model

-

The theory that displays of power through feasting drove agricultural technology.

- Oasis Theory

-

The theory that humans were forced into close association with animals due to changes in climate.

- Paleolithic Era

-

A period of prehistory that spans from about 2.5 million years ago to about 10,000 BCE, during which time humans evolved, used stone tools, and lived as hunter-gatherers.

- Hunter-gatherer

-

A nomadic lifestyle in which food is obtained from wild plants and animals; in contrast to an agricultural lifestyle, which relies mainly on domesticated species.

- Neolithic Revolution

-

The world’s first historically verifiable advancement in agriculture. It took place around 12,000 years ago.

- Evolutionary/Intentionality theory

-

The theory that domestication was part of an evolutionary process between humans and plants.

- Hilly Flanks hypothesis

-

The theory that agriculture began in the hilly flanks of the Taurus and Zagros mountains, where the climate was not drier, and fertile land supported a variety of plants and animals amenable to domestication.

Before the Rise of Civilization: The Paleolithic Era

The first humans evolved in Africa during the Paleolithic Era, or Stone Age, which spans the period from about 2.5 million years ago to about 10,000 BCE. During this time, humans lived in small groups as hunter-gatherers, with clear gender divisions of labor. The men hunted animals while the women gathered food, such as fruit, nuts, and berries, from the local area. Simple tools made of stone, wood, and bone (such as hand axes, flints, and spearheads) were used throughout the period. Humans also learned to control fire, which provided heat and light and allowed for cooking.

Humankind gradually evolved from early members of the genus Homo (such as Homo habilis, who used simple stone tools) into fully behaviorally and anatomically modern humans (Homo sapiens) during the Paleolithic era. Toward the end of the Paleolithic, specifically during the Middle and/or Upper Paleolithic, humans began to produce the earliest works of art and to engage in religious and spiritual behavior, such as burial and ritual. Paleolithic humans were nomads who often moved their settlements as food became scarce. This eventually resulted in humans spreading out from Africa (beginning roughly 60,000 years ago) into Eurasia, Southeast Asia, and Australia. By about 40,000 years ago they had entered Europe, and by about 15,000 years ago they had reached North America, followed by South America.

Stone ball from a set of Paleolithic bolas

Paleoliths (artifacts from the Paleolithic), such as this stone ball, demonstrate some of the stone technologies that the early humans used as tools and weapons.

Around 10,000 BCE, a major change occurred in the way humans lived; it would have a cascading effect on every part of human society and culture. That change was the Neolithic Revolution.

The Neolithic Revolution: From Hunter-Gatherer to Agriculturalist

The beginning of the Neolithic Revolution in different regions has been dated from perhaps 8,000 BCE at the Kuk Early Agricultural Site in Melanesia to 2,500 BCE in sub-Saharan Africa, with some considering the developments of 9,000 to 7,000 BCE in the Fertile Crescent to be the most important. Everywhere, this transition is associated with the change from a largely nomadic hunter-gatherer way of life to a more settled, agrarian-based one, due to the inception of the domestication of various plant and animal species, depending on the species locally available and probably also influenced by local culture.

It is not known why humans decided to begin cultivating plants and domesticating animals. Although farming was more labor-intensive, people must have seen the relationship between the cultivation of grains and an increase in population. The domestication of animals provided a new source of protein, through meat and milk, along with hides and wool, which allowed for the production of clothing and other objects.

There are several competing (but not mutually exclusive) theories about the factors that drove populations to take up agriculture. The most prominent of these are:

- The Oasis Theory, originally proposed by Raphael Pumpelly in 1908 and popularized by V. Gordon Childe in 1928, suggests that as the climate became drier because Atlantic depressions shifted northward, communities contracted to oases, where they were forced into close association with animals. These animals were then domesticated, together with the planting of seeds. However, this theory has little support among archaeologists today because subsequent climate data suggest that the region was getting wetter rather than drier.

- The Hilly Flanks hypothesis, proposed by Robert Braidwood in 1948, suggests that agriculture began in the hilly flanks of the Taurus and Zagros mountains, where the climate was not drier, as Childe had believed, and that fertile land supported a variety of plants and animals amenable to domestication.

- The Feasting model by Brian Hayden suggests that agriculture was driven by ostentatious displays of power, such as giving feasts, to exert dominance. This system required assembling large quantities of food, a demand which drove agricultural technology.

- The Demographic theories proposed by Carl Sauer and adapted by Lewis Binford and Kent Flannery posit that an increasingly sedentary population outgrew the resources in the local environment and required more food than could be gathered. Various social and economic factors helped drive the need for food.

- The Evolutionary/Intentionality theory, developed by David Rindos and others, views agriculture as an evolutionary adaptation of plants and humans. Starting with domestication by protection of wild plants, it led to specialization of location and then full-fledged domestication.

Effects of the Neolithic Revolution on Society

The traditional view is that the shift to agricultural food production supported a denser population, which in turn supported larger sedentary communities, the accumulation of goods and tools, and specialization in diverse forms of new labor. Overall, a population could grow more rapidly when resources were more available. The resulting larger societies developed new means of decision-making and governmental organization. Food surpluses made possible the development of a social elite freed from labor, who dominated their communities and monopolized decision-making. There were deep social divisions and inequality between the sexes, with women’s status declining as men took on greater roles as leaders and warriors. Social class was determined by occupation, with farmers and craftsmen at the lower end and priests and warriors at the higher.

Effects of the Neolithic Revolution on Health

Neolithic populations generally had poorer nutrition, shorter life expectancies, and a more labor-intensive lifestyle than hunter-gatherers. Diseases jumped from animals to humans, and agriculturalists suffered from more anaemia, vitamin deficiencies, spinal deformations, and dental pathologies.

Overall Impact of the Neolithic Revolution on Modern Life

The way we live today is directly related to the advances made in the Neolithic Revolution. From the governments we live under, to the specialized work laborers do, to the trade of goods and food, humans were irrevocably changed by the switch to sedentary agriculture and the domestication of animals. The human population has swelled from an estimated five million at that time to seven billion today.