12.1: Estimation

12.1.1: Estimation

Estimating population parameters from sample parameters is one of the major applications of inferential statistics.

Learning Objective

Describe how to estimate population parameters with consideration of error

Key Points

- Seldom is the sample statistic exactly equal to the population parameter, so a range of likely values, or an estimate interval, is often given.

- Error is defined as the difference between the population parameter and the sample statistics.

- Bias (or systematic error) leads to a sample mean that is either lower or higher than the true mean.

- Mean-squared error is used to indicate how far, on average, the collection of estimates are from the parameter being estimated.

- Mean-squared error is used to indicate how far, on average, the collection of estimates are from the parameter being estimated.

Key Terms

- interval estimate

-

A range of values used to estimate a population parameter.

- error

-

The difference between the population parameter and the calculated sample statistics.

- point estimate

-

a single value estimate for a population parameter

One of the major applications of statistics is estimating population parameters from sample statistics. For example, a poll may seek to estimate the proportion of adult residents of a city that support a proposition to build a new sports stadium. Out of a random sample of 200 people, 106 say they support the proposition. Thus in the sample, 0.53 (

) of the people supported the proposition. This value of 0.53 (or 53%) is called a point estimate of the population proportion. It is called a point estimate because the estimate consists of a single value or point.

It is rare that the actual population parameter would equal the sample statistic. In our example, it is unlikely that, if we polled the entire adult population of the city, exactly 53% of the population would be in favor of the proposition. Instead, we use confidence intervals to provide a range of likely values for the parameter.

For this reason, point estimates are usually supplemented by interval estimates or confidence intervals. Confidence intervals are intervals constructed using a method that contains the population parameter a specified proportion of the time. For example, if the pollster used a method that contains the parameter 95% of the time it is used, he or she would arrive at the following 95% confidence interval:

. The pollster would then conclude that somewhere between 46% and 60% of the population supports the proposal. The media usually reports this type of result by saying that 53% favor the proposition with a margin of error of 7%.

Error and Bias

Assume that

(the Greek letter “theta”) is the value of the population parameter we are interested in. In statistics, we would represent the estimate as

(read theta-hat). We know that the estimate

would rarely equal the actual population parameter

. There is some level of error associated with it. We define this error as

.

All measurements have some error associated with them. Random errors occur in all data sets and are sometimes known as non-systematic errors. Random errors can arise from estimation of data values, imprecision of instruments, etc. For example, if you are reading lengths off a ruler, random errors will arise in each measurement as a result of estimating between which two lines the length lies. Bias is sometimes known as systematic error. Bias in a data set occurs when a value is consistently under or overestimated. Bias can also arise from forgetting to take into account a correction factor or from instruments that are not properly calibrated. Bias leads to a sample mean that is either lower or higher than the true mean .

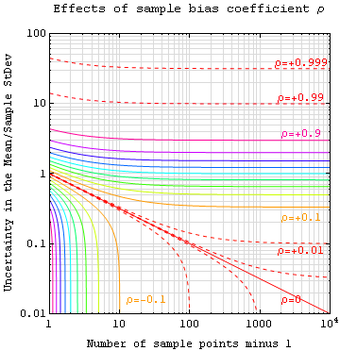

Sample Bias Coefficient

An estimate of expected error in the sample mean of variable

, sampled at

locations in a parameter space

, can be expressed in terms of sample bias coefficient

— defined as the average auto-correlation coefficient over all sample point pairs. This generalized error in the mean is the square root of the sample variance (treated as a population) times

. The

line is the more familiar standard error in the mean for samples that are uncorrelated.

Mean-Squared Error

The mean squared error (MSE) of

is defined as the expected value of the squared errors. It is used to indicate how far, on average, the collection of estimates are from the single parameter being estimated

. Suppose the parameter is the bull’s-eye of a target, the estimator is the process of shooting arrows at the target, and the individual arrows are estimates (samples). In this case, high MSE means the average distance of the arrows from the bull’s-eye is high, and low MSE means the average distance from the bull’s-eye is low. The arrows may or may not be clustered. For example, even if all arrows hit the same point, yet grossly miss the target, the MSE is still relatively large. However, if the MSE is relatively low, then the arrows are likely more highly clustered (than highly dispersed).

12.1.2: Estimates and Sample Size

Here, we present how to calculate the minimum sample size needed to estimate a population mean (

) and population proportion (

).

Learning Objective

Calculate sample size required to estimate the population mean

Key Points

- Before beginning a study, it is important to determine the minimum sample size, taking into consideration the desired level of confidence, the margin of error, and a previously observed sample standard deviation.

- When

, the sample standard deviation (

) can be used in place of the population standard deviation (

). - The minimum sample size

needed to estimate the population mean (

) is calculated using the formula:

.

. - The minimum sample size

needed to estimate the population proportion (

) is calculated using the formula:

.

Key Term

- margin of error

-

An expression of the lack of precision in the results obtained from a sample.

Determining Sample Size Required to Estimate the Population Mean (

)

Before calculating a point estimate and creating a confidence interval, a sample must be taken. Often, the number of data values needed in a sample to obtain a particular level of confidence within a given error needs to be determined before taking the sample. If the sample is too small, the result may not be useful, and if the sample is too big, both time and money are wasted in the sampling. The following text discusses how to determine the minimum sample size needed to make an estimate given the desired confidence level and the observed standard deviation.

First, consider the margin of error,

, the greatest possible distance between the point estimate and the value of the parameter it is estimating. To calculate

, we need to know the desired confidence level (

) and the population standard deviation,

. When

, the sample standard deviation (

) can be used to approximate the population standard deviation

.

To change the size of the error (

), two variables in the formula could be changed: the level of confidence (

) or the sample size (

). The standard deviation (

) is a given and cannot change.

As the confidence increases, the margin of error (

) increases. To ensure that the margin of error is small, the confidence level would have to decrease. Hence, changing the confidence to lower the error is not a practical solution.

As the sample size (

) increases, the margin of error decreases. The question now becomes: how large a sample is needed for a particular error? To determine this, begin by solving the equation for the

in terms of

:

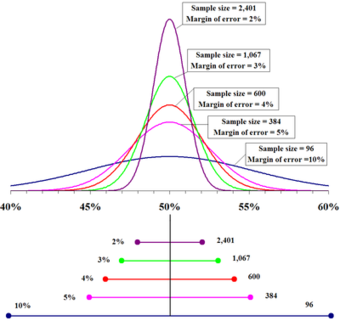

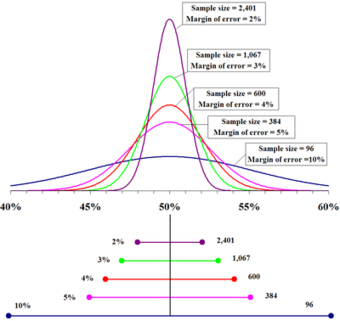

Sample size compared to margin of error

The top portion of this graphic depicts probability densities that show the relative likelihood that the “true” percentage is in a particular area given a reported percentage of 50%. The bottom portion shows the 95% confidence intervals (horizontal line segments), the corresponding margins of error (on the left), and sample sizes (on the right). In other words, for each sample size, one is 95% confident that the “true” percentage is in the region indicated by the corresponding segment. The larger the sample is, the smaller the margin of error is.

where

is the critical

score based on the desired confidence level,

is the desired margin of error, and

is the population standard deviation.

Since the population standard deviation is often unknown, the sample standard deviation from a previous sample of size

may be used as an approximation to

. Now, we can solve for

to see what would be an appropriate sample size to achieve our goals. Note that the value found by using the formula for sample size is generally not a whole number. Since the sample size must be a whole number, always round up to the next larger whole number.

Example

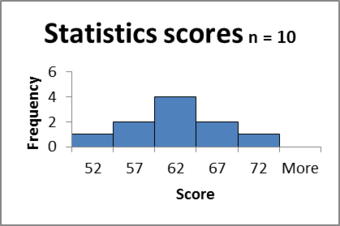

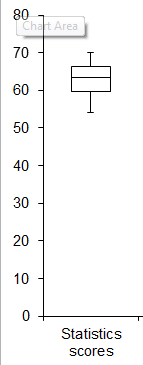

Suppose the scores on a statistics final are normally distributed with a standard deviation of 10 points. Construct a 95% confidence interval with an error of no more than 2 points.

Solution

So, a sample of size of 68 must be taken to create a 95% confidence interval with an error of no more than 2 points.

Determining Sample Size Required to Estimate Population Proportion (

)

The calculations for determining sample size to estimate a proportion (

) are similar to those for estimating a mean (

). In this case, the margin of error,

, is found using the formula:

where:

-

is the point estimate for the population proportion -

is the number of successes in the sample -

is the number in the sample; and

Then, solving for the minimum sample size

needed to estimate :

Example

The Mesa College mathematics department has noticed that a number of students place in a non-transfer level course and only need a 6 week refresher rather than an entire semester long course. If it is thought that about 10% of the students fall in this category, how many must the department survey if they wish to be 95% certain that the true population proportion is within

?

Solution

So, a sample of size of 139 must be taken to create a 95% confidence interval with an error of

.

12.1.3: Estimating the Target Parameter: Point Estimation

Point estimation involves the use of sample data to calculate a single value which serves as the “best estimate” of an unknown population parameter.

Learning Objective

Contrast why MLE and linear least squares are popular methods for estimating parameters

Key Points

- In inferential statistics, data from a sample is used to “estimate” or “guess” information about the data from a population.

- The most unbiased point estimate of a population mean is the sample mean.

- Maximum-likelihood estimation uses the mean and variance as parameters and finds parametric values that make the observed results the most probable.

- Linear least squares is an approach fitting a statistical model to data in cases where the desired value provided by the model for any data point is expressed linearly in terms of the unknown parameters of the model (as in regression).

Key Term

- point estimate

-

a single value estimate for a population parameter

In inferential statistics, data from a sample is used to “estimate” or “guess” information about the data from a population. Point estimation involves the use of sample data to calculate a single value or point (known as a statistic) which serves as the “best estimate” of an unknown population parameter. The point estimate of the mean is a single value estimate for a population parameter. The most unbiased point estimate of a population mean (µ) is the sample mean (

).

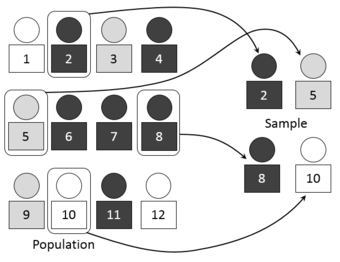

Simple random sampling of a population

We use point estimators, such as the sample mean, to estimate or guess information about the data from a population. This image visually represents the process of selecting random number-assigned members of a larger group of people to represent that larger group.

Maximum Likelihood

A popular method of estimating the parameters of a statistical model is maximum-likelihood estimation (MLE). When applied to a data set and given a statistical model, maximum-likelihood estimation provides estimates for the model’s parameters. The method of maximum likelihood corresponds to many well-known estimation methods in statistics. For example, one may be interested in the heights of adult female penguins, but be unable to measure the height of every single penguin in a population due to cost or time constraints. Assuming that the heights are normally (Gaussian) distributed with some unknown mean and variance, the mean and variance can be estimated with MLE while only knowing the heights of some sample of the overall population. MLE would accomplish this by taking the mean and variance as parameters and finding particular parametric values that make the observed results the most probable, given the model.

In general, for a fixed set of data and underlying statistical model, the method of maximum likelihood selects the set of values of the model parameters that maximizes the likelihood function. Maximum-likelihood estimation gives a unified approach to estimation, which is well-defined in the case of the normal distribution and many other problems. However, in some complicated problems, maximum-likelihood estimators are unsuitable or do not exist.

Linear Least Squares

Another popular estimation approach is the linear least squares method. Linear least squares is an approach fitting a statistical model to data in cases where the desired value provided by the model for any data point is expressed linearly in terms of the unknown parameters of the model (as in regression). The resulting fitted model can be used to summarize the data, to estimate unobserved values from the same system, and to understand the mechanisms that may underlie the system.

Mathematically, linear least squares is the problem of approximately solving an over-determined system of linear equations, where the best approximation is defined as that which minimizes the sum of squared differences between the data values and their corresponding modeled values. The approach is called “linear” least squares since the assumed function is linear in the parameters to be estimated. In statistics, linear least squares problems correspond to a statistical model called linear regression which arises as a particular form of regression analysis. One basic form of such a model is an ordinary least squares model.

12.1.4: Estimating the Target Parameter: Interval Estimation

Interval estimation is the use of sample data to calculate an interval of possible (or probable) values of an unknown population parameter.

Learning Objective

Use sample data to calculate interval estimation

Key Points

- The most prevalent forms of interval estimation are confidence intervals (a frequentist method) and credible intervals (a Bayesian method).

- When estimating parameters of a population, we must verify that the sample is random, that data from the population have a Normal distribution with mean

and standard deviation

, and that individual observations are independent. - In order to specify a specific

-distribution, which is different for each sample size

, we use its degrees of freedom, which is denoted by

, and

. - If we wanted to calculate a confidence interval for the population mean, we would use:

, where

is the critical value for the

distribution.

Key Terms

- t-distribution

-

a family of continuous probability disrtibutions that arises when estimating the mean of a normally distributed population in situations where the sample size is small and population standard devition is unknown

- critical value

-

the value corresponding to a given significance level

Interval estimation is the use of sample data to calculate an interval of possible (or probable) values of an unknown population parameter. The most prevalent forms of interval estimation are:

- confidence intervals (a frequentist method); and

- credible intervals (a Bayesian method).

Other common approaches to interval estimation are:

- Tolerance intervals

- Prediction intervals – used mainly in Regression Analysis

- Likelihood intervals

Example: Estimating the Population Mean

How can we construct a confidence interval for an unknown population mean

when we don’t know the population standard deviation

? We need to estimate from the data in order to do this. We also need to verify three conditions about the data:

- The data is from a simple random sample of size

from the population of interest. - Data from the population have a Normal distribution with mean and standard deviation. These are both unknown parameters.

- The method for calculating a confidence interval assumes that individual observations are independent.

The sample mean

has a Normal distribution with mean and standard deviation

. Since we don’t know

, we estimate it using the sample standard deviation

. So, we estimate the standard deviation of

using

, which is called the standard error of the sample mean.

The

-Distribution

When we do not know

, we use

. The distribution of the resulting statistic,

, is not Normal and fits the

-distribution. There is a different

-distribution for each sample size

. In order to specify a specific

-distribution, we use its degrees of freedom, which is denoted by

, and

.

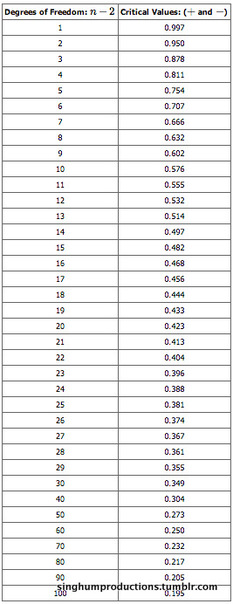

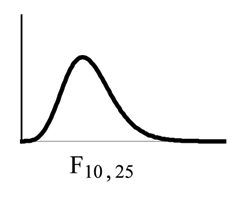

-Distribution

A plot of the

-distribution for several different degrees of freedom.

If we wanted to estimate the population mean, we can now put together everything we’ve learned. First, draw a simple random sample from a population with an unknown mean. A confidence interval for is calculated by:

, where

is the critical value for the

distribution.

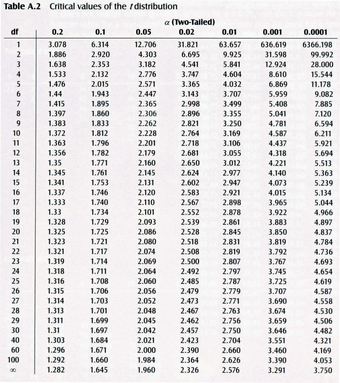

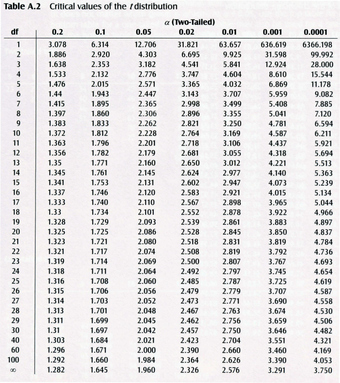

-Table

Critical values of the

-distribution.

12.1.5: Estimating a Population Proportion

In order to estimate a population proportion of some attribute, it is helpful to rely on the proportions observed within a sample of the population.

Learning Objective

Derive the population proportion using confidence intervals

Key Points

- If you want to rely on a sample, it is important that the sample be random (i.e., done in such as way that each member of the underlying population had an equal chance of being selected for the sample).

- As the size of a random sample increases, there is greater “confidence” that the observed sample proportion will be “close” to the actual population proportion.

- For general estimates of a population proportion, we use the formula:

. - To estimate a population proportion to be within a specific confidence interval, we use the formula:

.

Key Terms

- standard error

-

A measure of how spread out data values are around the mean, defined as the square root of the variance.

- confidence interval

-

A type of interval estimate of a population parameter used to indicate the reliability of an estimate.

Facts About Population Proportions

You do not need to be a math major or a professional statistician to have an intuitive appreciation of the following:

- In order to estimate the proportions of some attribute within a population, it would be helpful if you could rely on the proportions observed within a sample of the population.

- If you want to rely on a sample, it is important that the sample be random. This means that the sampling was done in such a way that each member of the underlying population had an equal chance of being selected for the sample.

- The size of the sample is important. As the size of a random sample increases, there is greater “confidence” that the observed sample proportion will be “close” to the actual population proportion. If you were to toss a fair coin ten times, it would not be that surprising to get only 3 or fewer heads (a sample proportion of 30% or less). But if there were 1,000 tosses, most people would agree – based on intuition and general experience – that it would be very unlikely to get only 300 or fewer heads. In other words, with the larger sample size, it is generally apparent that the sample proportion will be closer to the actual “population” proportion of 50%.

- While the sample proportion might be the best estimate of the total population proportion, you would not be very confident that this is exactly the population proportion.

Finding the Population Proportion Using Confidence Intervals

Let’s look at the following example. Assume a political pollster samples 400 voters and finds 208 for Candidate

and 192 for Candidate

. This leads to an estimate of 52% as

‘s support in the population. However, it is unlikely that

‘s support actual will be exactly 52%. We will call 0.52

(pronounced “p-hat”). The population proportion, , is estimated using the sample proportion

. However, the estimate is usually off by what is called the standard error (SE). The SE can be calculated by:

where

is the sample size. So, in this case, the SE is approximately equal to 0.02498. Therefore, a good population proportion for this example would be

.

Often, statisticians like to use specific confidence intervals for

. This is computed slightly differently, using the formula:

where

is the upper critical value of the standard normal distribution. In the above example, if we wished to calculate

with a confidence of 95%, we would use a

-value of 1.960 (found using a critical value table), and we would find

to be estimated as

. So, we could say with 95% confidence that between 47.104% and 56.896% of the people will vote for candidate

.

Critical Value Table

-table used for finding

for a certain level of confidence.

A simple guideline – If you use a confidence level of

, you should expect

of your conclusions to be incorrect. So, if you use a confidence level of 95%, you should expect 5% of your conclusions to be incorrect.

12.2: Statistical Power

12.2.1: Statistical Power

Statistical power helps us answer the question of how much data to collect in order to find reliable results.

Learning Objective

Discuss statistical power as it relates to significance testing and breakdown the factors that influence it.

Key Points

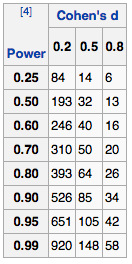

- Statistical power is the probability that a test will find a statistically significant difference between two samples, as a function of the size of the true difference between the two populations.

- Statistical power is the probability of finding a difference that does exist, as opposed to the likelihood of declaring a difference that does not exist.

- Statistical power depends on the significance criterion used in the test, the magnitude of the effect of interest in the population, and the sample size used to the detect the effect.

Key Terms

- significance criterion

-

a statement of how unlikely a positive result must be, if the null hypothesis of no effect is true, for the null hypothesis to be rejected

- null hypothesis

-

A hypothesis set up to be refuted in order to support an alternative hypothesis; presumed true until statistical evidence in the form of a hypothesis test indicates otherwise.

- Type I error

-

An error occurring when the null hypothesis (H0) is true, but is rejected.

In statistical practice, it is possible to miss a real effect simply by not taking enough data. In most cases, this is a problem. For instance, we might miss a viable medicine or fail to notice an important side-effect. How do we know how much data to collect? Statisticians provide the answer in the form of statistical power.

Background

Statistical tests use data from samples to assess, or make inferences about, a statistical population. In the concrete setting of a two-sample comparison, the goal is to assess whether the mean values of some attribute obtained for individuals in two sub-populations differ. For example, to test the null hypothesis that the mean scores of men and women on a test do not differ, samples of men and women are drawn. The test is administered to them, and the mean score of one group is compared to that of the other group using a statistical test such as the two-sample z-test. The power of the test is the probability that the test will find a statistically significant difference between men and women, as a function of the size of the true difference between those two populations. Note that power is the probability of finding a difference that does exist, as opposed to the likelihood of declaring a difference that does not exist (which is known as a Type I error or “false positive”).

Factors Influencing Power

Statistical power may depend on a number of factors. Some of these factors may be particular to a specific testing situation, but at a minimum, power nearly always depends on the following three factors:

- The Statistical Significance Criterion Used in the Test: A significance criterion is a statement of how unlikely a positive result must be, if the null hypothesis of no effect is true, for the null hypothesis to be rejected. The most commonly used criteria are probabilities of 0.05 (5%, 1 in 20), 0.01 (1%, 1 in 100), and 0.001 (0.1%, 1 in 1000). One easy way to increase the power of a test is to carry out a less conservative test by using a larger significance criterion, for example 0.10 instead of 0.05. This increases the chance of rejecting the null hypothesis when the null hypothesis is false, but it also increases the risk of obtaining a statistically significant result (i.e. rejecting the null hypothesis) when the null hypothesis is not false.

- The Magnitude of the Effect of Interest in the Population: The magnitude of the effect of interest in the population can be quantified in terms of an effect size, where there is greater power to detect larger effects. An effect size can be a direct estimate of the quantity of interest, or it can be a standardized measure that also accounts for the variability in the population. If constructed appropriately, a standardized effect size, along with the sample size, will completely determine the power. An unstandardized (direct) effect size will rarely be sufficient to determine the power, as it does not contain information about the variability in the measurements.

- The Sample Size Used to Detect the Effect: The sample size determines the amount of sampling error inherent in a test result. Other things being equal, effects are harder to detect in smaller samples. Increasing sample size is often the easiest way to boost the statistical power of a test.

A Simple Example

Suppose a gambler is convinced that an opponent has an unfair coin. Rather than getting heads half the time and tails half the time, the proportion is different, and the opponent is using this to cheat at incredibly boring coin-flipping games. How do we prove it?

Let’s say we look for a significance criterion of 0.05. That is, if we count up the number of heads after 10 or 100 trials and find a deviation from what we’d expect – half heads, half tails – the coin would be unfair if there’s only a 5% chance of getting a deviation that size or larger with a fair coin. What happens if we flip a coin 10 times and apply these criteria?

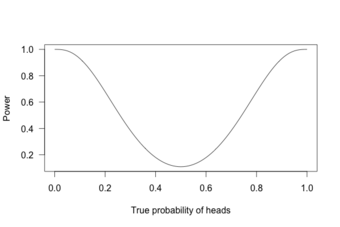

Power Curve 1

This graph shows the true probability of heads when flipping a coin 10 times.

This is called a power curve. Along the horizontal axis, we have the different possibilities for the coin’s true probability of getting heads, corresponding to different levels of unfairness. On the vertical axis is the probability that I will conclude the coin is rigged after 10 tosses, based on the probability of the result.

This graph shows that the coin is rigged to give heads 60% of the time. However, if we flip the coin only 10 times, we only have a 20% chance of concluding that it’s rigged. There’s too little data to separate rigging from random variation. However, what if we flip the coin 100 times?

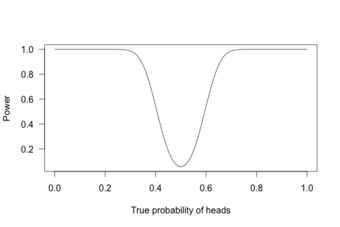

Power Curve 2

This graph shows the true probability of heads when flipping a coin 100 times.

Or 1,000 times?

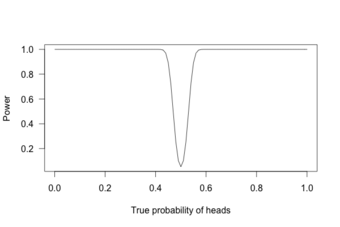

Power Curve 3

This graph shows the true probability of heads when flipping a coin 1,000 times.

With 1,000 flips, we can easily tell if the coin is rigged to give heads 60% of the time. It is overwhelmingly unlikely that we could flip a fair coin 1,000 times and get more than 600 heads.

12.3: Comparing More than Two Means

12.3.1: Elements of a Designed Study

The problem of comparing more than two means results from the increase in Type I error that occurs when statistical tests are used repeatedly.

Learning Objective

Discuss the increasing Type I error that accompanies comparisons of more than two means and the various methods of correcting this error.

Key Points

- Unless the tests are perfectly dependent, the familywide error rate increases as the number of comparisons increases.

- Multiple testing correction refers to re-calculating probabilities obtained from a statistical test which was repeated multiple times.

- In order to retain a prescribed familywise error rate

in an analysis involving more than one comparison, the error rate for each comparison must be more stringent than

. - The most conservative, but free of independency and distribution assumptions method, way of controlling the familywise error rate is known as the Bonferroni correction.

- Multiple comparison procedures are commonly used in an analysis of variance after obtaining a significant omnibus test result, like the ANOVA

-test.

Key Terms

- ANOVA

-

Analysis of variance—a collection of statistical models used to analyze the differences between group means and their associated procedures (such as “variation” among and between groups).

- Boole’s inequality

-

a probability theory stating that for any finite or countable set of events, the probability that at least one of the events happens is no greater than the sum of the probabilities of the individual events

- Bonferroni correction

-

a method used to counteract the problem of multiple comparisons; considered the simplest and most conservative method to control the familywise error rate

For hypothesis testing, the problem of comparing more than two means results from the increase in Type I error that occurs when statistical tests are used repeatedly. If

independent comparisons are performed, the experiment-wide significance level

, also termed FWER for familywise error rate, is given by:

Hence, unless the tests are perfectly dependent,

increases as the number of comparisons increases. If we do not assume that the comparisons are independent, then we can still say:

.

There are different ways to assure that the familywise error rate is at most

. The most conservative, but free of independency and distribution assumptions method, is known as the Bonferroni correction

. A more sensitive correction can be obtained by solving the equation for the familywise error rate of independent comparisons for

.

This yields

, which is known as the Šidák correction. Another procedure is the Holm–Bonferroni method, which uniformly delivers more power than the simple Bonferroni correction by testing only the most extreme

-value (

) against the strictest criterion, and the others (

) against progressively less strict criteria.

Methods

Multiple testing correction refers to re-calculating probabilities obtained from a statistical test which was repeated multiple times. In order to retain a prescribed familywise error rate

in an analysis involving more than one comparison, the error rate for each comparison must be more stringent than

. Boole’s inequality implies that if each test is performed to have type I error rate

, the total error rate will not exceed

. This is called the Bonferroni correction and is one of the most commonly used approaches for multiple comparisons.

Because simple techniques such as the Bonferroni method can be too conservative, there has been a great deal of attention paid to developing better techniques, such that the overall rate of false positives can be maintained without inflating the rate of false negatives unnecessarily. Such methods can be divided into general categories:

- Methods where total alpha can be proved to never exceed 0.05 (or some other chosen value) under any conditions. These methods provide “strong” control against Type I error, in all conditions including a partially correct null hypothesis.

- Methods where total alpha can be proved not to exceed 0.05 except under certain defined conditions.

- Methods which rely on an omnibus test before proceeding to multiple comparisons. Typically these methods require a significant ANOVA/Tukey’s range test before proceeding to multiple comparisons. These methods have “weak” control of Type I error.

- Empirical methods, which control the proportion of Type I errors adaptively, utilizing correlation and distribution characteristics of the observed data.

Post-Hoc Testing of ANOVA

Multiple comparison procedures are commonly used in an analysis of variance after obtaining a significant omnibus test result, like the ANOVA

-test. The significant ANOVA result suggests rejecting the global null hypothesis

that the means are the same across the groups being compared. Multiple comparison procedures are then used to determine which means differ. In a one-way ANOVA involving

group means, there are

pairwise comparisons.

12.3.2: Randomized Design: Single-Factor

Completely randomized designs study the effects of one primary factor without the need to take other nuisance variables into account.

Learning Objective

Discover how randomized experimental design allows researchers to study the effects of a single factor without taking into account other nuisance variables.

Key Points

- In complete random design, the run sequence of the experimental units is determined randomly.

- The levels of the primary factor are also randomly assigned to the experimental units in complete random design.

- All completely randomized designs with one primary factor are defined by three numbers:

(the number of factors, which is always 1 for these designs),

(the number of levels), and

(the number of replications). The total sample size (number of runs) is

.

Key Terms

- factor

-

The explanatory, or independent, variable in an experiment.

- level

-

The specific value of a factor in an experiment.

In the design of experiments, completely randomized designs are for studying the effects of one primary factor without the need to take into account other nuisance variables. The experiment under a completely randomized design compares the values of a response variable based on the different levels of that primary factor. For completely randomized designs, the levels of the primary factor are randomly assigned to the experimental units.

Randomization

In complete random design, the run sequence of the experimental units is determined randomly. For example, if there are 3 levels of the primary factor with each level to be run 2 times, then there are

(where “!” denotes factorial) possible run sequences (or ways to order the experimental trials). Because of the replication, the number of unique orderings is 90 (since

). An example of an unrandomized design would be to always run 2 replications for the first level, then 2 for the second level, and finally 2 for the third level. To randomize the runs, one way would be to put 6 slips of paper in a box with 2 having level 1, 2 having level 2, and 2 having level 3. Before each run, one of the slips would be drawn blindly from the box and the level selected would be used for the next run of the experiment.

Three Key Numbers

All completely randomized designs with one primary factor are defined by three numbers:

(the number of factors, which is always 1 for these designs),

(the number of levels), and

(the number of replications). The total sample size (number of runs) is

. Balance dictates that the number of replications be the same at each level of the factor (this will maximize the sensitivity of subsequent statistical

– (or

-) tests). An example of a completely randomized design using the three numbers is:

-

: 1 factor (

) -

: 4 levels of that single factor (called 1, 2, 3, and 4) -

: 3 replications per level -

: 4 levels multiplied by 3 replications per level gives 12 runs

12.3.3: Multiple Comparisons of Means

ANOVA is useful in the multiple comparisons of means due to its reduction in the Type I error rate.

Learning Objective

Explain the issues that arise when researchers aim to make a number of formal comparisons, and give examples of how these issues can be resolved.

Key Points

- “Multiple comparisons” arise when a statistical analysis encompasses a number of formal comparisons, with the presumption that attention will focus on the strongest differences among all comparisons that are made.

- As the number of comparisons increases, it becomes more likely that the groups being compared will appear to differ in terms of at least one attribute.

- Doing multiple two-sample

-tests would result in an increased chance of committing a Type I error.

Key Terms

- ANOVA

-

Analysis of variance—a collection of statistical models used to analyze the differences between group means and their associated procedures (such as “variation” among and between groups).

- null hypothesis

-

A hypothesis set up to be refuted in order to support an alternative hypothesis; presumed true until statistical evidence in the form of a hypothesis test indicates otherwise.

- Type I error

-

An error occurring when the null hypothesis (H0) is true, but is rejected.

The multiple comparisons problem occurs when one considers a set of statistical inferences simultaneously or infers a subset of parameters selected based on the observed values. Errors in inference, including confidence intervals that fail to include their corresponding population parameters or hypothesis tests that incorrectly reject the null hypothesis, are more likely to occur when one considers the set as a whole. Several statistical techniques have been developed to prevent this, allowing direct comparison of means significance levels for single and multiple comparisons. These techniques generally require a stronger level of observed evidence in order for an individual comparison to be deemed “significant,” so as to compensate for the number of inferences being made.

The Problem

When researching, we typically refer to comparisons of two groups, such as a treatment group and a control group. “Multiple comparisons” arise when a statistical analysis encompasses a number of formal comparisons, with the presumption that attention will focus on the strongest differences among all comparisons that are made. Failure to compensate for multiple comparisons can have important real-world consequences

As the number of comparisons increases, it becomes more likely that the groups being compared will appear to differ in terms of at least one attribute. Our confidence that a result will generalize to independent data should generally be weaker if it is observed as part of an analysis that involves multiple comparisons, rather than an analysis that involves only a single comparison.

For example, if one test is performed at the 5% level, there is only a 5% chance of incorrectly rejecting the null hypothesis if the null hypothesis is true. However, for 100 tests where all null hypotheses are true, the expected number of incorrect rejections is 5. If the tests are independent, the probability of at least one incorrect rejection is 99.4%. These errors are called false positives, or Type I errors.

Techniques have been developed to control the false positive error rate associated with performing multiple statistical tests. Similarly, techniques have been developed to adjust confidence intervals so that the probability of at least one of the intervals not covering its target value is controlled.

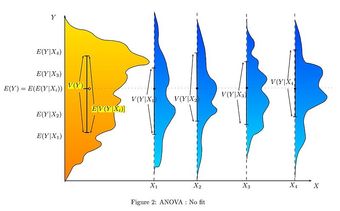

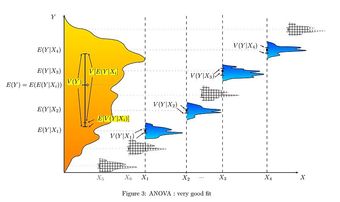

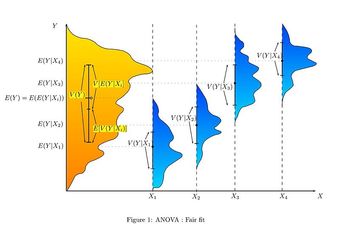

Analysis of Variance (ANOVA) for Comparing Multiple Means

In order to compare the means of more than two samples coming from different treatment groups that are normally distributed with a common variance, an analysis of variance is often used. In its simplest form, ANOVA provides a statistical test of whether or not the means of several groups are equal. Therefore, it generalizes the

-test to more than two groups. Doing multiple two-sample

-tests would result in an increased chance of committing a Type I error. For this reason, ANOVAs are useful in comparing (testing) three or more means (groups or variables) for statistical significance.

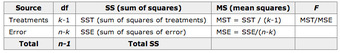

The following table summarizes the calculations that need to be done, which are explained below:

ANOVA Calculation Table

This table summarizes the calculations necessary in an ANOVA for comparing multiple means.

Letting

be the th measurement in the th sample (where

), then:

and the sum of the squares of the treatments is:

where

is the total of the observations in treatment

,

is the number of observations in sample

and CM is the correction of the mean:

The sum of squares of the error SSE is given by:

and

Example

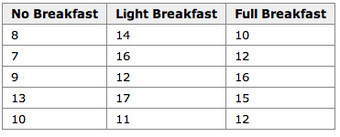

An example for the effect of breakfast on attention span (in minutes) for small children is summarized in the table below:

.

Breakfast and Children’s Attention Span

This table summarizes the effect of breakfast on attention span (in minutes) for small children.

The hypothesis test would be:

versus:

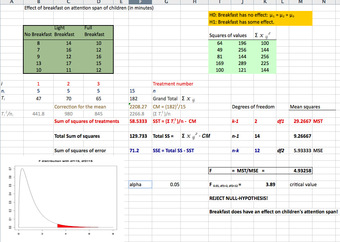

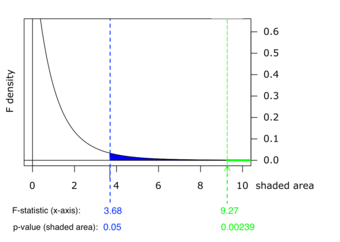

The solution to the test can be seen in the figure below:

.

Excel Solution

This image shows the solution to our ANOVA example performed in Excel.

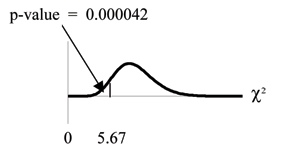

The test statistic

is equal to 4.9326. The corresponding right-tail probability is 0.027, which means that if the significance level is 0.05, the test statistic would be in the rejection region, and therefore, the null-hypothesis would be rejected.

Hence, this indicates that the means are not equal (i.e., that sample values give sufficient evidence that not all means are the same). In terms of the example this means that breakfast (and its size) does have an effect on children’s attention span.

12.3.4: Randomized Block Design

Block design is the arranging of experimental units into groups (blocks) that are similar to one another, to control for certain factors.

Learning Objective

Reconstruct how the use of randomized block design is used to control the effects of nuisance factors.

Key Points

- The basic concept of blocking is to create homogeneous blocks in which the nuisance factors are held constant, and the factor of interest is allowed to vary.

- Nuisance factors are those that may affect the measured result, but are not of primary interest.

- The general rule is: “Block what you can; randomize what you cannot. “ Blocking is used to remove the effects of a few of the most important nuisance variables. Randomization is then used to reduce the contaminating effects of the remaining nuisance variables.

Key Terms

- blocking

-

A schedule for conducting treatment combinations in an experimental study such that any effects on the experimental results due to a known change in raw materials, operators, machines, etc., become concentrated in the levels of the blocking variable.

- nuisance factors

-

Variables that may affect the measured results, but are not of primary interest.

What is Blocking?

In the statistical theory of the design of experiments, blocking is the arranging of experimental units in groups (blocks) that are similar to one another. Typically, a blocking factor is a source of variability that is not of primary interest to the experimenter. An example of a blocking factor might be the sex of a patient; by blocking on sex, this source of variability is controlled for, thus leading to greater accuracy.

Nuisance Factors

For randomized block designs, there is one factor or variable that is of primary interest. However, there are also several other nuisance factors. Nuisance factors are those that may affect the measured result, but are not of primary interest. For example, in applying a treatment, nuisance factors might be the specific operator who prepared the treatment, the time of day the experiment was run, and the room temperature. All experiments have nuisance factors. The experimenter will typically need to spend some time deciding which nuisance factors are important enough to keep track of or control, if possible, during the experiment.

When we can control nuisance factors, an important technique known as blocking can be used to reduce or eliminate the contribution to experimental error contributed by nuisance factors. The basic concept is to create homogeneous blocks in which the nuisance factors are held constant and the factor of interest is allowed to vary. Within blocks, it is possible to assess the effect of different levels of the factor of interest without having to worry about variations due to changes of the block factors, which are accounted for in the analysis.

The general rule is: “Block what you can; randomize what you cannot. ” Blocking is used to remove the effects of a few of the most important nuisance variables. Randomization is then used to reduce the contaminating effects of the remaining nuisance variables.

Example of a Blocked Design

The progress of a particular type of cancer differs in women and men. A clinical experiment to compare two therapies for their cancer therefore treats gender as a blocking variable, as illustrated in . Two separate randomizations are done—one assigning the female subjects to the treatments and one assigning the male subjects. It is important to note that there is no randomization involved in making up the blocks. They are groups of subjects that differ in some way (gender in this case) that is apparent before the experiment begins.

Block Design

An example of a blocked design, where the blocking factor is gender.

12.3.5: Factorial Experiments: Two Factors

A full factorial experiment is an experiment whose design consists of two or more factors with discrete possible levels.

Learning Objective

Outline the design of a factorial experiment, the corresponding notations, and the resulting analysis.

Key Points

- A full factorial experiment allows the investigator to study the effect of each factor on the response variable, as well as the effects of interactions between factors on the response variable.

- The experimental units of a factorial experiment take on all possible combinations of the discrete levels across all such factors.

- To save space, the points in a two-level factorial experiment are often abbreviated with strings of plus and minus signs.

Key Terms

- level

-

The specific value of a factor in an experiment.

- factor

-

The explanatory, or independent, variable in an experiment.

A full factorial experiment is an experiment whose design consists of two or more factors, each with discrete possible values (or levels), and whose experimental units take on all possible combinations of these levels across all such factors. A full factorial design may also be called a fully crossed design. Such an experiment allows the investigator to study the effect of each factor on the response variable, as well as the effects of interactions between factors on the response variable.

For the vast majority of factorial experiments, each factor has only two levels. For example, with two factors each taking two levels, a factorial experiment would have four treatment combinations in total, and is usually called a 2 by 2 factorial design.

If the number of combinations in a full factorial design is too high to be logistically feasible, a fractional factorial design may be done, in which some of the possible combinations (usually at least half) are omitted.

Notation

To save space, the points in a two-level factorial experiment are often abbreviated with strings of plus and minus signs. The strings have as many symbols as factors, and their values dictate the level of each factor: conventionally,

for the first (or low) level, and

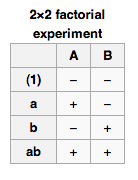

for the second (or high) level .

Factorial Notation

This table shows the notation used for a 2×2 factorial experiment.

The factorial points can also be abbreviated by (1),

,

, and

, where the presence of a letter indicates that the specified factor is at its high (or second) level and the absence of a letter indicates that the specified factor is at its low (or first) level (for example,

indicates that factor

is on its high setting, while all other factors are at their low (or first) setting). (1) is used to indicate that all factors are at their lowest (or first) values.

Analysis

A factorial experiment can be analyzed using ANOVA or regression analysis. It is relatively easy to estimate the main effect for a factor. To compute the main effect of a factor

, subtract the average response of all experimental runs for which

was at its low (or first) level from the average response of all experimental runs for which

was at its high (or second) level.

Other useful exploratory analysis tools for factorial experiments include main effects plots, interaction plots, and a normal probability plot of the estimated effects.

When the factors are continuous, two-level factorial designs assume that the effects are linear. If a quadratic effect is expected for a factor, a more complicated experiment should be used, such as a central composite design.

Example

The simplest factorial experiment contains two levels for each of two factors. Suppose an engineer wishes to study the total power used by each of two different motors,

and

, running at each of two different speeds, 2000 or 3000 RPM. The factorial experiment would consist of four experimental units: motor

at 2000 RPM, motor

at 2000 RPM, motor

at 3000 RPM, and motor

at 3000 RPM. Each combination of a single level selected from every factor is present once.

This experiment is an example of a

(or 2 by 2) factorial experiment, so named because it considers two levels (the base) for each of two factors (the power or superscript), or

, producing

factorial points.

Designs can involve many independent variables. As a further example, the effects of three input variables can be evaluated in eight experimental conditions shown as the corners of a cube.

Factorial Design

This figure is a sketch of a 2 by 3 factorial design.

This can be conducted with or without replication, depending on its intended purpose and available resources. It will provide the effects of the three independent variables on the dependent variable and possible interactions.

12.4: Confidence Intervals

12.4.1: What Is a Confidence Interval?

A confidence interval is a type of interval estimate of a population parameter and is used to indicate the reliability of an estimate.

Learning Objective

Explain the principle behind confidence intervals in statistical inference

Key Points

- In inferential statistics, we use sample data to make generalizations about an unknown population.

- A confidence interval is a type of estimate, like a sample average or sample standard deviation, but instead of being just one number it is an interval of numbers.

- The interval of numbers is an estimated range of values calculated from a given set of sample data.

- The principle behind confidence intervals was formulated to provide an answer to the question raised in statistical inference: how do we resolve the uncertainty inherent in results derived from data that are themselves only a randomly selected subset of a population?

- Note that the confidence interval is likely to include an unknown population parameter.

Key Terms

- sample

-

a subset of a population selected for measurement, observation, or questioning to provide statistical information about the population

- confidence interval

-

A type of interval estimate of a population parameter used to indicate the reliability of an estimate.

- population

-

a group of units (persons, objects, or other items) enumerated in a census or from which a sample is drawn

Example

- A confidence interval can be used to describe how reliable survey results are. In a poll of election voting-intentions, the result might be that 40% of respondents intend to vote for a certain party. A 90% confidence interval for the proportion in the whole population having the same intention on the survey date might be 38% to 42%. From the same data one may calculate a 95% confidence interval, which in this case might be 36% to 44%. A major factor determining the length of a confidence interval is the size of the sample used in the estimation procedure, for example the number of people taking part in a survey.

Suppose you are trying to determine the average rent of a two-bedroom apartment in your town. You might look in the classified section of the newpaper, write down several rents listed, and then average them together—from this you would obtain a point estimate of the true mean. If you are trying to determine the percent of times you make a basket when shooting a basketball, you might count the number of shots you make, and divide that by the number of shots you attempted. In this case, you would obtain a point estimate for the true proportion.

In inferential statistics, we use sample data to make generalizations about an unknown population. The sample data help help us to make an estimate of a population parameter. We realize that the point estimate is most likely not the exact value of the population parameter, but close to it. After calculating point estimates, we construct confidence intervals in which we believe the parameter lies.

A confidence interval is a type of estimate (like a sample average or sample standard deviation), in the form of an interval of numbers, rather than only one number. It is an observed interval (i.e., it is calculated from the observations), used to indicate the reliability of an estimate. The interval of numbers is an estimated range of values calculated from a given set of sample data. How frequently the observed interval contains the parameter is determined by the confidence level or confidence coefficient. Note that the confidence interval is likely to include an unknown population parameter.

Philosophical Issues

The principle behind confidence intervals provides an answer to the question raised in statistical inference: how do we resolve the uncertainty inherent in results derived from data that (in and of itself) is only a randomly selected subset of a population? Bayesian inference provides further answers in the form of credible intervals.

Confidence intervals correspond to a chosen rule for determining the confidence bounds; this rule is essentially determined before any data are obtained or before an experiment is done. The rule is defined such that over all possible datasets that might be obtained, there is a high probability (“high” is specifically quantified) that the interval determined by the rule will include the true value of the quantity under consideration—a fairly straightforward and reasonable way of specifying a rule for determining uncertainty intervals.

Ostensibly, the Bayesian approach offers intervals that (subject to acceptance of an interpretation of “probability” as Bayesian probability) offer the interpretation that the specific interval calculated from a given dataset has a certain probability of including the true value (conditional on the data and other information available). The confidence interval approach does not allow this, as in this formulation (and at this same stage) both the bounds of the interval and the true values are fixed values; no randomness is involved.

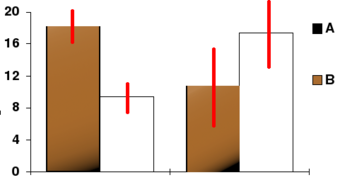

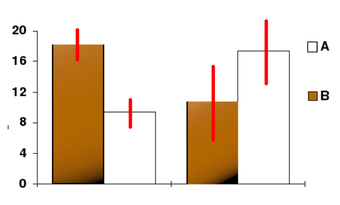

Confidence Interval

In this bar chart, the top ends of the bars indicate observation means and the red line segments represent the confidence intervals surrounding them. Although the bars are shown as symmetric in this chart, they do not have to be symmetric.

12.4.2: Interpreting a Confidence Interval

For users of frequentist methods, various interpretations of a confidence interval can be given.

Learning Objective

Construct a confidence intervals based on the point estimate of the quantity being considered

Key Points

- Methods for deriving confidence intervals include descriptive statistics, likelihood theory, estimating equations, significance testing, and bootstrapping.

- The confidence interval can be expressed in terms of samples: “Were this procedure to be repeated on multiple samples, the calculated confidence interval would encompass the true population parameter 90% of the time”.

- The explanation of a confidence interval can amount to something like: “The confidence interval represents values for the population parameter, for which the difference between the parameter and the observed estimate is not statistically significant at the 10% level”.

- The probability associated with a confidence interval may also be considered from a pre-experiment point of view, in the same context in which arguments for the random allocation of treatments to study items are made.

Key Terms

- confidence interval

-

A type of interval estimate of a population parameter used to indicate the reliability of an estimate.

- frequentist

-

An advocate of frequency probability.

Deriving a Confidence Interval

For non-standard applications, there are several routes that might be taken to derive a rule for the construction of confidence intervals. Established rules for standard procedures might be justified or explained via several of these routes. Typically a rule for constructing confidence intervals is closely tied to a particular way of finding a point estimate of the quantity being considered.

- Descriptive statistics – This is closely related to the method of moments for estimation. A simple example arises where the quantity to be estimated is the mean, in which case a natural estimate is the sample mean. The usual arguments indicate that the sample variance can be used to estimate the variance of the sample mean. A naive confidence interval for the true mean can be constructed centered on the sample mean with a width which is a multiple of the square root of the sample variance.

- Likelihood theory – The theory here is for estimates constructed using the maximum likelihood principle. It provides for two ways of constructing confidence intervals (or confidence regions) for the estimates.

- Estimating equations – The estimation approach here can be considered as both a generalization of the method of moments and a generalization of the maximum likelihood approach. There are corresponding generalizations of the results of maximum likelihood theory that allow confidence intervals to be constructed based on estimates derived from estimating equations.

- Significance testing – If significance tests are available for general values of a parameter, then confidence intervals/regions can be constructed by including in the

confidence region all those points for which the significance test of the null hypothesis that the true value is the given value is not rejected at a significance level of

. - Bootstrapping – In situations where the distributional assumptions for the above methods are uncertain or violated, resampling methods allow construction of confidence intervals or prediction intervals. The observed data distribution and the internal correlations are used as the surrogate for the correlations in the wider population.

Meaning and Interpretation

For users of frequentist methods, various interpretations of a confidence interval can be given:

- The confidence interval can be expressed in terms of samples (or repeated samples): “Were this procedure to be repeated on multiple samples, the calculated confidence interval (which would differ for each sample) would encompass the true population parameter 90% of the time. ” Note that this does not refer to repeated measurement of the same sample, but repeated sampling.

- The explanation of a confidence interval can amount to something like: “The confidence interval represents values for the population parameter, for which the difference between the parameter and the observed estimate is not statistically significant at the 10% level. ” In fact, this relates to one particular way in which a confidence interval may be constructed.

- The probability associated with a confidence interval may also be considered from a pre-experiment point of view, in the same context in which arguments for the random allocation of treatments to study items are made. Here, the experimenter sets out the way in which they intend to calculate a confidence interval. Before performing the actual experiment, they know that the end calculation of that interval will have a certain chance of covering the true but unknown value. This is very similar to the “repeated sample” interpretation above, except that it avoids relying on considering hypothetical repeats of a sampling procedure that may not be repeatable in any meaningful sense.

In each of the above, the following applies: If the true value of the parameter lies outside the 90% confidence interval once it has been calculated, then an event has occurred which had a probability of 10% (or less) of happening by chance.

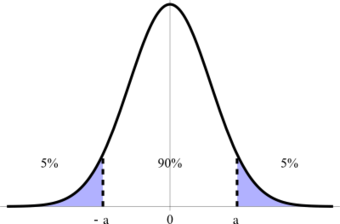

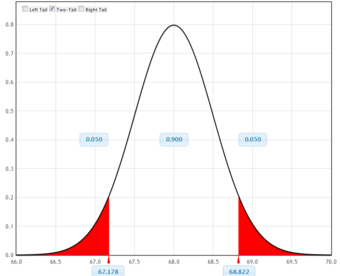

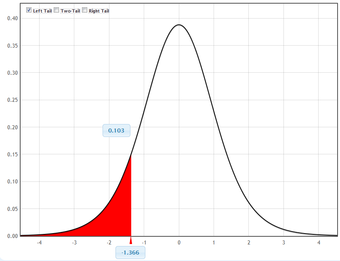

Confidence Interval

This figure illustrates a 90% confidence interval on a standard normal curve.

12.4.3: Caveat Emptor and the Gallup Poll

Readers of polls, such as the Gallup Poll, should exercise Caveat Emptor by taking into account the poll’s margin of error.

Learning Objective

Explain how margin of error plays a significant role in making purchasing decisions

Key Points

- Historically, the Gallup Poll has measured and tracked the public’s attitudes concerning virtually every political, social, and economic issue of the day, including highly sensitive or controversial subjects.

- Caveat emptor is Latin for “let the buyer beware”—the property law principle that controls the sale of real property after the date of closing, but may also apply to sales of other goods.

- The margin of error is usually defined as the “radius” (or half the width) of a confidence interval for a particular statistic from a survey.

- The larger the margin of error, the less confidence one should have that the poll’s reported results are close to the “true” figures — that is, the figures for the whole population.

- Like confidence intervals, the margin of error can be defined for any desired confidence level, but usually a level of 90%, 95% or 99% is chosen (typically 95%).

Key Terms

- caveat emptor

-

Latin for “let the buyer beware”—the property law principle that controls the sale of real property after the date of closing, but may also apply to sales of other goods.

- margin of error

-

An expression of the lack of precision in the results obtained from a sample.

Gallup Poll

The Gallup Poll is the division of the Gallup Company that regularly conducts public opinion polls in more than 140 countries around the world. Gallup Polls are often referenced in the mass media as a reliable and objective measurement of public opinion. Gallup Poll results, analyses, and videos are published daily on Gallup.com in the form of data-driven news.

Since inception, Gallup Polls have been used to measure and track public attitudes concerning a wide range of political, social, and economic issues (including highly sensitive or controversial subjects). General and regional-specific questions, developed in collaboration with the world’s leading behavioral economists, are organized into powerful indexes and topic areas that correlate with real-world outcomes.

Caveat Emptor

Caveat emptor is Latin for “let the buyer beware.” Generally, caveat emptor is the property law principle that controls the sale of real property after the date of closing, but may also apply to sales of other goods. Under its principle, a buyer cannot recover damages from a seller for defects on the property that render the property unfit for ordinary purposes. The only exception is if the seller actively conceals latent defects, or otherwise states material misrepresentations amounting to fraud.

This principle can also be applied to the reading of polling information. The reader should “beware” of possible errors and biases present that might skew the information being represented. Readers should pay close attention to a poll’s margin of error.

Margin of Error

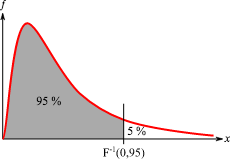

The margin of error statistic expresses the amount of random sampling error in a survey’s results. The larger the margin of error, the less confidence one should have that the poll’s reported results represent “true” figures (i.e., figures for the whole population). Margin of error occurs whenever a population is incompletely sampled.

The margin of error is usually defined as the “radius” (half the width) of a confidence interval for a particular statistic from a survey. When a single, global margin of error is reported, it refers to the maximum margin of error for all reported percentages using the full sample from the survey. If the statistic is a percentage, this maximum margin of error is calculated as the radius of the confidence interval for a reported percentage of 50%.

For example, if the true value is 50 percentage points, and the statistic has a confidence interval radius of 5 percentage points, then we say the margin of error is 5 percentage points. As another example, if the true value is 50 people, and the statistic has a confidence interval radius of 5 people, then we might say the margin of error is 5 people.

In some cases, the margin of error is not expressed as an “absolute” quantity; rather, it is expressed as a “relative” quantity. For example, suppose the true value is 50 people, and the statistic has a confidence interval radius of 5 people. If we use the “absolute” definition, the margin of error would be 5 people. If we use the “relative” definition, then we express this absolute margin of error as a percent of the true value. So in this case, the absolute margin of error is 5 people, but the “percent relative” margin of error is 10% (10% of 50 people is 5 people).

Like confidence intervals, the margin of error can be defined for any desired confidence level, but usually a level of 90%, 95% or 99% is chosen (typically 95%). This level is the probability that a margin of error around the reported percentage would include the “true” percentage. Along with the confidence level, the sample design for a survey (in particular its sample size) determines the magnitude of the margin of error. A larger sample size produces a smaller margin of error, all else remaining equal.

If the exact confidence intervals are used, then the margin of error takes into account both sampling error and non-sampling error. If an approximate confidence interval is used (for example, by assuming the distribution is normal and then modeling the confidence interval accordingly), then the margin of error may only take random sampling error into account. It does not represent other potential sources of error or bias, such as a non-representative sample-design, poorly phrased questions, people lying or refusing to respond, the exclusion of people who could not be contacted, or miscounts and miscalculations.

Different Confidence Levels

For a simple random sample from a large population, the maximum margin of error is a simple re-expression of the sample size

. The numerators of these equations are rounded to two decimal places.

- Margin of error at 99% confidence

- Margin of error at 95% confidence

- Margin of error at 90% confidence

If an article about a poll does not report the margin of error, but does state that a simple random sample of a certain size was used, the margin of error can be calculated for a desired degree of confidence using one of the above formulae. Also, if the 95% margin of error is given, one can find the 99% margin of error by increasing the reported margin of error by about 30%.

As an example of the above, a random sample of size 400 will give a margin of error, at a 95% confidence level, of

or 0.049 (just under 5%). A random sample of size 1,600 will give a margin of error of

, or 0.0245 (just under 2.5%). A random sample of size 10,000 will give a margin of error at the 95% confidence level of

, or 0.0098 – just under 1%.

Margin for Error

The top portion of this graphic depicts probability densities that show the relative likelihood that the “true” percentage is in a particular area given a reported percentage of 50%. The bottom portion shows the 95% confidence intervals (horizontal line segments), the corresponding margins of error (on the left), and sample sizes (on the right). In other words, for each sample size, one is 95% confident that the “true” percentage is in the region indicated by the corresponding segment. The larger the sample is, the smaller the margin of error is.

12.4.4: Level of Confidence

The proportion of confidence intervals that contain the true value of a parameter will match the confidence level.

Learning Objective

Explain the use of confidence intervals in estimating population parameters

Key Points

- The presence of a confidence level is guaranteed by the reasoning underlying the construction of confidence intervals.

- Confidence level is represented by a percentage.

- The desired level of confidence is set by the researcher (not determined by data).

- In applied practice, confidence intervals are typically stated at the 95% confidence level.

Key Term

- confidence level

-

The probability that a measured quantity will fall within a given confidence interval.

If confidence intervals are constructed across many separate data analyses of repeated (and possibly different) experiments, the proportion of such intervals that contain the true value of the parameter will match the confidence level. This is guaranteed by the reasoning underlying the construction of confidence intervals.

Confidence intervals consist of a range of values (interval) that act as good estimates of the unknown population parameter . However, in infrequent cases, none of these values may cover the value of the parameter. The level of confidence of the confidence interval would indicate the probability that the confidence range captures this true population parameter given a distribution of samples. It does not describe any single sample. This value is represented by a percentage, so when we say, “we are 99% confident that the true value of the parameter is in our confidence interval,” we express that 99% of the observed confidence intervals will hold the true value of the parameter.

Confidence Level

In this bar chart, the top ends of the bars indicate observation means and the red line segments represent the confidence intervals surrounding them. Although the bars are shown as symmetric in this chart, they do not have to be symmetric.

After a sample is taken, the population parameter is either in the interval made or not — there is no chance. The desired level of confidence is set by the researcher (not determined by data). If a corresponding hypothesis test is performed, the confidence level is the complement of respective level of significance (i.e., a 95% confidence interval reflects a significance level of 0.05).

In applied practice, confidence intervals are typically stated at the 95% confidence level. However, when presented graphically, confidence intervals can be shown at several confidence levels (for example, 50%, 95% and 99%).

12.4.5: Determining Sample Size

A major factor determining the length of a confidence interval is the size of the sample used in the estimation procedure.

Learning Objective

Assess the most appropriate way to choose a sample size in a given situation

Key Points

- Sample size determination is the act of choosing the number of observations or replicates to include in a statistical sample.

- The sample size is an important feature of any empirical study in which the goal is to make inferences about a population from a sample.

- In practice, the sample size used in a study is determined based on the expense of data collection and the need to have sufficient statistical power.

- Larger sample sizes generally lead to increased precision when estimating unknown parameters.

Key Terms

- law of large numbers

-

The statistical tendency toward a fixed ratio in the results when an experiment is repeated a large number of times.

- central limit theorem

-

The theorem that states: If the sum of independent identically distributed random variables has a finite variance, then it will be (approximately) normally distributed.

- Stratified Sampling

-

A method of sampling that involves dividing members of the population into homogeneous subgroups before sampling.

Sample size, such as the number of people taking part in a survey, determines the length of the estimated confidence interval. Sample size determination is the act of choosing the number of observations or replicates to include in a statistical sample. The sample size is an important feature of any empirical study in which the goal is to make inferences about a population from a sample.

In practice, the sample size used in a study is determined based on the expense of data collection and the need to have sufficient statistical power. In complicated studies there may be several different sample sizes involved. For example, in a survey sampling involving stratified sampling there would be different sample sizes for each population. In a census, data are collected on the entire population, hence the sample size is equal to the population size. In experimental design, where a study may be divided into different treatment groups, there may be different sample sizes for each group.

Sample sizes may be chosen in several different ways:

- expedience, including those items readily available or convenient to collect (choice of small sample sizes, though sometimes necessary, can result in wide confidence intervals or risks of errors in statistical hypothesis testing)

- using a target variance for an estimate to be derived from the sample eventually obtained

- using a target for the power of a statistical test to be applied once the sample is collected

Larger sample sizes generally lead to increased precision when estimating unknown parameters. For example, if we wish to know the proportion of a certain species of fish that is infected with a pathogen, we would generally have a more accurate estimate of this proportion if we sampled and examined 200, rather than 100 fish. Several fundamental facts of mathematical statistics describe this phenomenon, including the law of large numbers and the central limit theorem.

In some situations, the increase in accuracy for larger sample sizes is minimal, or even non-existent. This can result from the presence of systematic errors or strong dependence in the data, or if the data follow a heavy-tailed distribution.

Sample sizes are judged based on the quality of the resulting estimates. For example, if a proportion is being estimated, one may wish to have the 95% confidence interval be less than 0.06 units wide. Alternatively, sample size may be assessed based on the power of a hypothesis test. For example, if we are comparing the support for a certain political candidate among women with the support for that candidate among men, we may wish to have 80% power to detect a difference in the support levels of 0.04 units.

Calculating the Sample Size

If researchers desire a specific margin of error, then they can use the error bound formula to calculate the required sample size. The error bound formula for a population proportion is:

Solving for

gives an equation for the sample size:

12.4.6: Confidence Interval for a Population Proportion

The procedure to find the confidence interval and the confidence level for a proportion is similar to that for the population mean.

Learning Objective

Calculate the confidence interval given the estimated proportion of successes

Key Points

- Confidence intervals can be calculated for the true proportion of stocks that go up or down each week and for the true proportion of households in the United States that own personal computers.

- To form a proportion, take

(the random variable for the number of successes) and divide it by

(the number of trials, or the sample size). - If we divide the random variable by

, the mean by

, and the standard deviation by

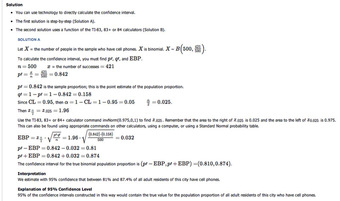

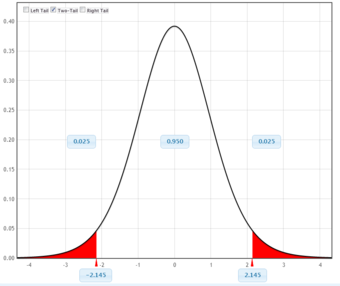

, we get a normal distribution of proportions with